Table of Contents

1 An example of a small Multi-Player simulation, with Centralized Algorithms

1.1 Creating the problem

1.1.1 Parameters for the simulation

1.1.2 Three MAB problems with Bernoulli arms

1.1.3 Some RL algorithms

1.2 Creating the EvaluatorMultiPlayers objects

1.3 Solving the problem

1.4 Plotting the results

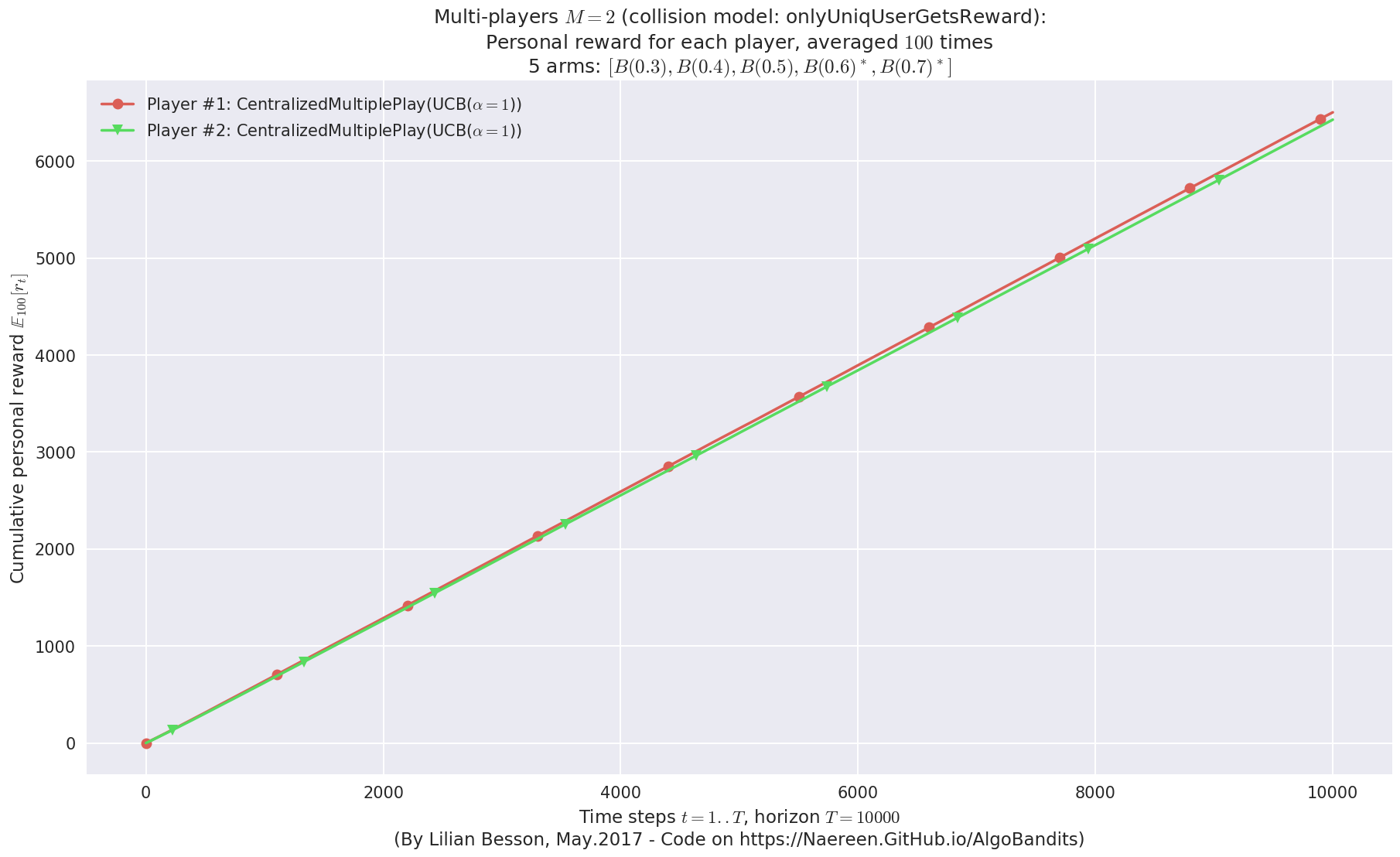

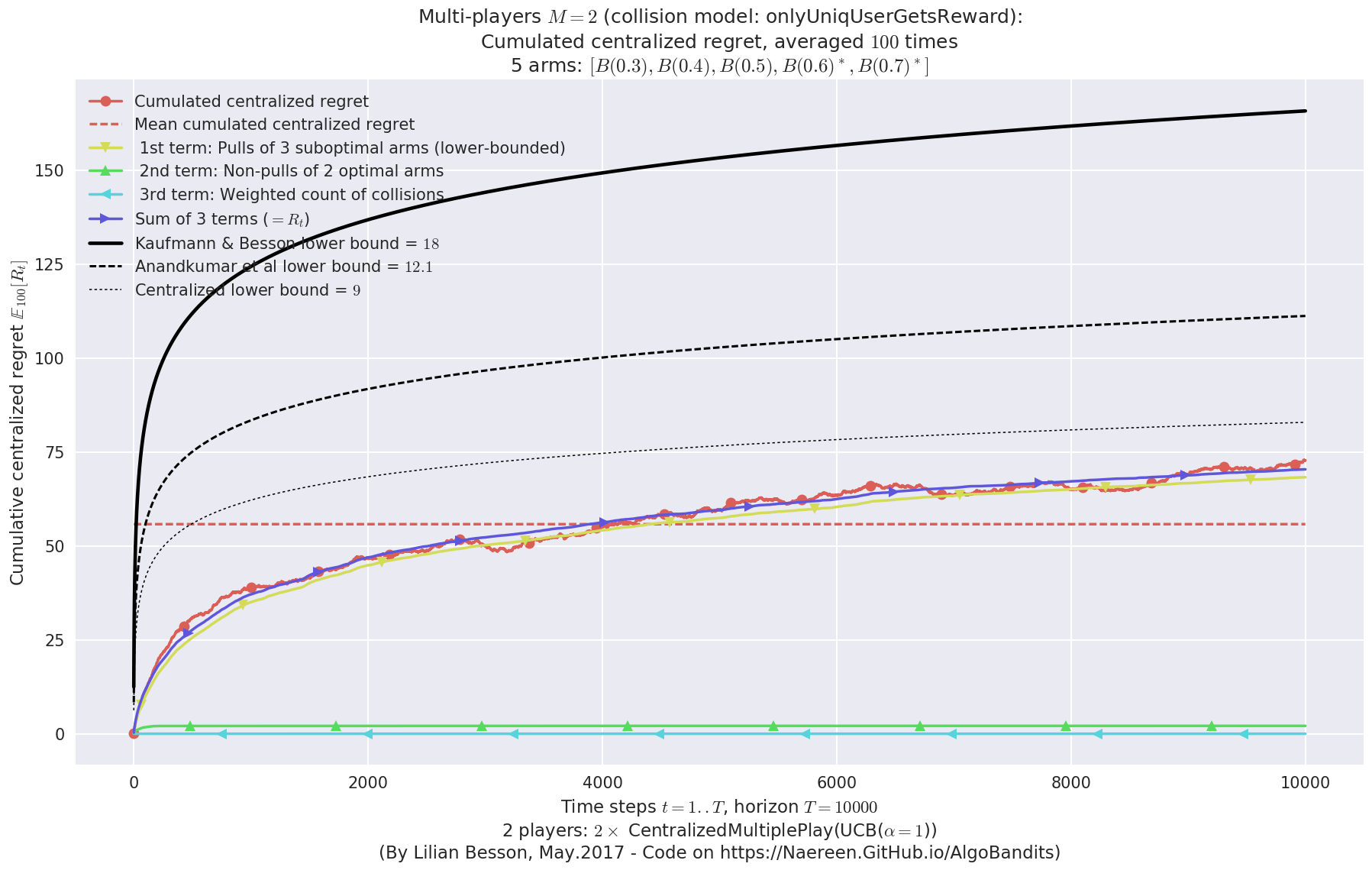

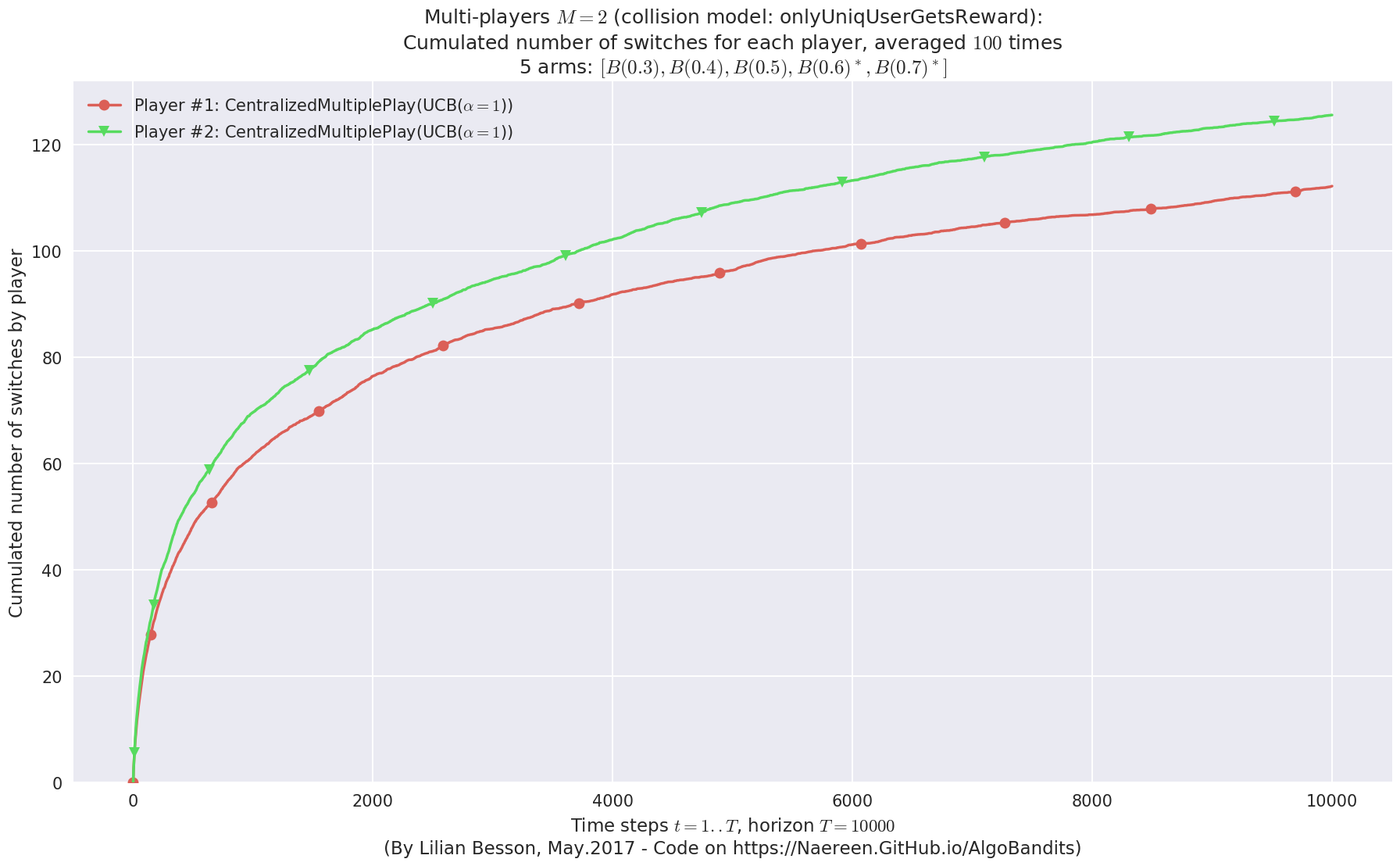

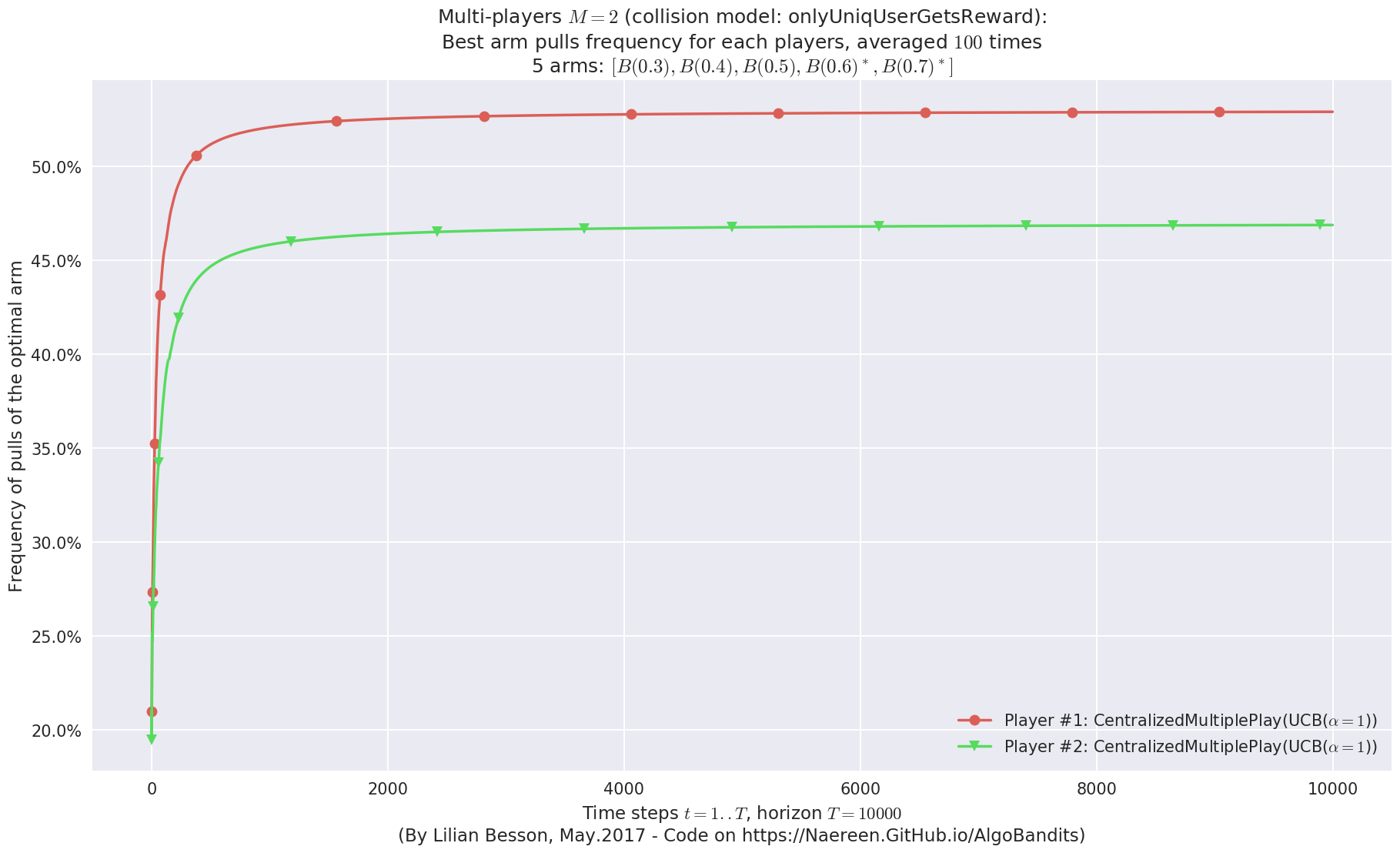

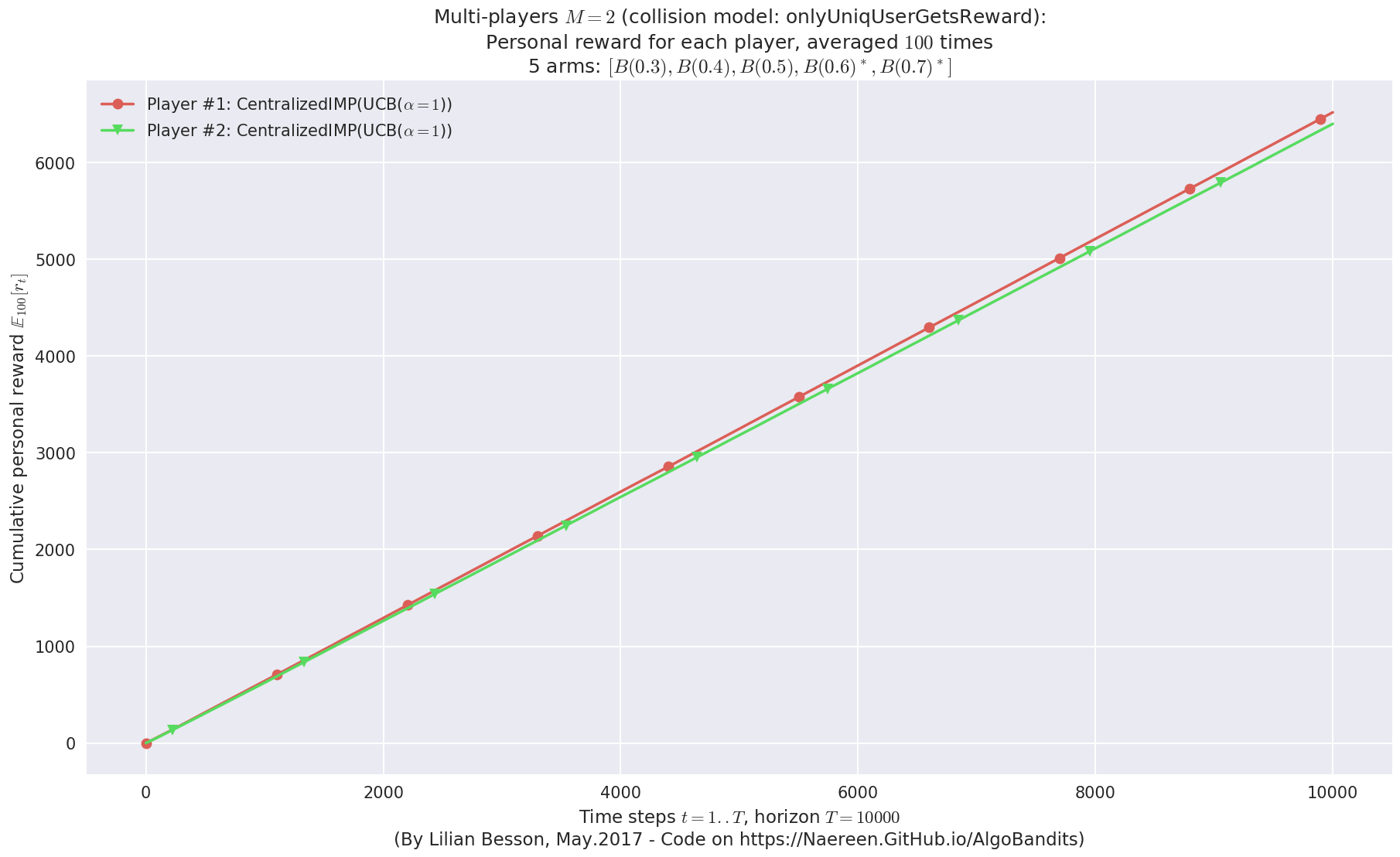

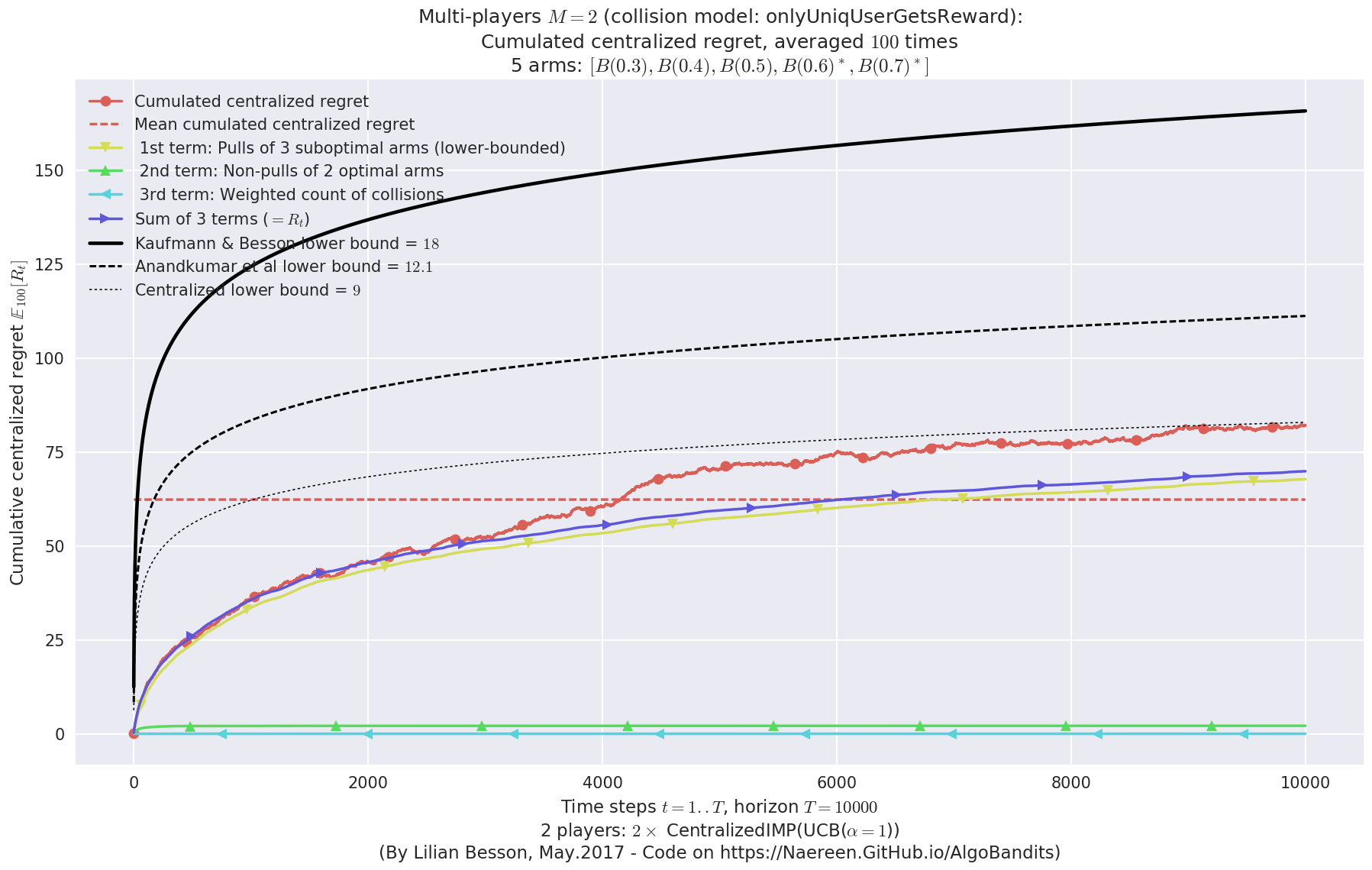

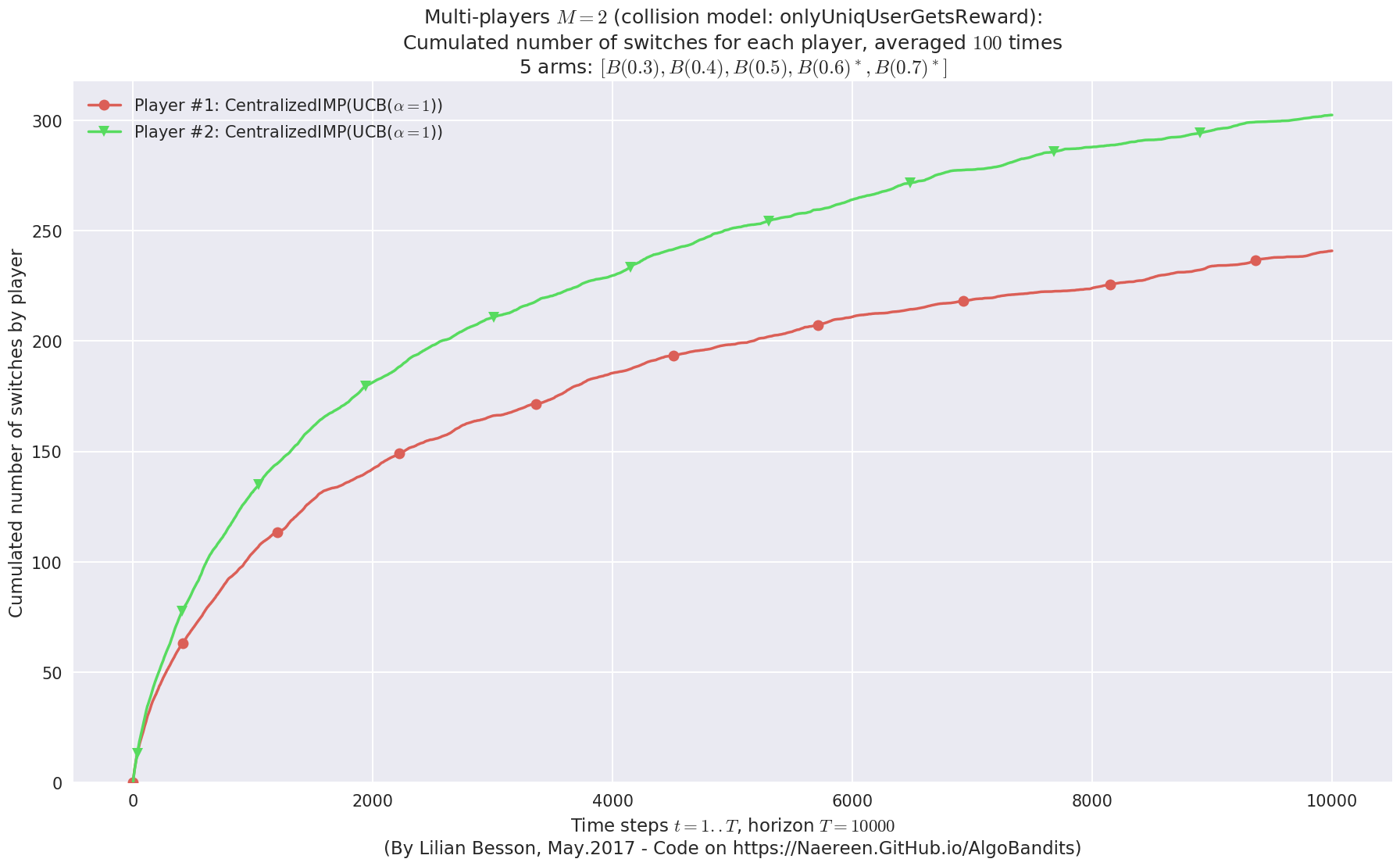

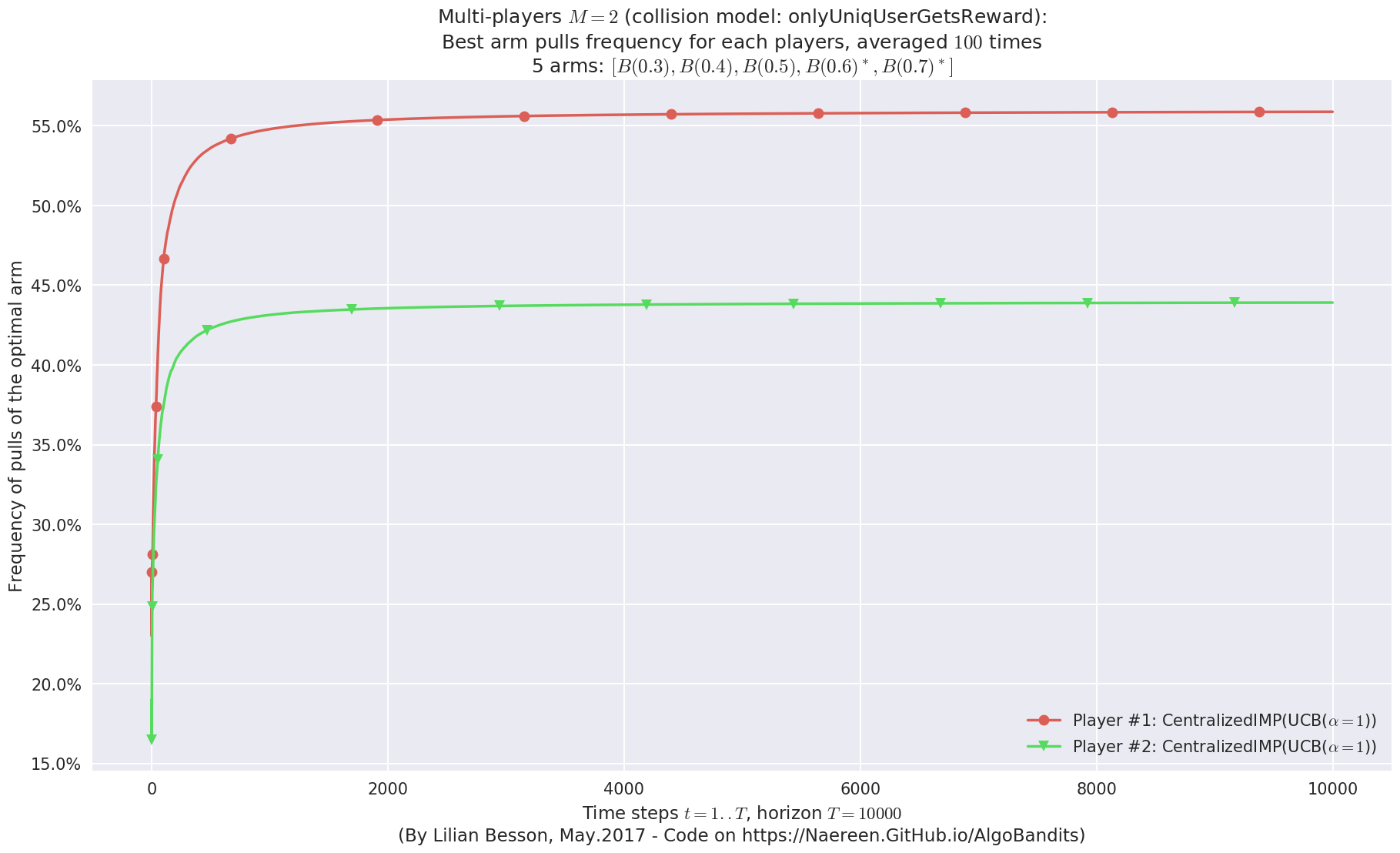

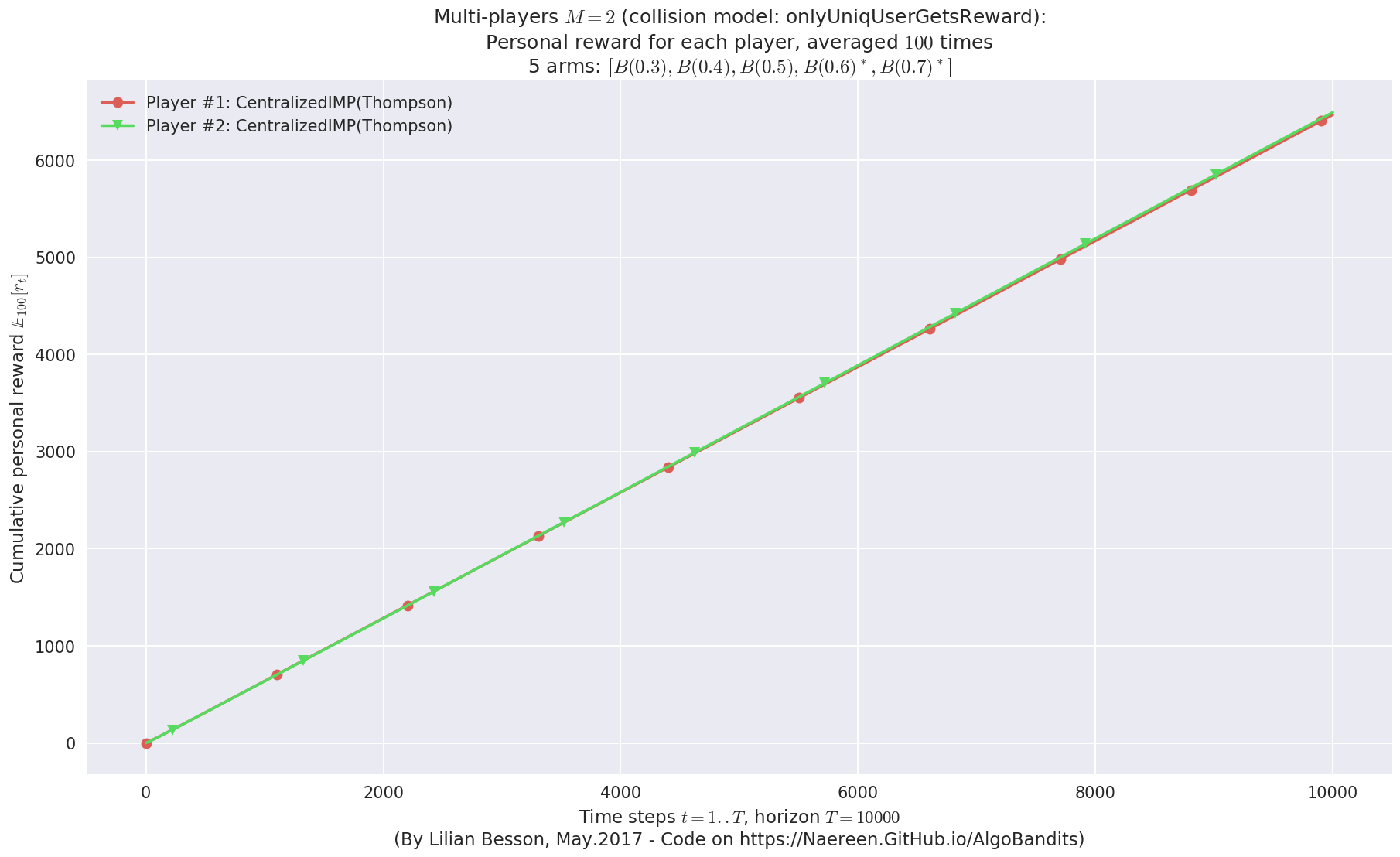

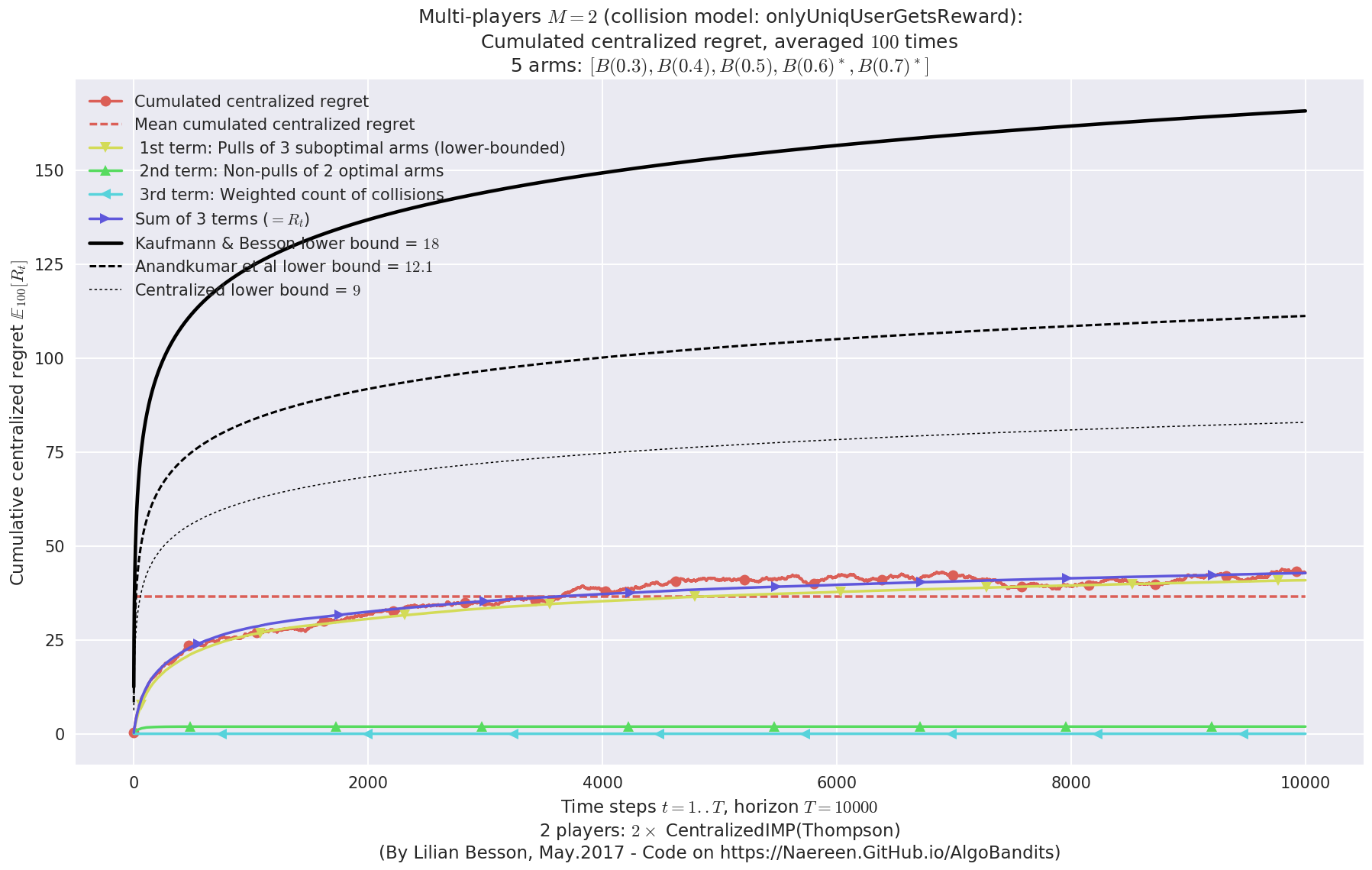

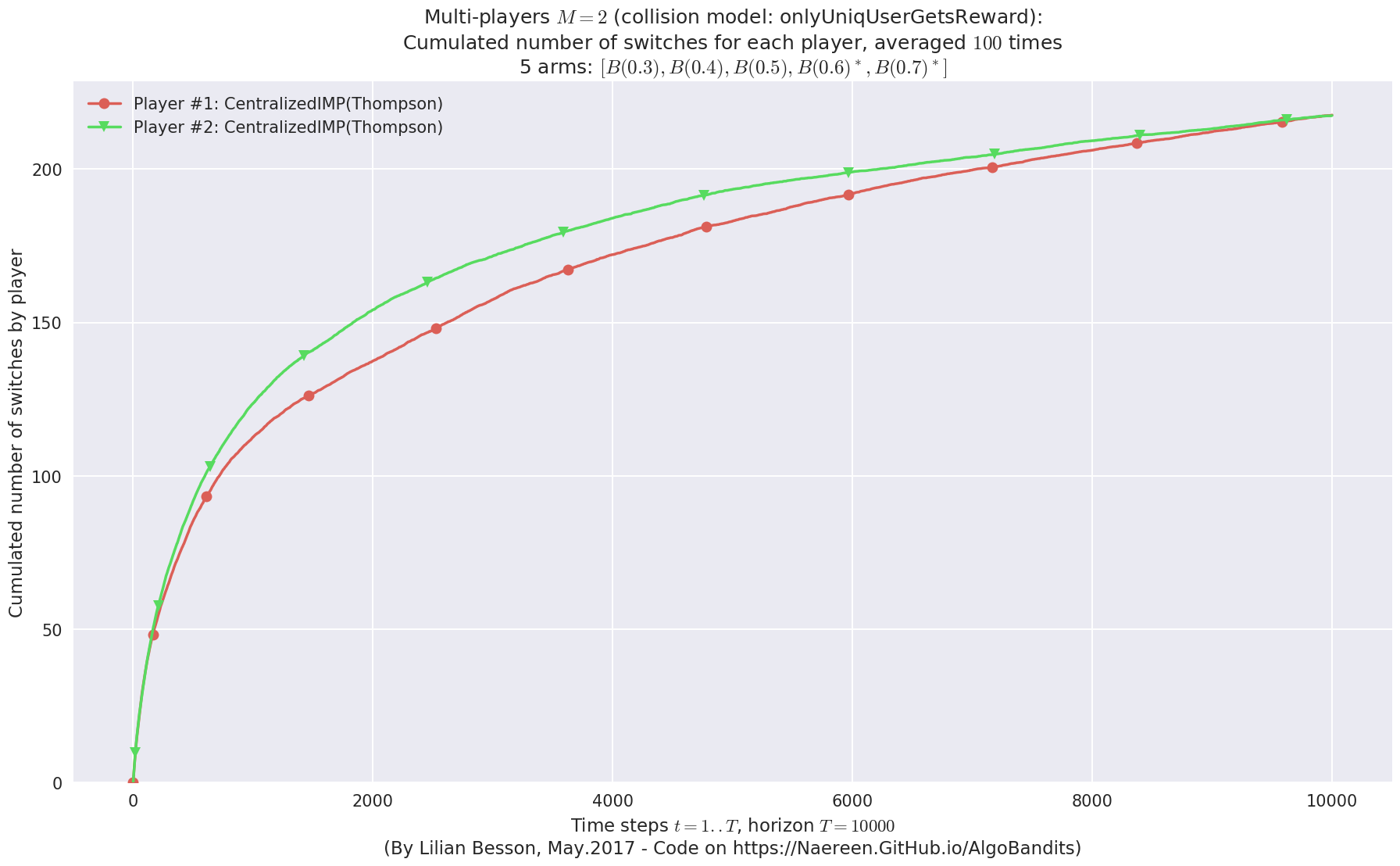

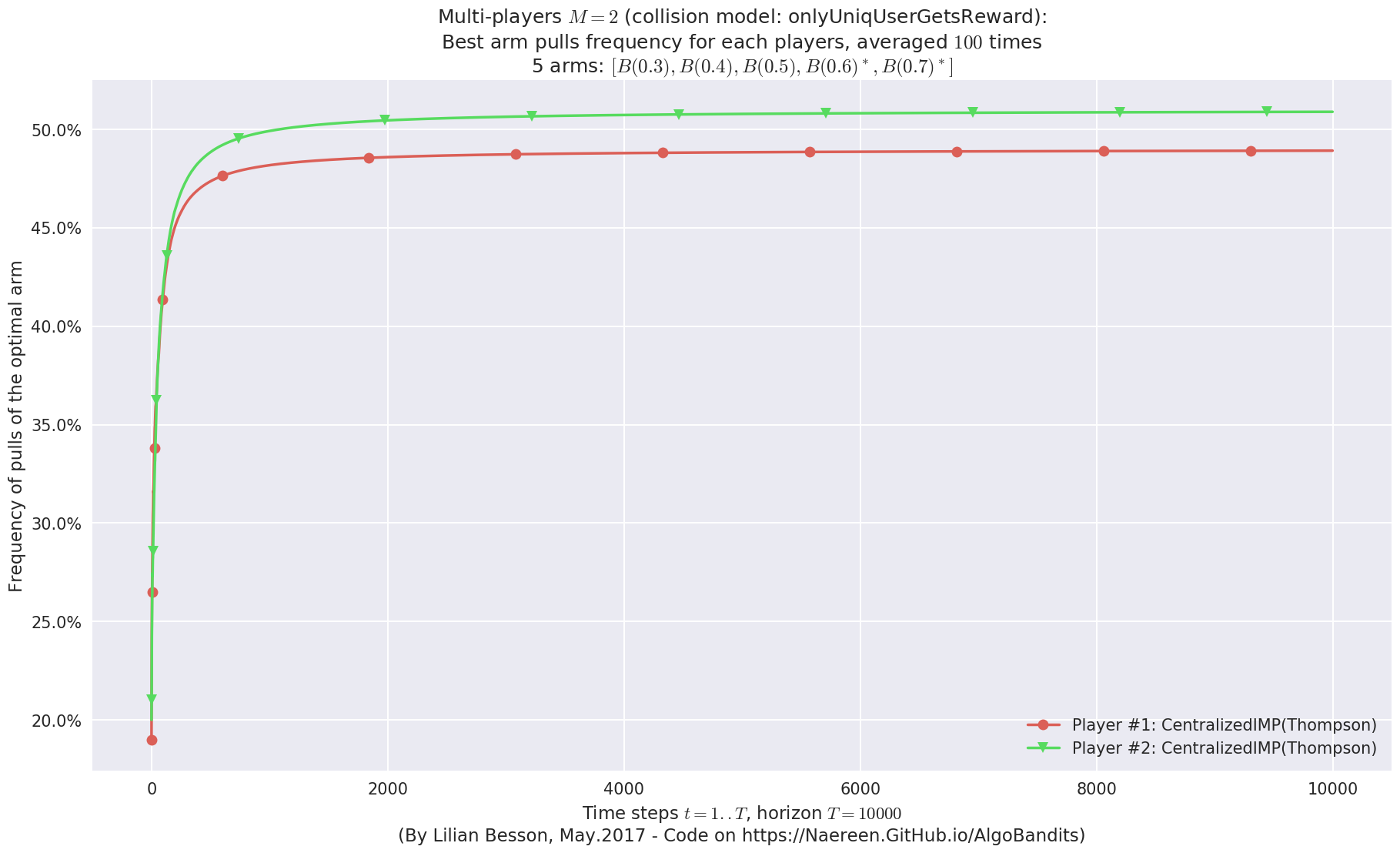

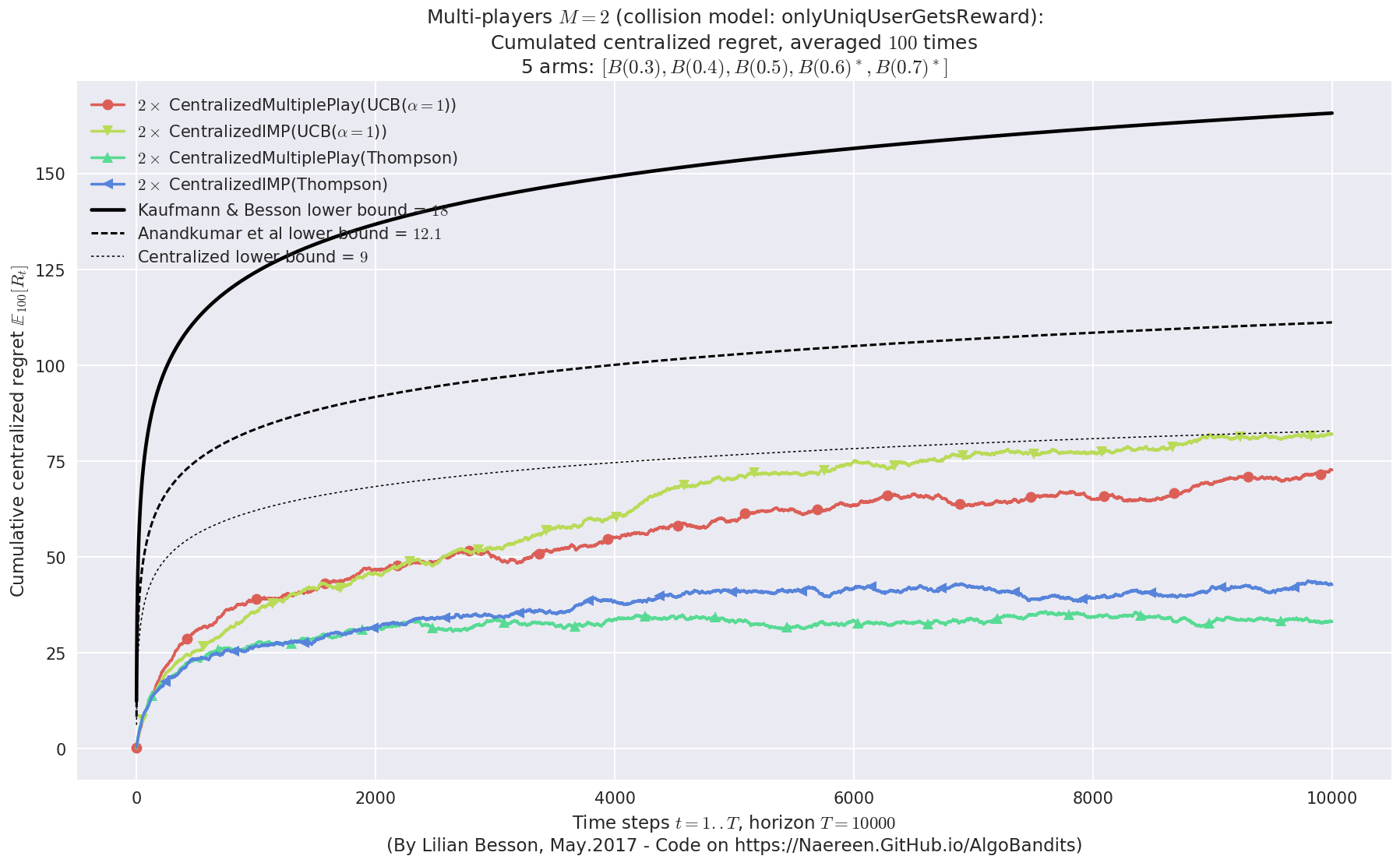

1.4.1 First problem

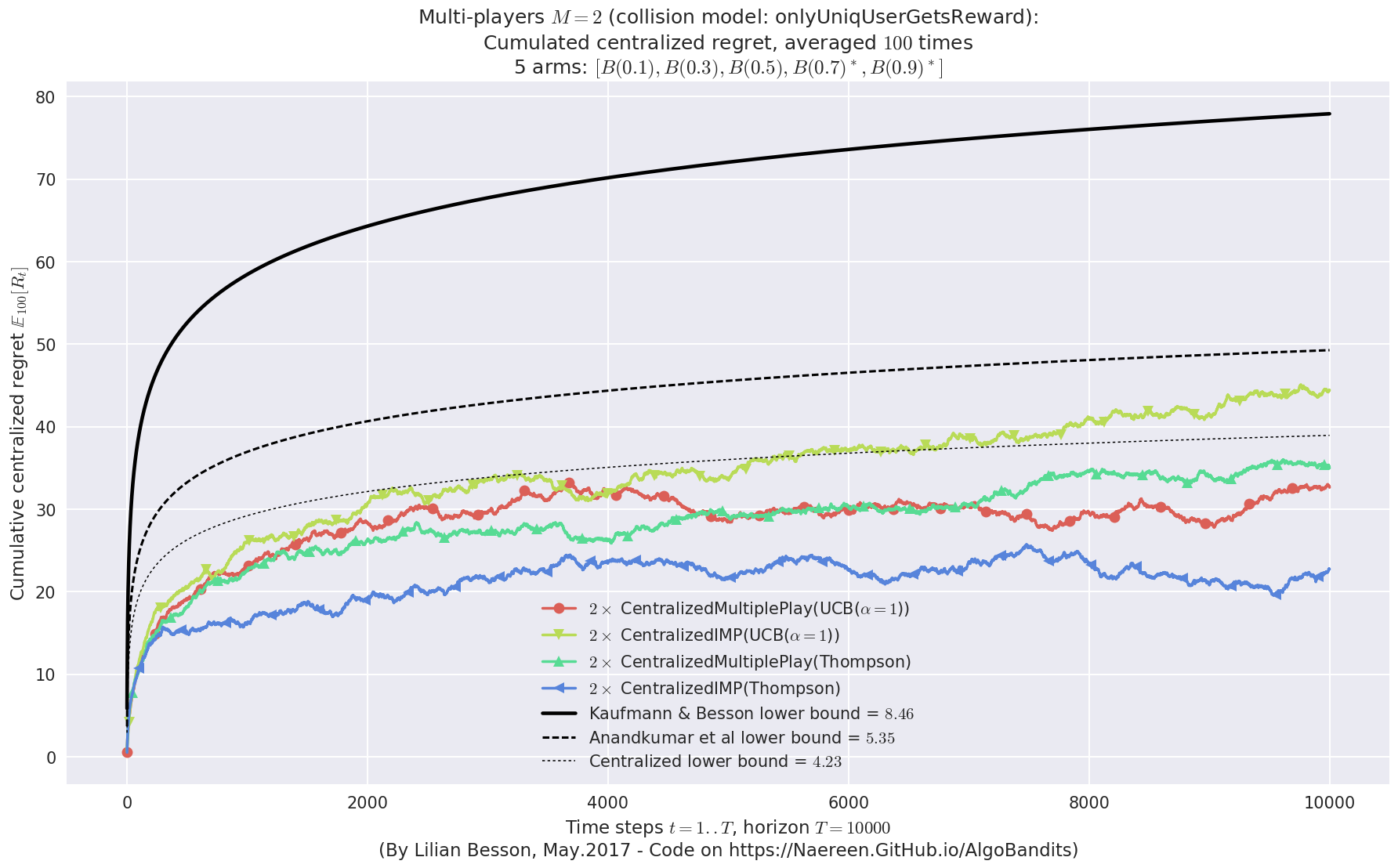

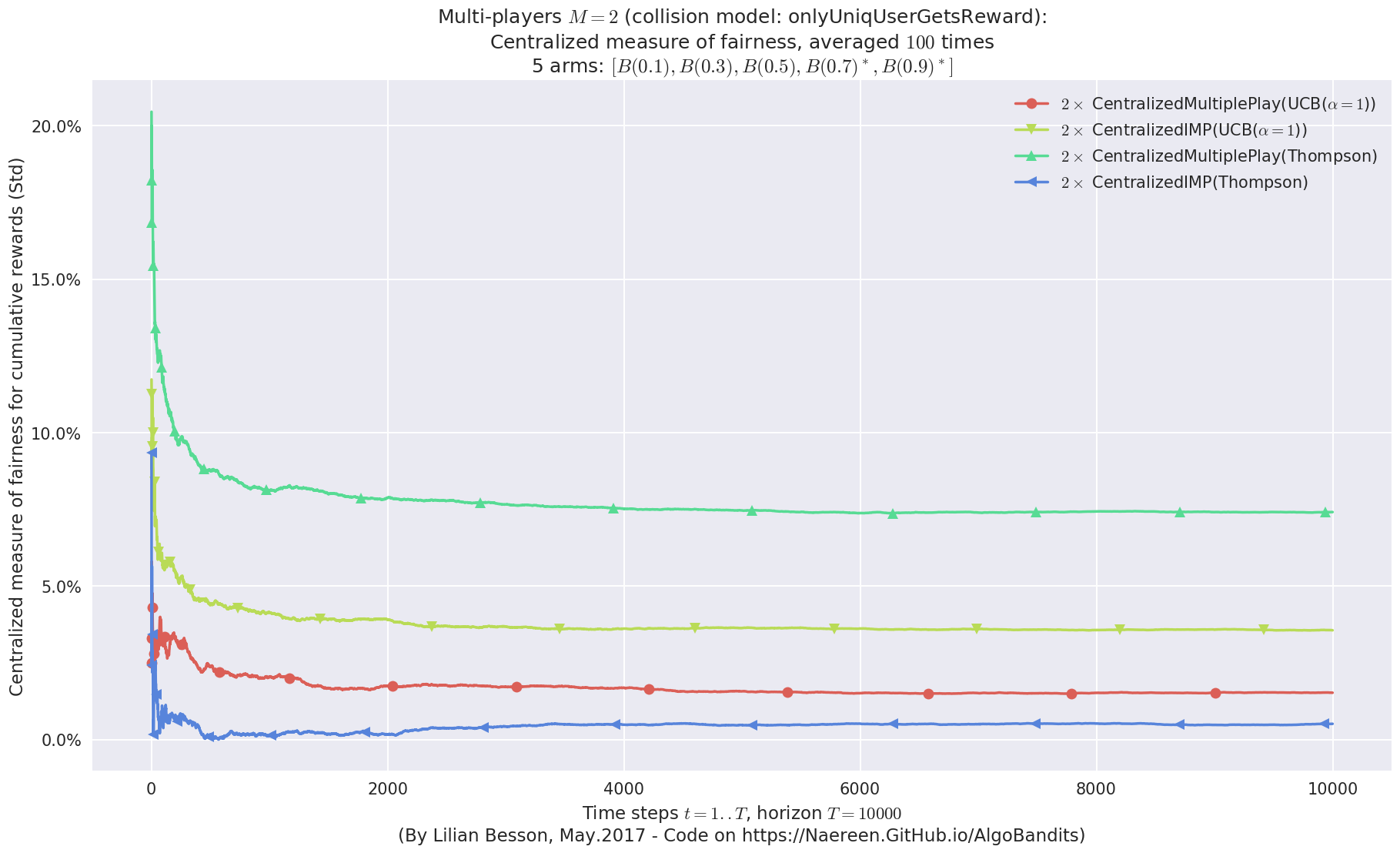

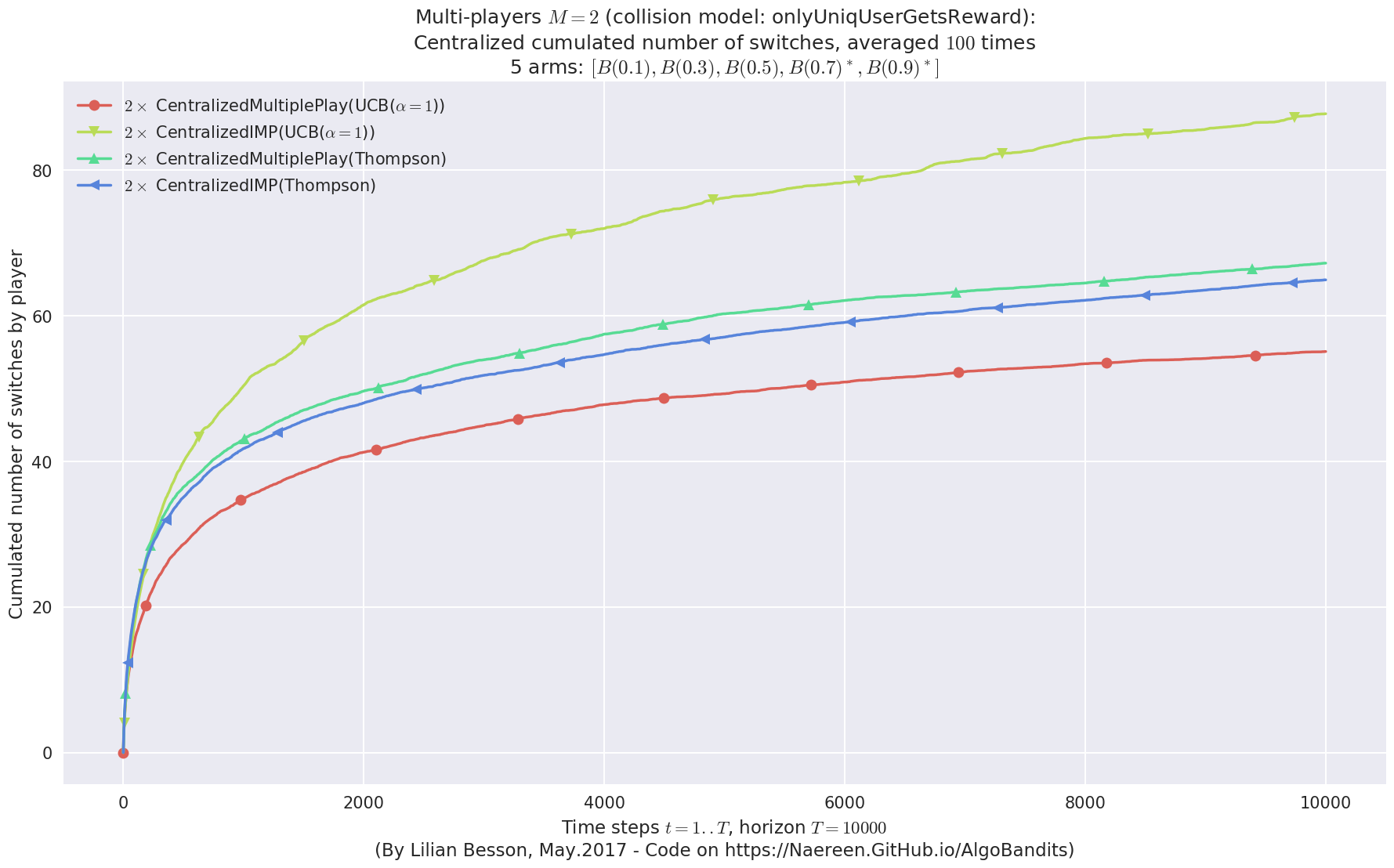

1.4.2 Second problem

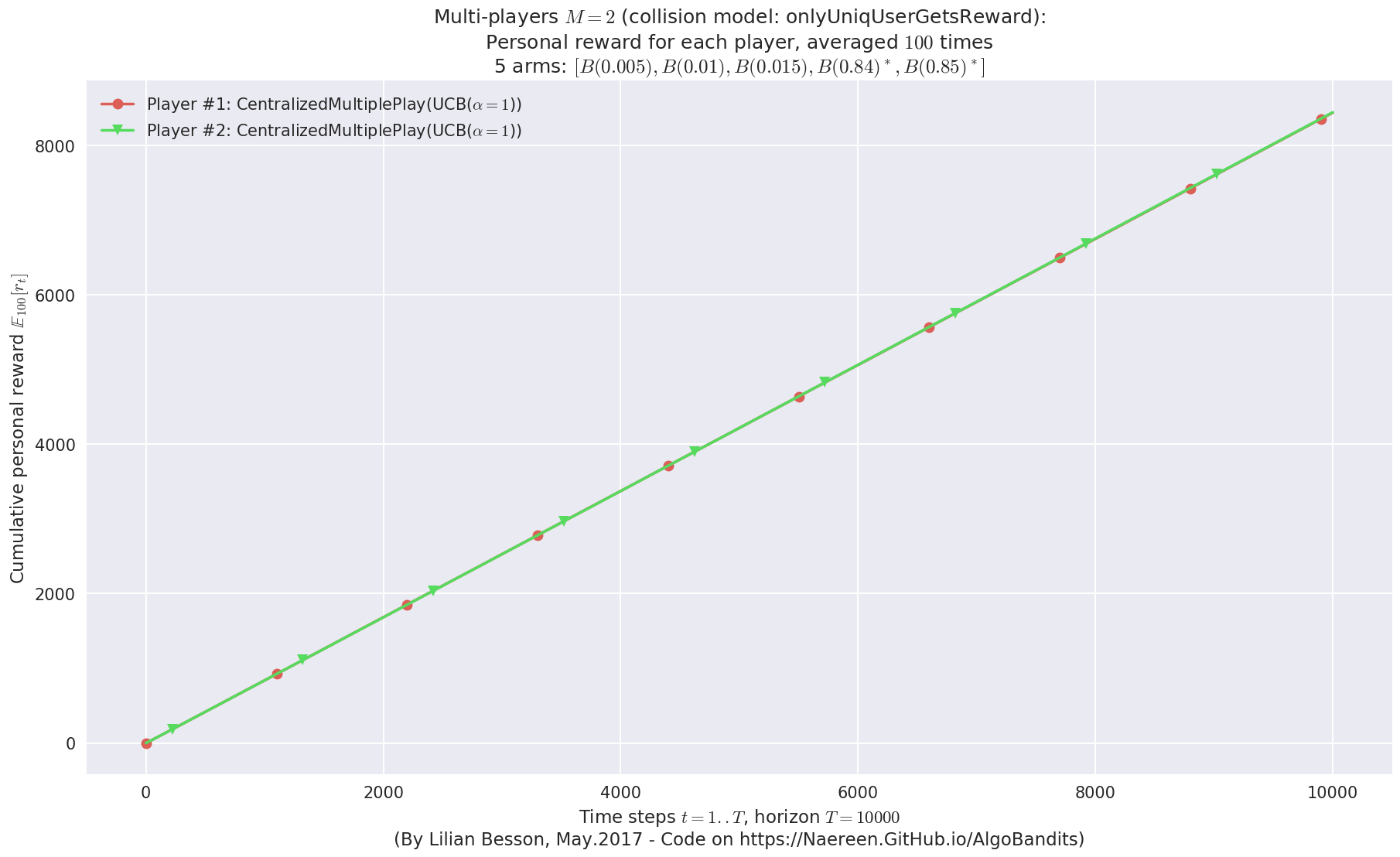

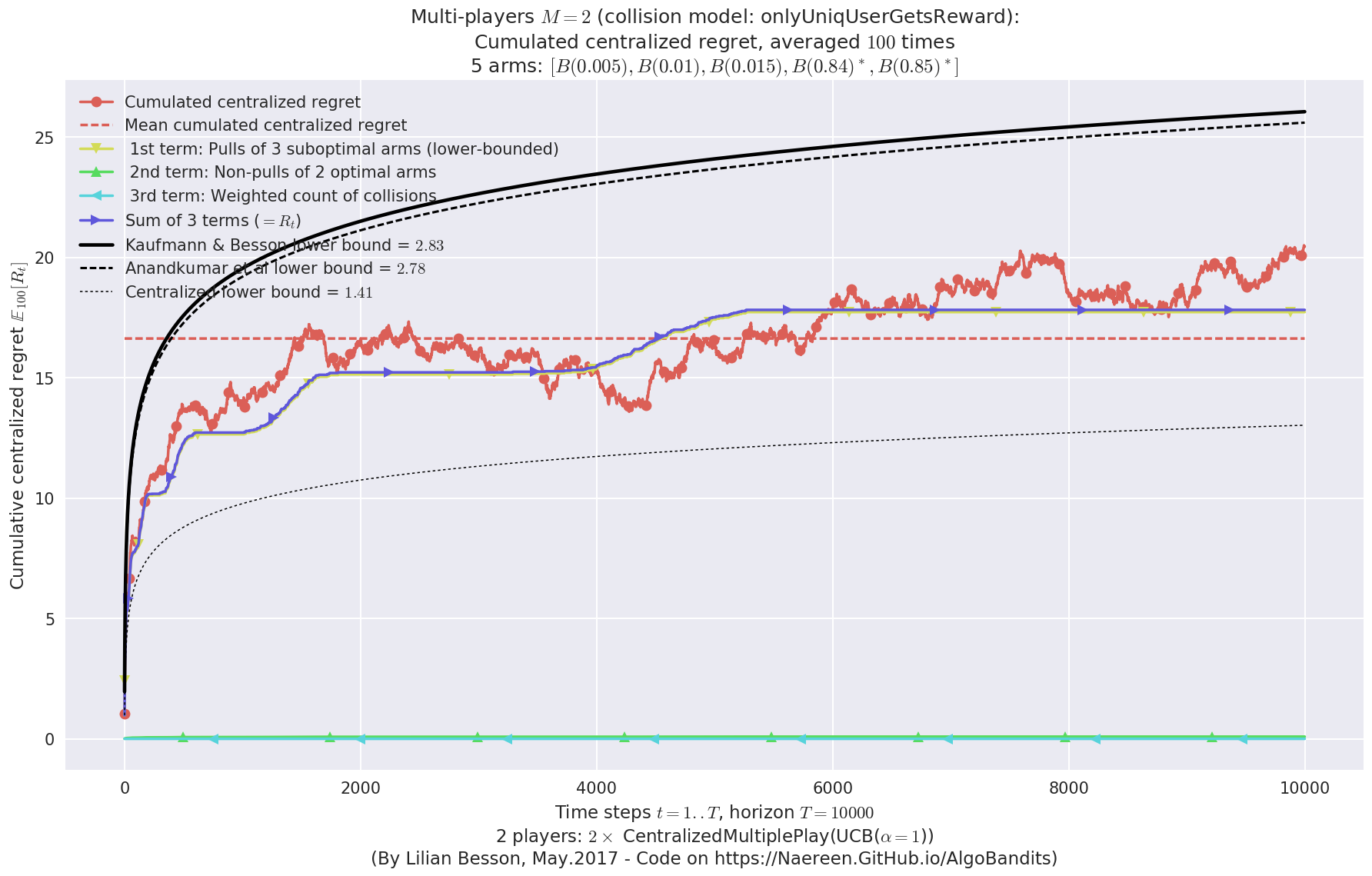

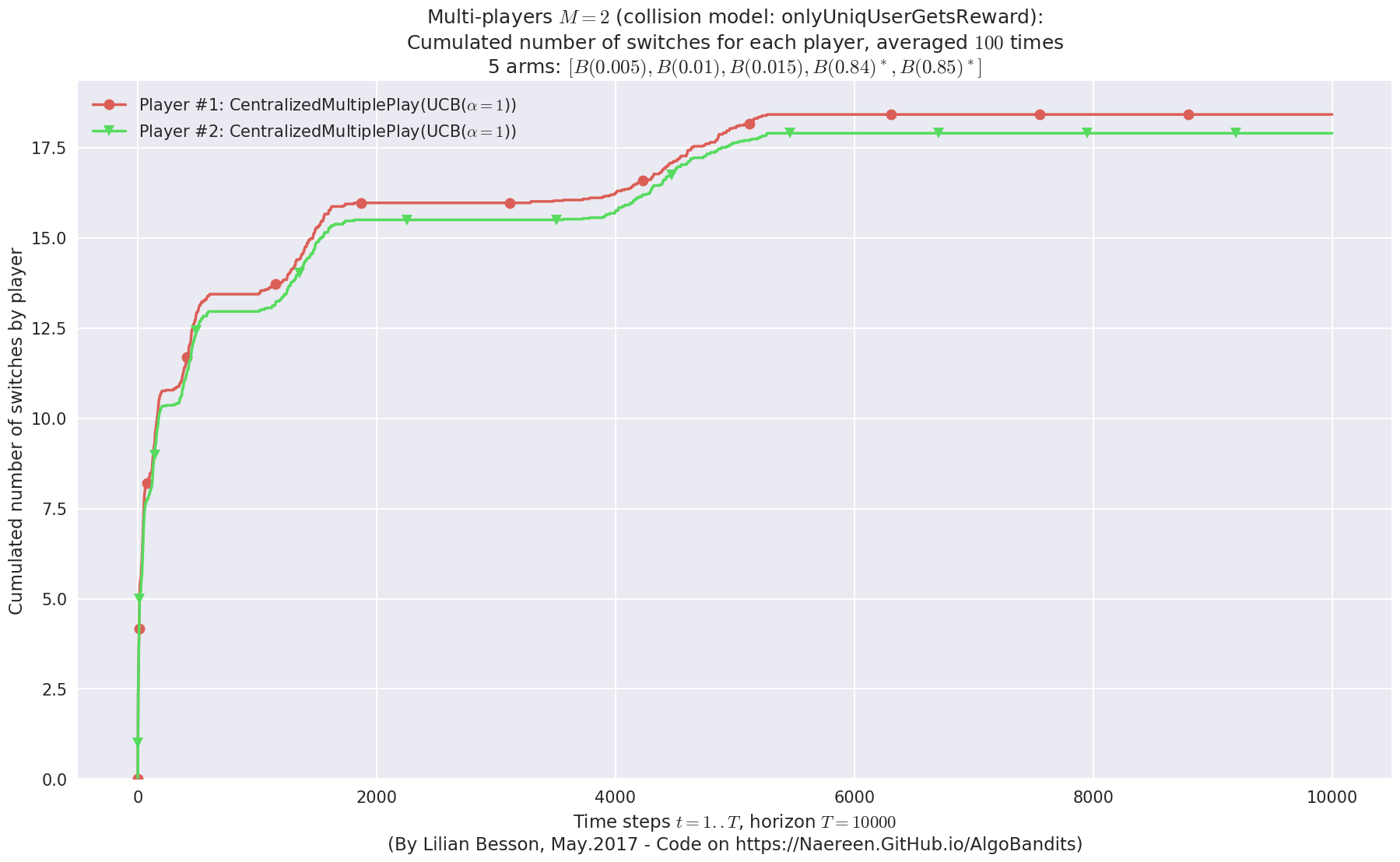

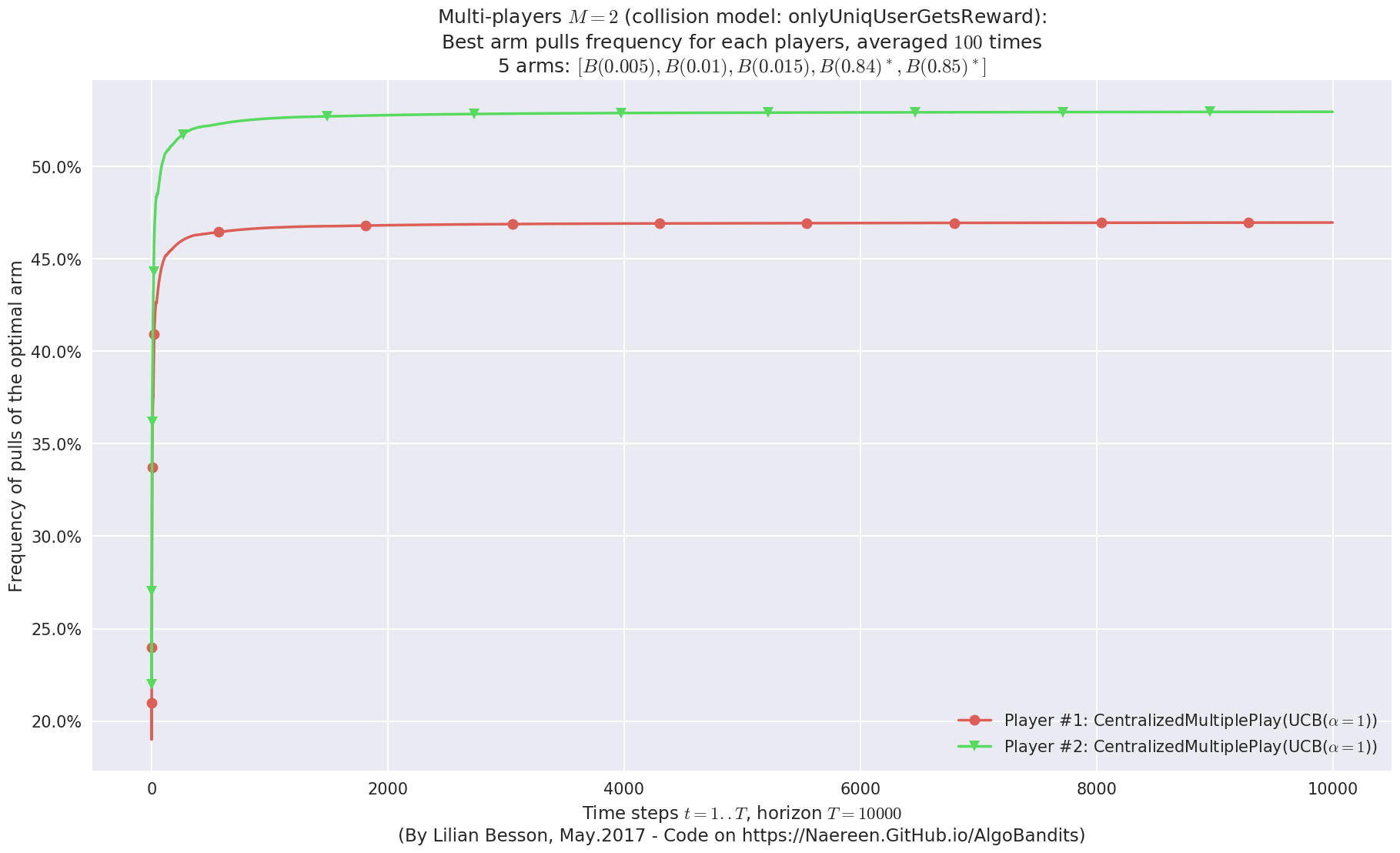

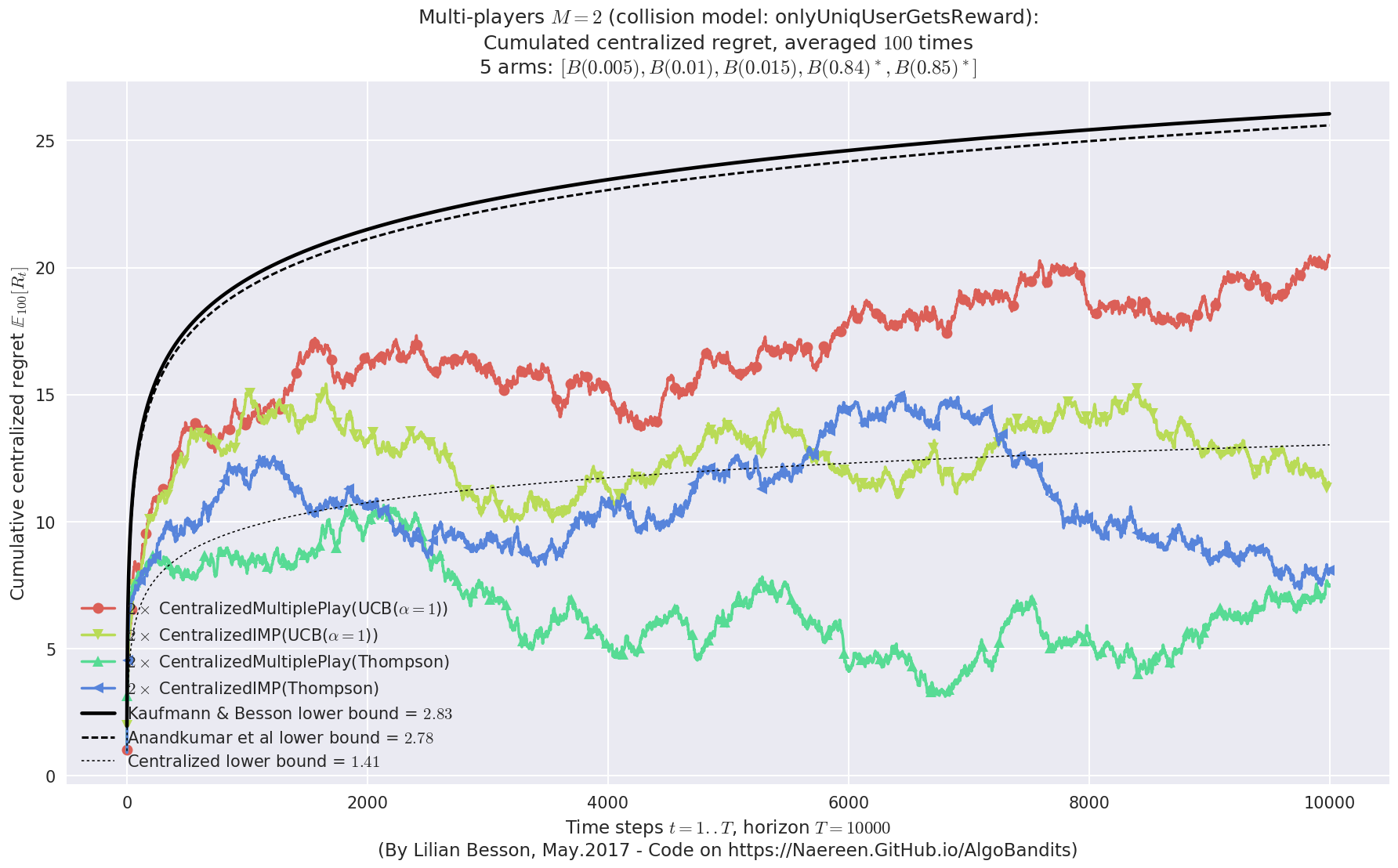

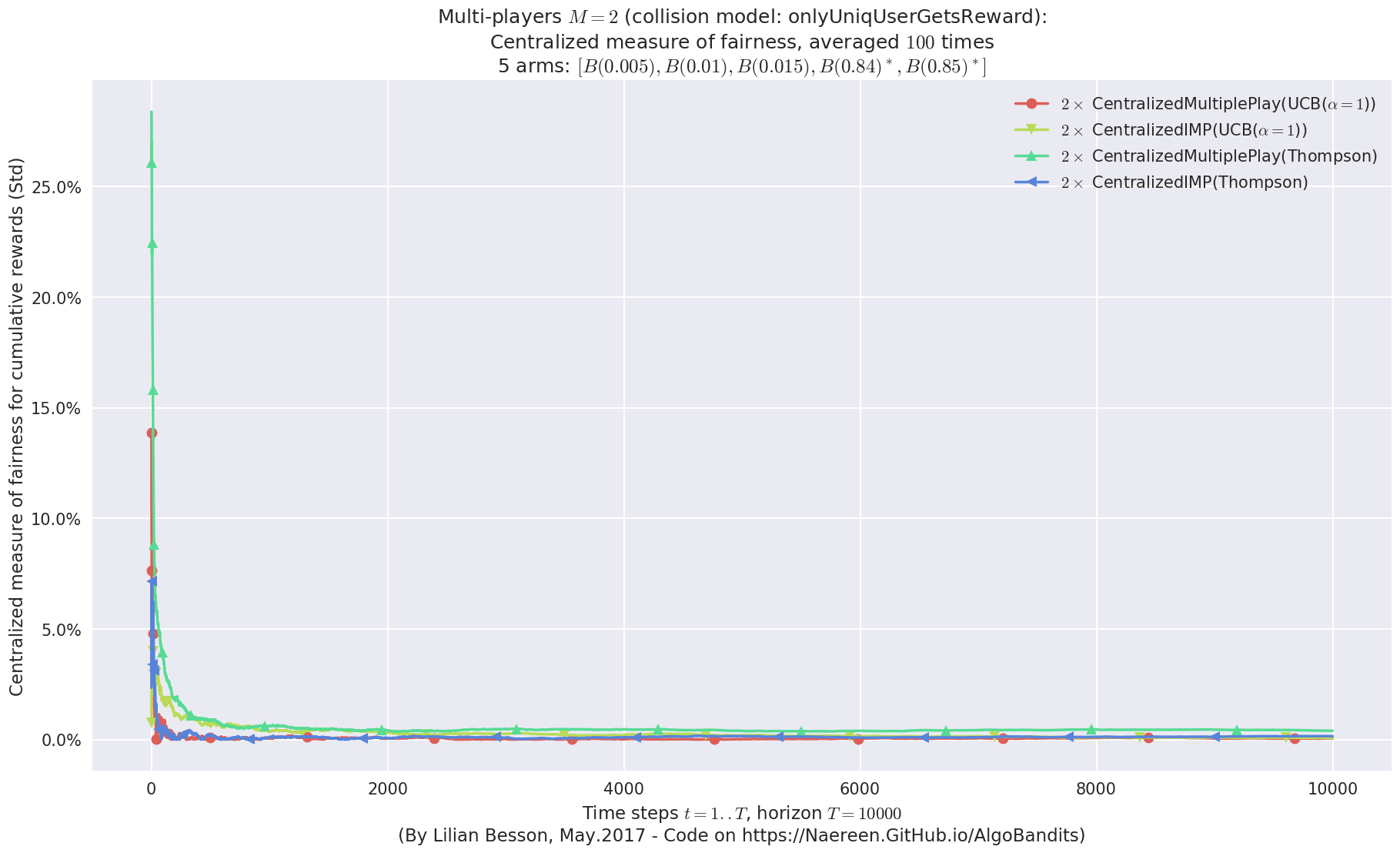

1.4.3 Third problem

1.4.4 Comparing their performances

An example of a small Multi-Player simulation, with Centralized Algorithms

First, make sure the main folder of the project is on the Python path (here, the parent folder `..`), and import EvaluatorMultiPlayers from the Environment package:

In [1]:

from sys import path

path.insert(0, '..')

In [3]:

# Local imports

from Environment import EvaluatorMultiPlayers, tqdm

We also need arms, for instance Bernoulli-distributed arms:

In [6]:

# Import arms

from Arms import Bernoulli

And finally we need some single-player and multi-player Reinforcement Learning algorithms:

In [8]:

# Import algorithms

from Policies import *

from PoliciesMultiPlayers import *

In [9]:

# Print the output of `?` inline instead of in the Jupyter pager. Thanks to https://nbviewer.jupyter.org/gist/minrk/7715212
from __future__ import print_function
from IPython.core import page

def myprint(s):
    try:
        print(s['text/plain'])
    except (KeyError, TypeError):
        print(s)

page.page = myprint

For instance, this imported the UCB algorithm:

In [10]:

UCBalpha?

Init signature: UCBalpha(nbArms, alpha=1, lower=0.0, amplitude=1.0)

Docstring:

The UCB1 (UCB-alpha) index policy, modified to take a random permutation order for the initial exploration of each arm (reduce collisions in the multi-players setting).

Reference: [Auer et al. 02].

Init docstring:

New generic index policy.

- nbArms: the number of arms,

- lower, amplitude: lower value and known amplitude of the rewards.

File: ~/SMPyBandits.git/Policies/UCBalpha.py

Type: type

As well as the CentralizedMultiplePlay multi-player policy:

In [11]:

CentralizedMultiplePlay?

Init signature: CentralizedMultiplePlay(nbPlayers, playerAlgo, nbArms, uniformAllocation=False, *args, **kwargs)

Docstring:

CentralizedMultiplePlay: a multi-player policy where ONE policy is used by a centralized agent; asking the policy to select nbPlayers arms at each step.

Init docstring:

- nbPlayers: number of players to create (in self._players).

- playerAlgo: class to use for every players.

- nbArms: number of arms, given as first argument to playerAlgo.

- uniformAllocation: Should the affectations of users always be uniform, or fixed when UCB indexes have converged? First choice is more fair, but linear nb of switches, second choice is not fair, but cst nb of switches.

- `*args`, `**kwargs`: arguments, named arguments, given to playerAlgo.

Examples:

>>> s = CentralizedMultiplePlay(10, TakeFixedArm, 14)

>>> s = CentralizedMultiplePlay(NB_PLAYERS, Softmax, nbArms, temperature=TEMPERATURE)

- To get a list of usable players, use s.children.

- Warning: s._players is for internal use ONLY!

File: ~/SMPyBandits.git/PoliciesMultiPlayers/CentralizedMultiplePlay.py

Type: type

We also need a collision model. The usual ones are defined in the Environment.CollisionModels module, and the only one we need is the classical one, where two or more colliding users do not receive any reward.
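To make the rule concrete, here is a minimal sketch of this collision model, assuming one Bernoulli draw per non-colliding player. The function `collision_model_sketch` and its arguments are hypothetical: this is not the library's `onlyUniqUserGetsReward`, which has a different signature and updates its arrays in place.

```python
import numpy as np

def collision_model_sketch(choices, means, rng):
    """Hypothetical rule: player j gets a Bernoulli(means[arm]) reward only if it is
    the only player on its arm; colliding players all get 0.
    choices[j] is the arm chosen by player j."""
    choices = np.asarray(choices)
    counts = np.bincount(choices, minlength=len(means))  # number of players on each arm
    rewards = np.zeros(len(choices))
    collisions = np.zeros(len(means), dtype=int)
    for j, arm in enumerate(choices):
        if counts[arm] == 1:
            rewards[j] = float(rng.random() < means[arm])  # alone: sample the arm
        else:
            collisions[arm] = counts[arm]  # collision: nobody on this arm is rewarded
    return rewards, collisions

rng = np.random.default_rng(42)
means = [0.3, 0.4, 0.5, 0.6, 0.7]
print(collision_model_sketch([4, 4], means, rng))  # both players on arm 4: no reward
print(collision_model_sketch([3, 4], means, rng))  # no collision: each player samples its arm
```

With \(M = 2\) players, a centralized policy that always picks two distinct arms never triggers the collision branch, which is why this model only matters for decentralized policies.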

In [12]:

# Collision Models

from Environment.CollisionModels import onlyUniqUserGetsReward

onlyUniqUserGetsReward?

Signature: onlyUniqUserGetsReward(t, arms, players, choices, rewards, pulls, collisions)

Docstring:

Simple collision model where only the players alone on one arm samples it and receives the reward.

- This is the default collision model, cf. https://arxiv.org/abs/0910.2065v3 collision model 1.

- The numpy array 'choices' is increased according to the number of users who collided (it is NOT binary).

File: ~/SMPyBandits.git/Environment/CollisionModels.py

Type: function

Creating the problem

Parameters for the simulation

- \(T = 10000\) is the time horizon,

- \(N = 100\) is the number of repetitions (it should be larger to get more consistent results),

- \(M = 2\) is the number of players,

- N_JOBS = 4 is the number of cores used to parallelize the code.

In [14]:

HORIZON = 10000

REPETITIONS = 100

NB_PLAYERS = 2

N_JOBS = 4

collisionModel = onlyUniqUserGetsReward

Three MAB problems with Bernoulli arms

We consider in this example \(3\) problems with Bernoulli arms of different means.

The first problem is easy, with two good arms and three bad arms, and a constant gap \(\Delta = \min_{\mu_i \neq \mu_j} |\mu_i - \mu_j| = 0.1\) between consecutive means.

The second problem is even easier, with a larger gap (\(\Delta = 0.2\)).

The third problem is harder, with a smaller gap (\(\Delta = 0.005\) among the bad arms, and only \(0.01\) between the two optimal arms), and a very large difference between the two optimal arms and the suboptimal arms.
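These gaps can be checked directly from the means used in the `ENVIRONMENTS` list. The sketch below computes the smallest gap \(\Delta\), and also the gap between the \(M\)-th and \((M+1)\)-th best means for \(M = 2\) players; `min_gap` and `top_m_gap` are hypothetical helpers written for illustration, not part of SMPyBandits.

```python
import numpy as np

# Means of the three Bernoulli problems used in this notebook
problems = {
    "first": [0.3, 0.4, 0.5, 0.6, 0.7],
    "second": [0.1, 0.3, 0.5, 0.7, 0.9],
    "third": [0.005, 0.01, 0.015, 0.84, 0.85],
}

def min_gap(means):
    """Smallest positive difference between two distinct means."""
    mu = np.unique(means)  # sorted, duplicates removed
    return float(np.min(np.diff(mu)))

def top_m_gap(means, m=2):
    """Gap between the m-th best mean and the (m+1)-th best mean,
    i.e. between the worst 'optimal' arm and the best suboptimal arm."""
    mu = np.sort(means)[::-1]
    return float(mu[m - 1] - mu[m])

for name, means in problems.items():
    print(name, round(min_gap(means), 3), round(top_m_gap(means), 3))
```

For the third problem, the smallest gap is tiny (0.005) while the gap separating the two optimal arms from the rest is large (0.825), which is the contrast described above.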

Note: right now, the multi-environment evaluator does not work well for multi-player (MP) policies if the scenarios have different numbers of arms, so I use the same number of arms in all the problems.

In [15]:

ENVIRONMENTS = [ # 1) Bernoulli arms

{ # Scenario 1 from [Komiyama, Honda, Nakagawa, 2016, arXiv 1506.00779]

"arm_type": Bernoulli,

"params": [0.3, 0.4, 0.5, 0.6, 0.7]

},

{ # Classical scenario

"arm_type": Bernoulli,

"params": [0.1, 0.3, 0.5, 0.7, 0.9]

},

{ # Harder scenario

"arm_type": Bernoulli,

"params": [0.005, 0.01, 0.015, 0.84, 0.85]

}

]

Some RL algorithms

We will compare Thompson Sampling against \(\mathrm{UCB}_1\), using two different centralized policies:

- CentralizedMultiplePlay is the naive use of a bandit algorithm for multi-player decision making: at every step, the internal decision-making process is used to select not \(1\) arm but \(M\) arms to sample. For UCB-like algorithms, the decision is based on the \(M\) largest UCB-like indexes (an \(\arg\max\) taken \(M\) times), usually of the form \(I_j(t) = X_j(t) + B_j(t)\), where \(X_j(t) = \hat{\mu}_j(t) = \sum_{\tau \leq t} r_j(\tau) / N_j(t)\) is the empirical mean of arm \(j\) (with \(N_j(t)\) the number of times arm \(j\) was sampled up to time \(t\)), and \(B_j(t)\) is a bias term of the form \(B_j(t) = \sqrt{\frac{\alpha \log(t)}{2 N_j(t)}}\).

- CentralizedIMP is very similar, but instead of following the internal decision making for all the decisions, the system uses only the empirical means \(X_j(t)\) to select \(M-1\) arms to sample, and the full index (i.e., the internal decision making, which can also be sampling from a Bayesian posterior for instance) is used for only one decision. It is a heuristic proposed in [Komiyama, Honda, Nakagawa, 2016].
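The two selection rules can be sketched with the UCB index \(I_j(t) = X_j(t) + \sqrt{\alpha \log(t) / (2 N_j(t))}\) defined above. This is a minimal illustration, not the SMPyBandits code: the function names are hypothetical, never-sampled arms are assumed to get an infinite index, and for IMP the UCB index stands in for the "internal decision making" (which could be a posterior sample instead).

```python
import numpy as np

def ucb_indexes(sums, pulls, t, alpha=1.0):
    """UCB-alpha indexes I_j(t) = X_j(t) + sqrt(alpha * log(t) / (2 * N_j(t))).
    Arms that were never sampled get an infinite index, so they are tried first."""
    sums = np.asarray(sums, dtype=float)
    pulls = np.asarray(pulls, dtype=float)
    indexes = np.full(len(pulls), np.inf)
    seen = pulls > 0
    indexes[seen] = sums[seen] / pulls[seen] + np.sqrt(alpha * np.log(t) / (2 * pulls[seen]))
    return indexes

def choose_multiple_play(sums, pulls, t, m, alpha=1.0):
    """MultiplePlay-style rule: the m arms with the largest UCB indexes."""
    return np.argsort(-ucb_indexes(sums, pulls, t, alpha), kind="stable")[:m]

def choose_imp(sums, pulls, t, m, alpha=1.0):
    """IMP-style rule: the m-1 arms with the best empirical means X_j(t),
    plus the arm with the largest UCB index among the remaining ones."""
    sums = np.asarray(sums, dtype=float)
    pulls = np.asarray(pulls, dtype=float)
    means = np.where(pulls > 0, sums / np.maximum(pulls, 1), np.inf)
    exploit = np.argsort(-means, kind="stable")[: m - 1]
    indexes = ucb_indexes(sums, pulls, t, alpha)
    indexes[exploit] = -np.inf  # never pick the same arm twice
    return np.append(exploit, np.argmax(indexes))

# Two barely explored arms (0 and 1) and three well-sampled ones, M = 2 players
sums, pulls, t = [0, 0, 10, 12, 14], [1, 1, 20, 20, 20], 63
print(choose_multiple_play(sums, pulls, t, m=2))  # both under-explored arms
print(choose_imp(sums, pulls, t, m=2))  # best empirical arm 4, plus one exploratory arm
```

In this situation MultiplePlay spends both samples on exploration, while IMP keeps exploiting the empirically best arm and explores with only one sample, which is the intuition behind the heuristic.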

In [16]:

nbArms = len(ENVIRONMENTS[0]['params'])

assert all(len(env['params']) == nbArms for env in ENVIRONMENTS), "Error: not yet support if different environments have different nb of arms"

nbArms

SUCCESSIVE_PLAYERS = [

CentralizedMultiplePlay(NB_PLAYERS, UCBalpha, nbArms, alpha=1).children,

CentralizedIMP(NB_PLAYERS, UCBalpha, nbArms, alpha=1).children,

CentralizedMultiplePlay(NB_PLAYERS, Thompson, nbArms).children,

CentralizedIMP(NB_PLAYERS, Thompson, nbArms).children

]

SUCCESSIVE_PLAYERS

Out[16]:

5

- One new child, of index 0, and class #1<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

- One new child, of index 1, and class #2<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

- One new child, of index 0, and class #1<CentralizedIMP(UCB($\alpha=1$))> ...

- One new child, of index 1, and class #2<CentralizedIMP(UCB($\alpha=1$))> ...

- One new child, of index 0, and class #1<CentralizedMultiplePlay(Thompson)> ...

- One new child, of index 1, and class #2<CentralizedMultiplePlay(Thompson)> ...

- One new child, of index 0, and class #1<CentralizedIMP(Thompson)> ...

- One new child, of index 1, and class #2<CentralizedIMP(Thompson)> ...

Out[16]:

[[CentralizedMultiplePlay(UCB($\alpha=1$)),

CentralizedMultiplePlay(UCB($\alpha=1$))],

[CentralizedIMP(UCB($\alpha=1$)), CentralizedIMP(UCB($\alpha=1$))],

[CentralizedMultiplePlay(Thompson), CentralizedMultiplePlay(Thompson)],

[CentralizedIMP(Thompson), CentralizedIMP(Thompson)]]

In this case, the mother object does all the work, as we use centralized learning:
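The mother/children pattern can be sketched as follows: each child keeps no statistics and asks its mother, which makes one joint decision of \(M\) distinct arms per step. Everything here is hypothetical (`CentralizedMotherSketch`, `ChildSketch`, and the assumption that child 0 is queried first at each step); the `choice()`/`getReward()` method names are assumed to mirror the style of SMPyBandits policies, and a greedy rule stands in for UCB or Thompson Sampling to keep the sketch short.

```python
import numpy as np

class ChildSketch:
    """Hypothetical per-player proxy: it keeps no statistics and defers to its mother."""
    def __init__(self, mother, player_id):
        self.mother = mother
        self.player_id = player_id

    def choice(self):
        return self.mother.choice_for(self.player_id)

    def getReward(self, arm, reward):
        self.mother.update(arm, reward)


class CentralizedMotherSketch:
    """Hypothetical centralized 'mother': one learner picks nb_players distinct arms
    per step and child i plays the i-th one. A greedy rule (unpulled arms first,
    then best empirical means) stands in for UCB or Thompson Sampling."""
    def __init__(self, nb_players, nb_arms):
        self.nb_players = nb_players
        self.sums = np.zeros(nb_arms)
        self.pulls = np.zeros(nb_arms)
        self.joint_choice = None
        self.children = [ChildSketch(self, i) for i in range(nb_players)]

    def choice_for(self, player_id):
        # Assumption: child 0 is asked first at every step, which triggers a new joint decision
        if player_id == 0 or self.joint_choice is None:
            estimates = np.where(self.pulls > 0, self.sums / np.maximum(self.pulls, 1), np.inf)
            self.joint_choice = np.argsort(-estimates, kind="stable")[: self.nb_players]
        return int(self.joint_choice[player_id])

    def update(self, arm, reward):
        # All statistics are stored once, in the mother
        self.sums[arm] += reward
        self.pulls[arm] += 1


mother = CentralizedMotherSketch(nb_players=2, nb_arms=5)
arms = [child.choice() for child in mother.children]
for child, arm in zip(mother.children, arms):
    child.getReward(arm, 1.0)
print(arms, mother.pulls)
```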

In [17]:

OnePlayer = SUCCESSIVE_PLAYERS[0][0]

OnePlayer.nbArms

OneMother = OnePlayer.mother

OneMother

OneMother.nbArms

Out[17]:

5

Out[17]:

<PoliciesMultiPlayers.CentralizedMultiplePlay.CentralizedMultiplePlay at 0x7f5580f03f60>

Out[17]:

5

Complete configuration for the problem:

In [18]:

configuration = {

# --- Duration of the experiment

"horizon": HORIZON,

# --- Number of repetitions of the experiment (to compute an average)

"repetitions": REPETITIONS,

# --- Parameters for the use of joblib.Parallel

"n_jobs": N_JOBS, # = nb of CPU cores

"verbosity": 6, # Max joblib verbosity

# --- Collision model

"collisionModel": onlyUniqUserGetsReward,

# --- Arms

"environment": ENVIRONMENTS,

# --- Algorithms

"successive_players": SUCCESSIVE_PLAYERS,

}

Creating the EvaluatorMultiPlayers objects

We need to create several objects, as the simulation first runs each policy against each environment, and then aggregates the results to compare them.
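The results are collected in a 2-D table indexed by environment and policy. When building such a table in Python, note that `[[None] * n] * m` repeats a reference to a single inner list, so writing into one row writes into all of them; a list comprehension creates independent rows. The names below are illustrative only.

```python
n_policies, n_envs = 4, 3

aliased = [[None] * n_policies] * n_envs  # the same inner list, repeated n_envs times
aliased[0][1] = "policy #1 on env #0"
print(aliased[2][1])  # row 2 sees the write to row 0: every row is one object

independent = [[None] * n_policies for _ in range(n_envs)]  # one new list per row
independent[0][1] = "policy #1 on env #0"
print(independent[2][1])  # row 2 is untouched
```

In this notebook the aliasing happens to be harmless, because every environment of a given policy stores the same evaluator object, but independent rows avoid surprises if different objects are ever stored per environment.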

In [20]:

%%time
N_players = len(configuration["successive_players"])

# List to keep all the EvaluatorMultiPlayers objects
evs = [None] * N_players
# Table evaluators[envId][playersId], with one independent row per environment
evaluators = [[None] * N_players for _ in range(len(configuration["environment"]))]

for playersId, players in tqdm(enumerate(configuration["successive_players"]), desc="Creating"):
    print("\n\nConsidering the list of players :\n", players)
    conf = configuration.copy()
    conf['players'] = players
    evs[playersId] = EvaluatorMultiPlayers(conf)

Considering the list of players :

[CentralizedMultiplePlay(UCB($\alpha=1$)), CentralizedMultiplePlay(UCB($\alpha=1$))]

Number of players in the multi-players game: 2

Time horizon: 10000

Number of repetitions: 100

Sampling rate for saving, delta_t_save: 1

Sampling rate for plotting, delta_t_plot: 1

Number of jobs for parallelization: 4

Using collision model onlyUniqUserGetsReward (function <function onlyUniqUserGetsReward at 0x7f55868d3510>).

More details:

Simple collision model where only the players alone on one arm samples it and receives the reward.

- This is the default collision model, cf. https://arxiv.org/abs/0910.2065v3 collision model 1.

- The numpy array 'choices' is increased according to the number of users who collided (it is NOT binary).

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.3, 0.4, 0.5, 0.6, 0.7], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.3, 0.4, 0.5, 0.6, 0.7]

- with 'arms' = [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)]

- with 'means' = [ 0.3 0.4 0.5 0.6 0.7]

- with 'nbArms' = 5

- with 'maxArm' = 0.7

- with 'minArm' = 0.3

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- with 'arms' represented as: $[B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.1, 0.3, 0.5, 0.7, 0.9], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.1, 0.3, 0.5, 0.7, 0.9]

- with 'arms' = [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)]

- with 'means' = [ 0.1 0.3 0.5 0.7 0.9]

- with 'nbArms' = 5

- with 'maxArm' = 0.9

- with 'minArm' = 0.1

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- with 'arms' represented as: $[B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.005, 0.01, 0.015, 0.84, 0.85], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.005, 0.01, 0.015, 0.84, 0.85]

- with 'arms' = [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)]

- with 'means' = [ 0.005 0.01 0.015 0.84 0.85 ]

- with 'nbArms' = 5

- with 'maxArm' = 0.85

- with 'minArm' = 0.005

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- with 'arms' represented as: $[B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)^*]$

Number of environments to try: 3

Considering the list of players :

[CentralizedIMP(UCB($\alpha=1$)), CentralizedIMP(UCB($\alpha=1$))]

Number of players in the multi-players game: 2

Time horizon: 10000

Number of repetitions: 100

Sampling rate for saving, delta_t_save: 1

Sampling rate for plotting, delta_t_plot: 1

Number of jobs for parallelization: 4

Using collision model onlyUniqUserGetsReward (function <function onlyUniqUserGetsReward at 0x7f55868d3510>).

More details:

Simple collision model where only the players alone on one arm samples it and receives the reward.

- This is the default collision model, cf. https://arxiv.org/abs/0910.2065v3 collision model 1.

- The numpy array 'choices' is increased according to the number of users who collided (it is NOT binary).

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.3, 0.4, 0.5, 0.6, 0.7], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.3, 0.4, 0.5, 0.6, 0.7]

- with 'arms' = [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)]

- with 'means' = [ 0.3 0.4 0.5 0.6 0.7]

- with 'nbArms' = 5

- with 'maxArm' = 0.7

- with 'minArm' = 0.3

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- with 'arms' represented as: $[B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.1, 0.3, 0.5, 0.7, 0.9], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.1, 0.3, 0.5, 0.7, 0.9]

- with 'arms' = [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)]

- with 'means' = [ 0.1 0.3 0.5 0.7 0.9]

- with 'nbArms' = 5

- with 'maxArm' = 0.9

- with 'minArm' = 0.1

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- with 'arms' represented as: $[B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.005, 0.01, 0.015, 0.84, 0.85], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.005, 0.01, 0.015, 0.84, 0.85]

- with 'arms' = [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)]

- with 'means' = [ 0.005 0.01 0.015 0.84 0.85 ]

- with 'nbArms' = 5

- with 'maxArm' = 0.85

- with 'minArm' = 0.005

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- with 'arms' represented as: $[B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)^*]$

Number of environments to try: 3

Considering the list of players :

[CentralizedMultiplePlay(Thompson), CentralizedMultiplePlay(Thompson)]

Number of players in the multi-players game: 2

Time horizon: 10000

Number of repetitions: 100

Sampling rate for saving, delta_t_save: 1

Sampling rate for plotting, delta_t_plot: 1

Number of jobs for parallelization: 4

Using collision model onlyUniqUserGetsReward (function <function onlyUniqUserGetsReward at 0x7f55868d3510>).

More details:

Simple collision model where only the players alone on one arm samples it and receives the reward.

- This is the default collision model, cf. https://arxiv.org/abs/0910.2065v3 collision model 1.

- The numpy array 'choices' is increased according to the number of users who collided (it is NOT binary).

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.3, 0.4, 0.5, 0.6, 0.7], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.3, 0.4, 0.5, 0.6, 0.7]

- with 'arms' = [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)]

- with 'means' = [ 0.3 0.4 0.5 0.6 0.7]

- with 'nbArms' = 5

- with 'maxArm' = 0.7

- with 'minArm' = 0.3

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- with 'arms' represented as: $[B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.1, 0.3, 0.5, 0.7, 0.9], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.1, 0.3, 0.5, 0.7, 0.9]

- with 'arms' = [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)]

- with 'means' = [ 0.1 0.3 0.5 0.7 0.9]

- with 'nbArms' = 5

- with 'maxArm' = 0.9

- with 'minArm' = 0.1

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- with 'arms' represented as: $[B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.005, 0.01, 0.015, 0.84, 0.85], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.005, 0.01, 0.015, 0.84, 0.85]

- with 'arms' = [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)]

- with 'means' = [ 0.005 0.01 0.015 0.84 0.85 ]

- with 'nbArms' = 5

- with 'maxArm' = 0.85

- with 'minArm' = 0.005

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- with 'arms' represented as: $[B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)^*]$

Number of environments to try: 3

Considering the list of players :

[CentralizedIMP(Thompson), CentralizedIMP(Thompson)]

Number of players in the multi-players game: 2

Time horizon: 10000

Number of repetitions: 100

Sampling rate for saving, delta_t_save: 1

Sampling rate for plotting, delta_t_plot: 1

Number of jobs for parallelization: 4

Using collision model onlyUniqUserGetsReward (function <function onlyUniqUserGetsReward at 0x7f55868d3510>).

More details:

Simple collision model where only the players alone on one arm samples it and receives the reward.

- This is the default collision model, cf. https://arxiv.org/abs/0910.2065v3 collision model 1.

- The numpy array 'choices' is increased according to the number of users who collided (it is NOT binary).

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.3, 0.4, 0.5, 0.6, 0.7], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.3, 0.4, 0.5, 0.6, 0.7]

- with 'arms' = [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)]

- with 'means' = [ 0.3 0.4 0.5 0.6 0.7]

- with 'nbArms' = 5

- with 'maxArm' = 0.7

- with 'minArm' = 0.3

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- with 'arms' represented as: $[B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.1, 0.3, 0.5, 0.7, 0.9], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.1, 0.3, 0.5, 0.7, 0.9]

- with 'arms' = [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)]

- with 'means' = [ 0.1 0.3 0.5 0.7 0.9]

- with 'nbArms' = 5

- with 'maxArm' = 0.9

- with 'minArm' = 0.1

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- with 'arms' represented as: $[B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)^*]$

Creating a new MAB problem ...

Reading arms of this MAB problem from a dictionnary 'configuration' = {'params': [0.005, 0.01, 0.015, 0.84, 0.85], 'arm_type': <class 'Arms.Bernoulli.Bernoulli'>} ...

- with 'arm_type' = <class 'Arms.Bernoulli.Bernoulli'>

- with 'params' = [0.005, 0.01, 0.015, 0.84, 0.85]

- with 'arms' = [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)]

- with 'means' = [ 0.005 0.01 0.015 0.84 0.85 ]

- with 'nbArms' = 5

- with 'maxArm' = 0.85

- with 'minArm' = 0.005

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- with 'arms' represented as: $[B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)^*]$

Number of environments to try: 3

CPU times: user 96 ms, sys: 8 ms, total: 104 ms

Wall time: 96.2 ms
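The log above reports, for each problem, a Lai & Robbins complexity constant \(C(\mu)\) and an optimal arm identification factor \(H_{OI}(\mu)\). The formulas below reproduce the printed values (9.46, 3.12, 27.3 and 60.00%, 40.00%, 29.40%): \(C(\mu) = \sum_{k:\mu_k \neq \mu^*} (\mu^* - \mu_k) / \mathrm{kl}(\mu_k, \mu^*)\) and \(H_{OI}(\mu) = \frac{1}{K} \sum_{k:\mu_k \neq \mu^*} (1 - (\mu^* - \mu_k))\). We assume, without claiming this is the library's exact code, that this is how they are computed; note that this \(C(\mu)\) is the single-player constant.

```python
import numpy as np

def kl_bernoulli(p, q, eps=1e-15):
    """Kullback-Leibler divergence kl(p, q) between Bernoulli(p) and Bernoulli(q)."""
    p = min(max(p, eps), 1 - eps)
    q = min(max(q, eps), 1 - eps)
    return p * np.log(p / q) + (1 - p) * np.log((1 - p) / (1 - q))

def lai_robbins_constant(means):
    """C(mu) = sum over suboptimal arms k of (mu* - mu_k) / kl(mu_k, mu*)."""
    best = max(means)
    return sum((best - mu) / kl_bernoulli(mu, best) for mu in means if mu != best)

def hoi_factor(means):
    """H_OI(mu) = (1/K) * sum over suboptimal arms k of (1 - (mu* - mu_k))."""
    best = max(means)
    return sum(1 - (best - mu) for mu in means if mu != best) / len(means)

for means in ([0.3, 0.4, 0.5, 0.6, 0.7],
              [0.1, 0.3, 0.5, 0.7, 0.9],
              [0.005, 0.01, 0.015, 0.84, 0.85]):
    print(f"C(mu) = {lai_robbins_constant(means):.3g}, H_OI(mu) = {hoi_factor(means):.2%}")
```

The third problem gets the largest \(C(\mu)\) almost entirely from the pair \(0.84\) vs \(0.85\): a gap of \(0.01\) with a very small KL divergence makes that suboptimal arm expensive to rule out.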

Solving the problem

Now we can simulate the \(3\) environments, for each of the successive policies. This part can take some time.
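Each call to `startOneEnv` hides the simulation loop. As a rough, toy illustration of what one repetition computes for a centralized UCB policy, here is a numpy sketch; it is not how EvaluatorMultiPlayers is implemented (no repetitions, no parallelism, no Thompson or IMP variants), the function name is hypothetical, and it tracks the pseudo-regret (expected rewards of the \(M\) best arms minus expected rewards of the chosen arms) rather than sampled rewards.

```python
import numpy as np

def simulate_centralized_ucb(means, nb_players, horizon, alpha=1.0, seed=0):
    """Toy run of one centralized UCB-alpha learner choosing nb_players distinct
    arms per step (so no collisions). Returns the cumulated pseudo-regret after
    each step and the number of pulls of each arm."""
    rng = np.random.default_rng(seed)
    means = np.asarray(means, dtype=float)
    nb_arms = len(means)
    sums = np.zeros(nb_arms)
    pulls = np.zeros(nb_arms)
    best_sum = np.sort(means)[-nb_players:].sum()  # expected reward of the M best arms
    regret = np.zeros(horizon)
    expected_total = 0.0
    for t in range(1, horizon + 1):
        indexes = np.full(nb_arms, np.inf)  # unpulled arms are explored first
        seen = pulls > 0
        indexes[seen] = sums[seen] / pulls[seen] + np.sqrt(alpha * np.log(t) / (2 * pulls[seen]))
        chosen = np.argsort(-indexes, kind="stable")[:nb_players]  # M distinct arms
        rewards = (rng.random(nb_players) < means[chosen]).astype(float)  # Bernoulli draws
        sums[chosen] += rewards
        pulls[chosen] += 1
        expected_total += means[chosen].sum()
        regret[t - 1] = t * best_sum - expected_total
    return regret, pulls

regret, pulls = simulate_centralized_ucb([0.1, 0.3, 0.5, 0.7, 0.9], nb_players=2, horizon=2000)
print(f"pseudo-regret after {len(regret)} steps: {regret[-1]:.1f}")
print("pulls per arm:", pulls)
```

Because the chosen arms are always distinct, the collision model never applies here; averaging such curves over \(N\) repetitions is what the evaluator does at a much larger scale.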

In [21]:

%%time
for playersId, evaluation in tqdm(enumerate(evs), desc="Policies"):
    for envId, env in tqdm(enumerate(evaluation.envs), desc="Problems"):
        # Evaluate just that env
        evaluation.startOneEnv(envId, env)
        # Storing it after simulation is done
        evaluators[envId][playersId] = evaluation

Evaluating environment: MAB(nbArms: 5, arms: [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)], minArm: 0.3, maxArm: 0.7)

- Adding player #1 = #1<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Using this already created player 'player' = #1<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

- Adding player #2 = #2<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Using this already created player 'player' = #2<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Estimated order by the policy #1<CentralizedMultiplePlay(UCB($\alpha=1$))> after 10000 steps: [1 2 0 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 68.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 85.84% (relative success)...

==> Spearman distance from optimal ordering: 81.19% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 78.76% (relative success)...

Estimated order by the policy #2<CentralizedMultiplePlay(UCB($\alpha=1$))> after 10000 steps: [1 2 0 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 68.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 85.84% (relative success)...

==> Spearman distance from optimal ordering: 81.19% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 78.76% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 3.1s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 17.0s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 39.0s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)], minArm: 0.1, maxArm: 0.9)

- Adding player #1 = #1<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Using this already created player 'player' = #1<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

- Adding player #2 = #2<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Using this already created player 'player' = #2<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Estimated order by the policy #1<CentralizedMultiplePlay(UCB($\alpha=1$))> after 10000 steps: [1 0 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

Estimated order by the policy #2<CentralizedMultiplePlay(UCB($\alpha=1$))> after 10000 steps: [1 0 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 3.1s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 19.5s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 43.4s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)], minArm: 0.005, maxArm: 0.85)

- Adding player #1 = #1<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Using this already created player 'player' = #1<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

- Adding player #2 = #2<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Using this already created player 'player' = #2<CentralizedMultiplePlay(UCB($\alpha=1$))> ...

Estimated order by the policy #1<CentralizedMultiplePlay(UCB($\alpha=1$))> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

Estimated order by the policy #2<CentralizedMultiplePlay(UCB($\alpha=1$))> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 2.7s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 18.5s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 39.0s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)], minArm: 0.3, maxArm: 0.7)

- Adding player #1 = #1<CentralizedIMP(UCB($\alpha=1$))> ...

Using this already created player 'player' = #1<CentralizedIMP(UCB($\alpha=1$))> ...

- Adding player #2 = #2<CentralizedIMP(UCB($\alpha=1$))> ...

Using this already created player 'player' = #2<CentralizedIMP(UCB($\alpha=1$))> ...

Estimated order by the policy #1<CentralizedIMP(UCB($\alpha=1$))> after 10000 steps: [1 0 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

Estimated order by the policy #2<CentralizedIMP(UCB($\alpha=1$))> after 10000 steps: [1 0 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 5.8s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 33.1s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 1.3min finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)], minArm: 0.1, maxArm: 0.9)

- Adding player #1 = #1<CentralizedIMP(UCB($\alpha=1$))> ...

Using this already created player 'player' = #1<CentralizedIMP(UCB($\alpha=1$))> ...

- Adding player #2 = #2<CentralizedIMP(UCB($\alpha=1$))> ...

Using this already created player 'player' = #2<CentralizedIMP(UCB($\alpha=1$))> ...

Estimated order by the policy #1<CentralizedIMP(UCB($\alpha=1$))> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

Estimated order by the policy #2<CentralizedIMP(UCB($\alpha=1$))> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 6.3s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 33.7s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 1.3min finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)], minArm: 0.005, maxArm: 0.85)

- Adding player #1 = #1<CentralizedIMP(UCB($\alpha=1$))> ...

Using this already created player 'player' = #1<CentralizedIMP(UCB($\alpha=1$))> ...

- Adding player #2 = #2<CentralizedIMP(UCB($\alpha=1$))> ...

Using this already created player 'player' = #2<CentralizedIMP(UCB($\alpha=1$))> ...

Estimated order by the policy #1<CentralizedIMP(UCB($\alpha=1$))> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

Estimated order by the policy #2<CentralizedIMP(UCB($\alpha=1$))> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 5.8s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 33.6s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 1.3min finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)], minArm: 0.3, maxArm: 0.7)

- Adding player #1 = #1<CentralizedMultiplePlay(Thompson)> ...

Using this already created player 'player' = #1<CentralizedMultiplePlay(Thompson)> ...

- Adding player #2 = #2<CentralizedMultiplePlay(Thompson)> ...

Using this already created player 'player' = #2<CentralizedMultiplePlay(Thompson)> ...

Estimated order by the policy #1<CentralizedMultiplePlay(Thompson)> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

Estimated order by the policy #2<CentralizedMultiplePlay(Thompson)> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 2.7s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 15.1s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 35.0s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)], minArm: 0.1, maxArm: 0.9)

- Adding player #1 = #1<CentralizedMultiplePlay(Thompson)> ...

Using this already created player 'player' = #1<CentralizedMultiplePlay(Thompson)> ...

- Adding player #2 = #2<CentralizedMultiplePlay(Thompson)> ...

Using this already created player 'player' = #2<CentralizedMultiplePlay(Thompson)> ...

Estimated order by the policy #1<CentralizedMultiplePlay(Thompson)> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

Estimated order by the policy #2<CentralizedMultiplePlay(Thompson)> after 10000 steps: [1 0 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 3.1s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 16.8s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 41.9s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)], minArm: 0.005, maxArm: 0.85)

- Adding player #1 = #1<CentralizedMultiplePlay(Thompson)> ...

Using this already created player 'player' = #1<CentralizedMultiplePlay(Thompson)> ...

- Adding player #2 = #2<CentralizedMultiplePlay(Thompson)> ...

Using this already created player 'player' = #2<CentralizedMultiplePlay(Thompson)> ...

Estimated order by the policy #1<CentralizedMultiplePlay(Thompson)> after 10000 steps: [1 0 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

Estimated order by the policy #2<CentralizedMultiplePlay(Thompson)> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 3.1s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 17.1s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 38.2s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.3), B(0.4), B(0.5), B(0.6), B(0.7)], minArm: 0.3, maxArm: 0.7)

- Adding player #1 = #1<CentralizedIMP(Thompson)> ...

Using this already created player 'player' = #1<CentralizedIMP(Thompson)> ...

- Adding player #2 = #2<CentralizedIMP(Thompson)> ...

Using this already created player 'player' = #2<CentralizedIMP(Thompson)> ...

Estimated order by the policy #1<CentralizedIMP(Thompson)> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

Estimated order by the policy #2<CentralizedIMP(Thompson)> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 3.2s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 16.1s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 38.0s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.1), B(0.3), B(0.5), B(0.7), B(0.9)], minArm: 0.1, maxArm: 0.9)

- Adding player #1 = #1<CentralizedIMP(Thompson)> ...

Using this already created player 'player' = #1<CentralizedIMP(Thompson)> ...

- Adding player #2 = #2<CentralizedIMP(Thompson)> ...

Using this already created player 'player' = #2<CentralizedIMP(Thompson)> ...

Estimated order by the policy #1<CentralizedIMP(Thompson)> after 10000 steps: [0 2 1 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

Estimated order by the policy #2<CentralizedIMP(Thompson)> after 10000 steps: [0 1 2 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 100.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 98.57% (relative success)...

==> Spearman distance from optimal ordering: 100.00% (relative success)...

==> Gestalt distance from optimal ordering: 100.00% (relative success)...

==> Mean distance from optimal ordering: 99.64% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 3.7s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 17.9s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 39.3s finished

Evaluating environment: MAB(nbArms: 5, arms: [B(0.005), B(0.01), B(0.015), B(0.84), B(0.85)], minArm: 0.005, maxArm: 0.85)

- Adding player #1 = #1<CentralizedIMP(Thompson)> ...

Using this already created player 'player' = #1<CentralizedIMP(Thompson)> ...

- Adding player #2 = #2<CentralizedIMP(Thompson)> ...

Using this already created player 'player' = #2<CentralizedIMP(Thompson)> ...

Estimated order by the policy #1<CentralizedIMP(Thompson)> after 10000 steps: [0 2 1 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

Estimated order by the policy #2<CentralizedIMP(Thompson)> after 10000 steps: [0 2 1 3 4] ...

==> Optimal arm identification: 100.00% (relative success)...

==> Manhattan distance from optimal ordering: 84.00% (relative success)...

==> Kendell Tau distance from optimal ordering: 95.00% (relative success)...

==> Spearman distance from optimal ordering: 96.26% (relative success)...

==> Gestalt distance from optimal ordering: 80.00% (relative success)...

==> Mean distance from optimal ordering: 88.81% (relative success)...

[Parallel(n_jobs=4)]: Done 5 tasks | elapsed: 3.0s

[Parallel(n_jobs=4)]: Done 42 tasks | elapsed: 18.0s

[Parallel(n_jobs=4)]: Done 100 out of 100 | elapsed: 44.6s finished

CPU times: user 40.5 s, sys: 1.83 s, total: 42.3 s

Wall time: 10min 28s

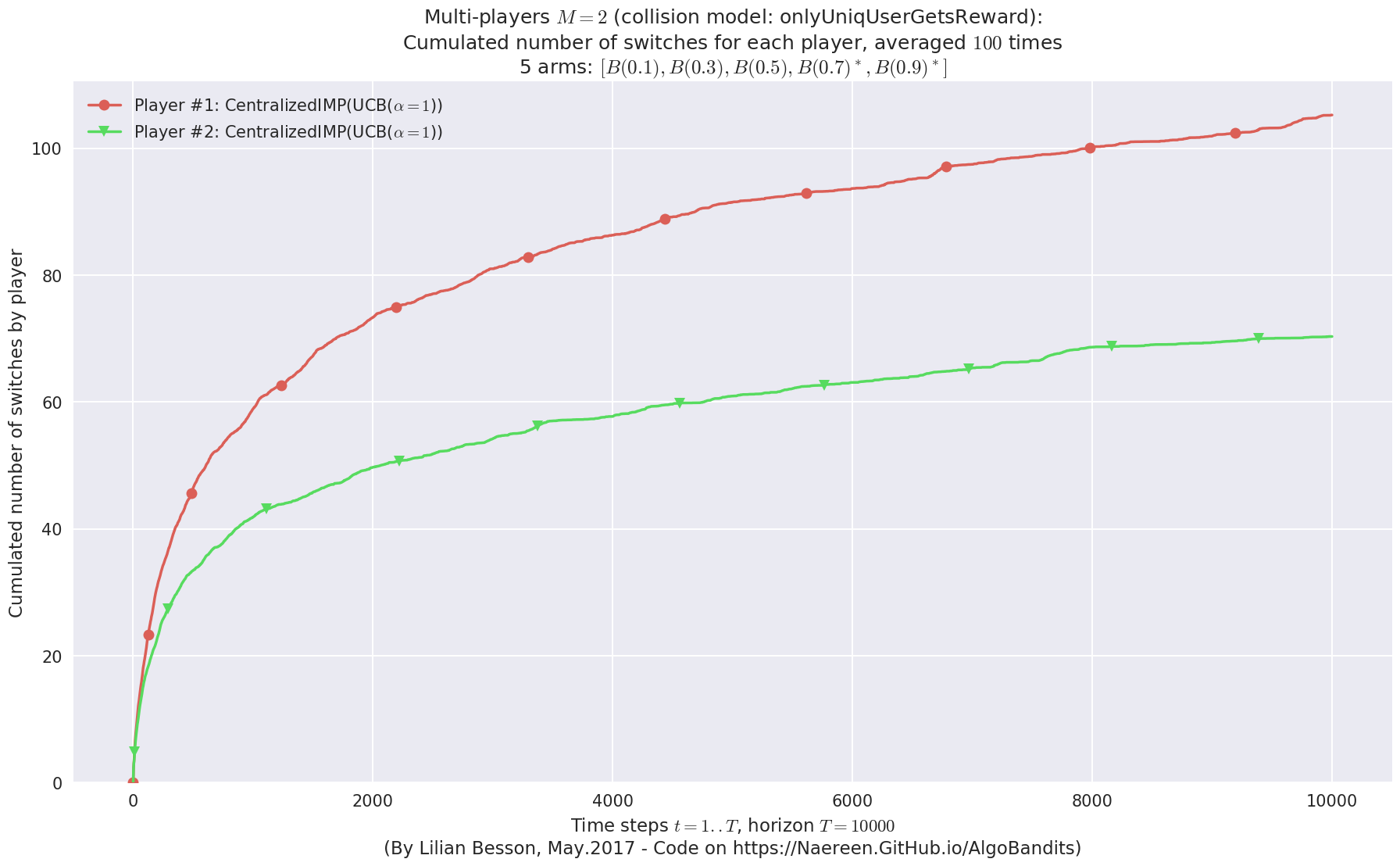

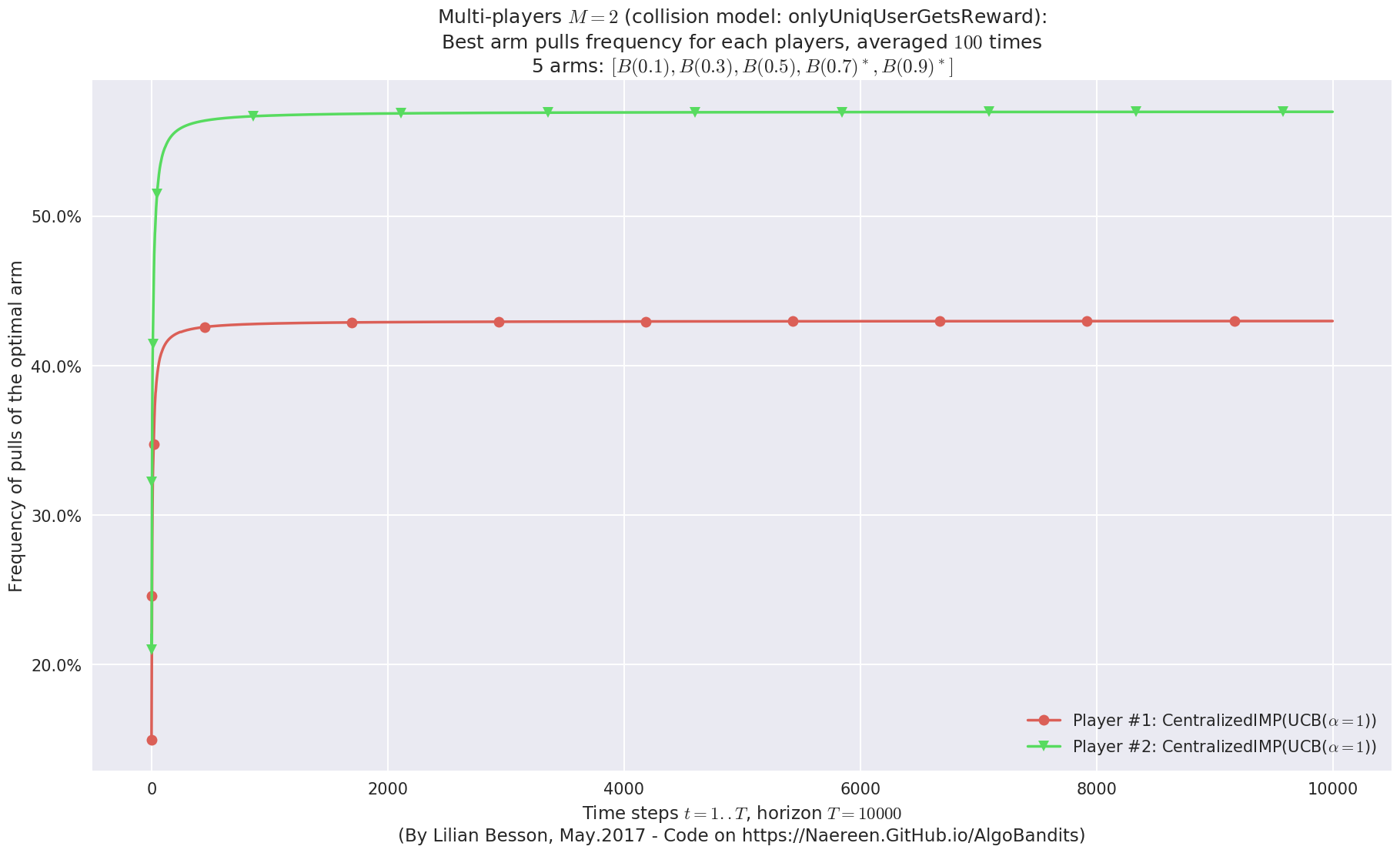

Plotting the results

Finally, we visualize the results with the plotting methods of an EvaluatorMultiPlayers object:

In [22]:

def plotAll(evaluation, envId):
    evaluation.printFinalRanking(envId)
    # Rewards
    evaluation.plotRewards(envId)
    # Fairness
    #evaluation.plotFairness(envId, fairness="STD")
    # Centralized regret
    evaluation.plotRegretCentralized(envId, subTerms=True)
    #evaluation.plotRegretCentralized(envId, semilogx=True, subTerms=True)
    # Number of switches
    #evaluation.plotNbSwitchs(envId, cumulated=False)
    evaluation.plotNbSwitchs(envId, cumulated=True)
    # Frequency of selection of the best arms
    evaluation.plotBestArmPulls(envId)
    # Number of collisions - not for Centralized* policies
    #evaluation.plotNbCollisions(envId, cumulated=False)
    #evaluation.plotNbCollisions(envId, cumulated=True)
    # Frequency of collision in each arm
    #evaluation.plotFrequencyCollisions(envId, piechart=True)
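The centralized regret drawn by plotRegretCentralized is, in its usual definition, the gap between what an oracle would collect by assigning the M best arms to the M players at every step and the total reward the players actually collected. Below is a minimal NumPy sketch of that definition, assuming the players' rewards are stored in an array of shape (M, T). The function centralized_regret and this array layout are hypothetical, and the library may compute its regret from the expected means of the pulled arms rather than from sampled rewards.

```python
import numpy as np


def centralized_regret(means, rewards):
    """Cumulated centralized regret R_t = t * (sum of the M best means) - (total reward up to t).

    means   : the K arm means.
    rewards : array of shape (M, T), reward of each of the M players at each step.
    Returns an array of length T holding R_1, ..., R_T.
    """
    means = np.asarray(means, dtype=float)
    rewards = np.asarray(rewards, dtype=float)
    nb_players, horizon = rewards.shape
    # Oracle gain per step: the M best arms, one per player, without any collision.
    best_sum = np.sort(means)[-nb_players:].sum()
    steps = np.arange(1, horizon + 1)
    # Reward of all players together, cumulated over time.
    collected = np.cumsum(rewards.sum(axis=0))
    return steps * best_sum - collected


# Toy run on the first problem: player 1 always pulls the 0.7 arm (optimal),
# player 2 always pulls the 0.5 arm instead of the 0.6 arm.
rng = np.random.default_rng(0)
mu = np.array([0.3, 0.4, 0.5, 0.6, 0.7])
T = 10_000
rewards = np.vstack([rng.binomial(1, 0.7, T), rng.binomial(1, 0.5, T)])
regret = centralized_regret(mu, rewards)
print(regret[-1])  # close to 0.1 * T = 1000 in expectation
```

Because it subtracts sampled rewards, this curve is noisy and can even be negative for small t; averaging it over repetitions smooths it out.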

First problem

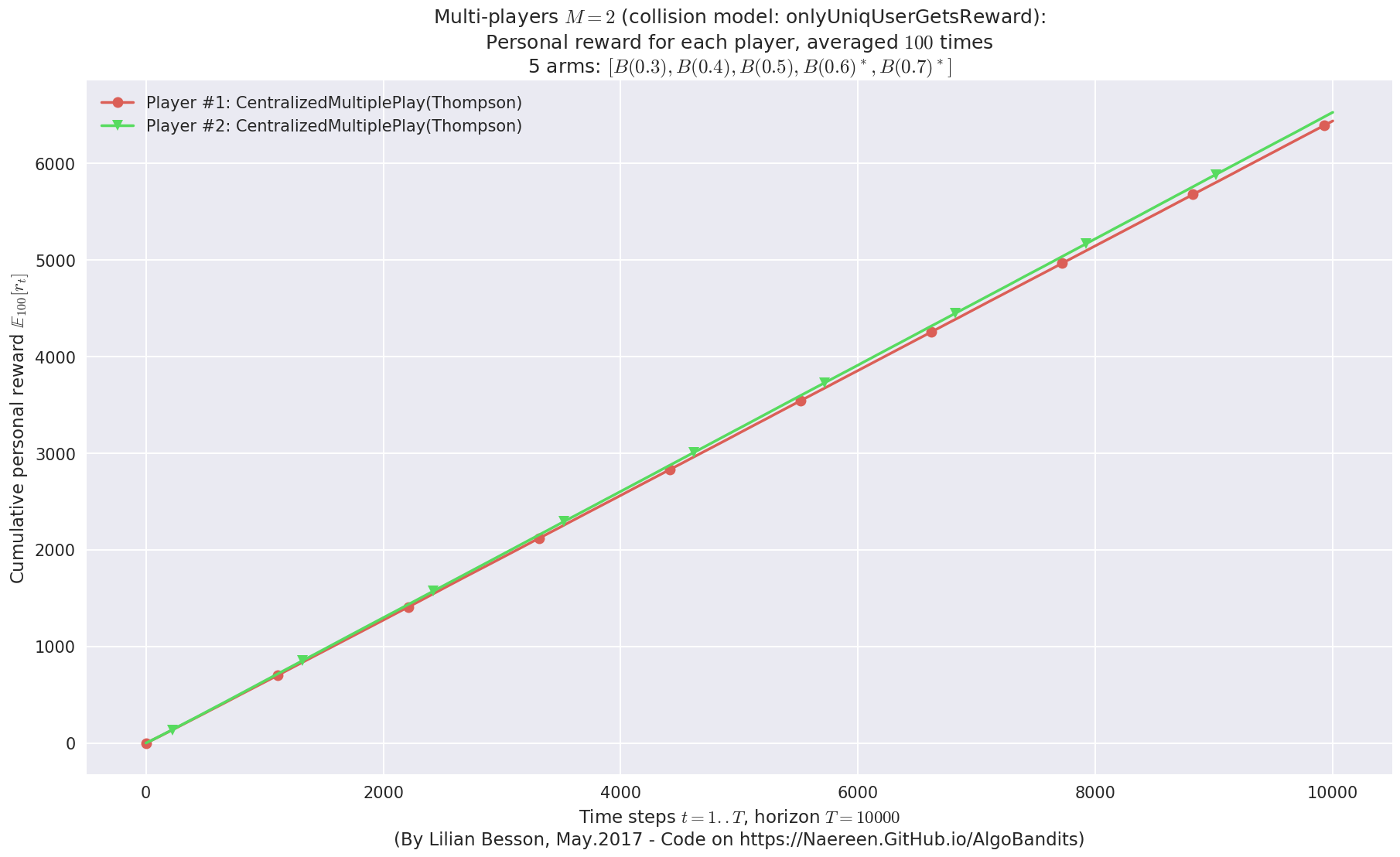

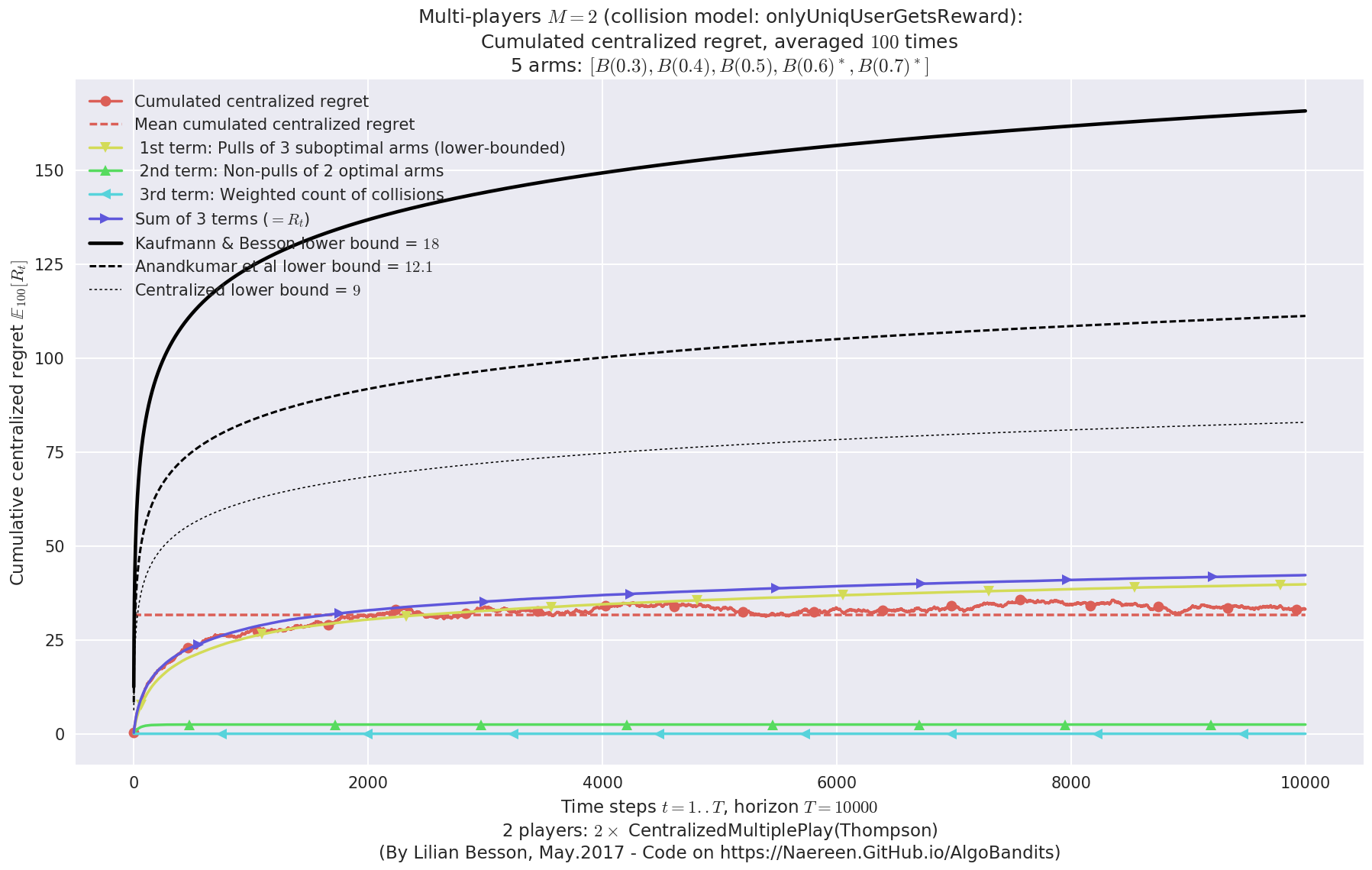

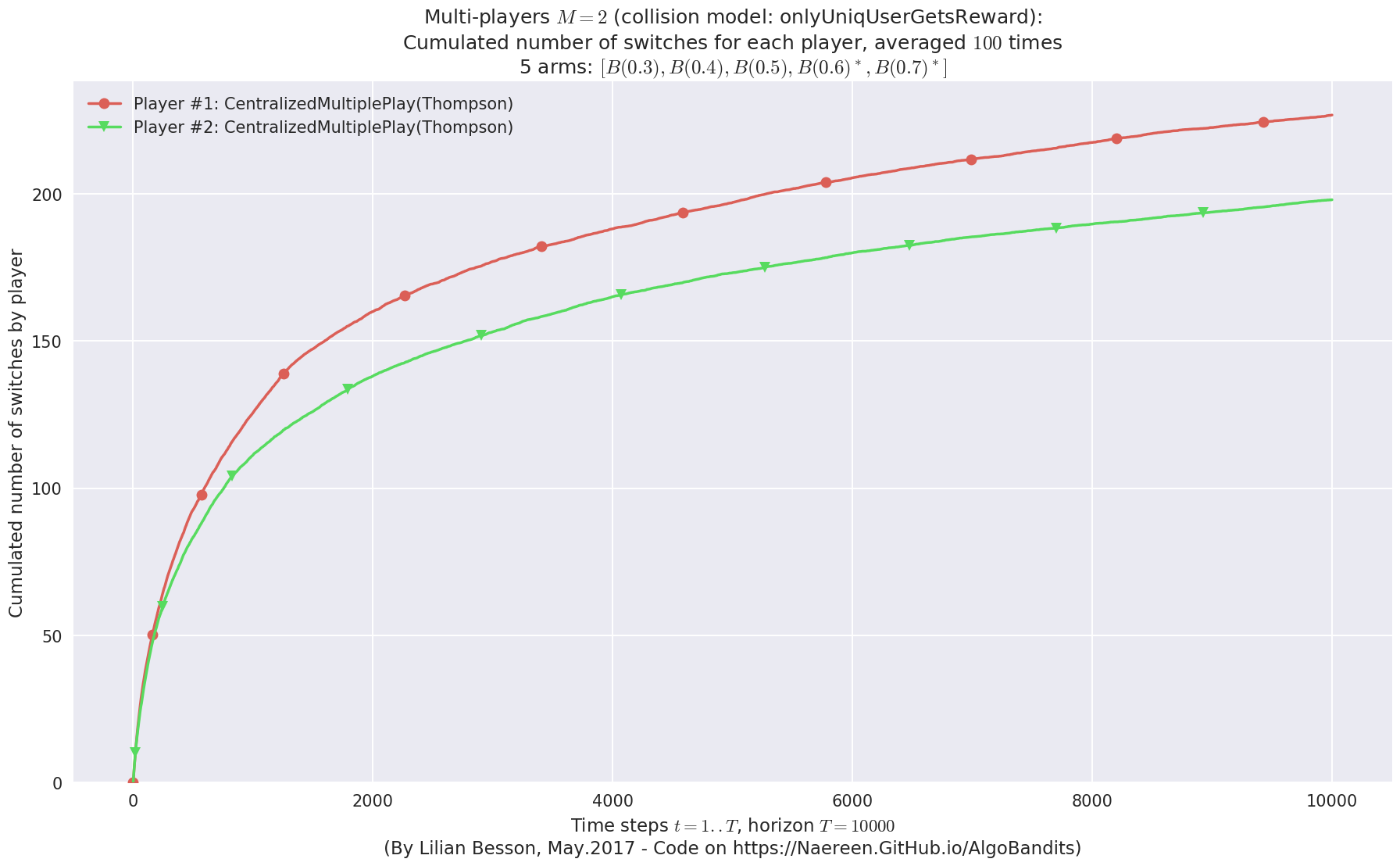

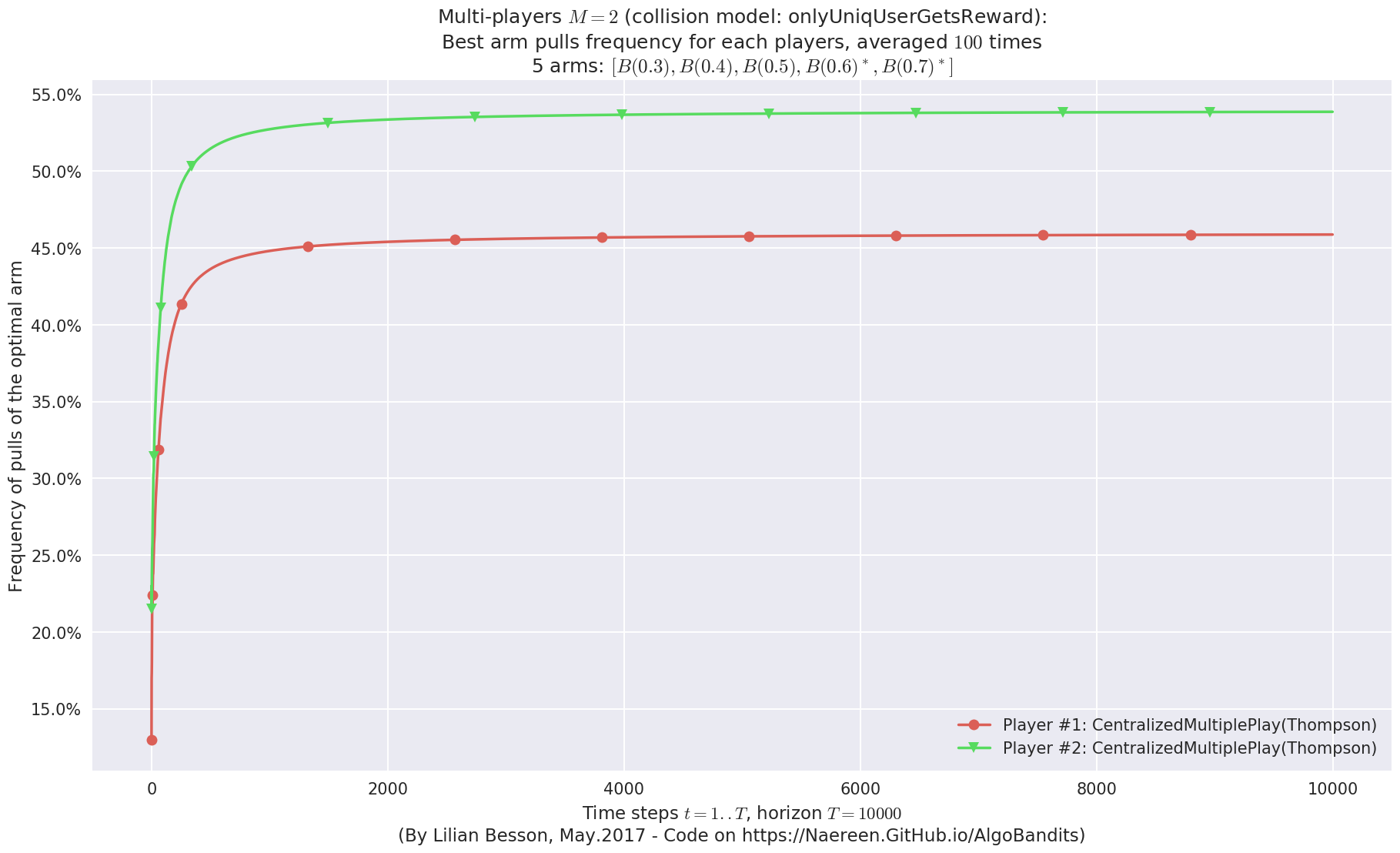

\(\mu = [0.3, 0.4, 0.5, 0.6, 0.7]\) is an easy Bernoulli problem, with a constant gap \(\Delta = 0.1\) between consecutive arms.
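The [Lai & Robbins] complexity constant printed in the rankings can be recomputed by hand. This sketch assumes the usual single-player form \(C(\mu) = \sum_{k : \mu_k < \mu^*} (\mu^* - \mu_k) / \mathrm{kl}(\mu_k, \mu^*)\), where kl is the Kullback-Leibler divergence between two Bernoulli distributions. The helper names are hypothetical. With this formula we obtain about 9.46, 3.12 and 27.3 for the three problems, which matches the printed values, but we have not checked the library's own code.

```python
import math


def kl_bernoulli(p, q, eps=1e-15):
    """Kullback-Leibler divergence kl(p, q) between Bernoulli(p) and Bernoulli(q)."""
    # Clamp away from 0 and 1 to avoid log(0).
    p = min(max(p, eps), 1 - eps)
    q = min(max(q, eps), 1 - eps)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def lai_robbins_constant(means):
    """Single-player constant: sum over suboptimal arms of (mu* - mu_k) / kl(mu_k, mu*)."""
    best = max(means)
    return sum((best - mu) / kl_bernoulli(mu, best) for mu in means if mu < best)


for mu in ([0.3, 0.4, 0.5, 0.6, 0.7],
           [0.1, 0.3, 0.5, 0.7, 0.9],
           [0.005, 0.01, 0.015, 0.84, 0.85]):
    print(mu, round(lai_robbins_constant(mu), 2))
```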

In [23]:

for playersId in tqdm(range(len(evs)), desc="Policies"):
    evaluation = evaluators[0][playersId]
    plotAll(evaluation, 0)

Final ranking for this environment #0 :

- Player #1, '#1<CentralizedMultiplePlay(UCB($\alpha=1$))>' was ranked 1 / 2 for this simulation (last rewards = 6470.17).

- Player #2, '#2<CentralizedMultiplePlay(UCB($\alpha=1$))>' was ranked 2 / 2 for this simulation (last rewards = 6393.89).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 9 ...

- For 2 players, our lower bound gave = 18 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 12.1 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- [Anandtharam et al] centralized lowerbound = 18,

- Our decentralized lowerbound = 12.1,

- [Anandkumar et al] decentralized lowerbound = 9

Final ranking for this environment #0 :

- Player #1, '#1<CentralizedIMP(UCB($\alpha=1$))>' was ranked 1 / 2 for this simulation (last rewards = 6486.51).

- Player #2, '#2<CentralizedIMP(UCB($\alpha=1$))>' was ranked 2 / 2 for this simulation (last rewards = 6368).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 9 ...

- For 2 players, our lower bound gave = 18 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 12.1 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- [Anandtharam et al] centralized lowerbound = 18,

- Our decentralized lowerbound = 12.1,

- [Anandkumar et al] decentralized lowerbound = 9

Final ranking for this environment #0 :

- Player #2, '#2<CentralizedMultiplePlay(Thompson)>' was ranked 1 / 2 for this simulation (last rewards = 6496.3).

- Player #1, '#1<CentralizedMultiplePlay(Thompson)>' was ranked 2 / 2 for this simulation (last rewards = 6406.86).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 9 ...

- For 2 players, our lower bound gave = 18 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 12.1 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- [Anandtharam et al] centralized lowerbound = 18,

- Our decentralized lowerbound = 12.1,

- [Anandkumar et al] decentralized lowerbound = 9

Final ranking for this environment #0 :

- Player #2, '#2<CentralizedIMP(Thompson)>' was ranked 1 / 2 for this simulation (last rewards = 6458.99).

- Player #1, '#1<CentralizedIMP(Thompson)>' was ranked 2 / 2 for this simulation (last rewards = 6434.01).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 9 ...

- For 2 players, our lower bound gave = 18 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 12.1 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- [Anandtharam et al] centralized lowerbound = 18,

- Our decentralized lowerbound = 12.1,

- [Anandkumar et al] decentralized lowerbound = 9

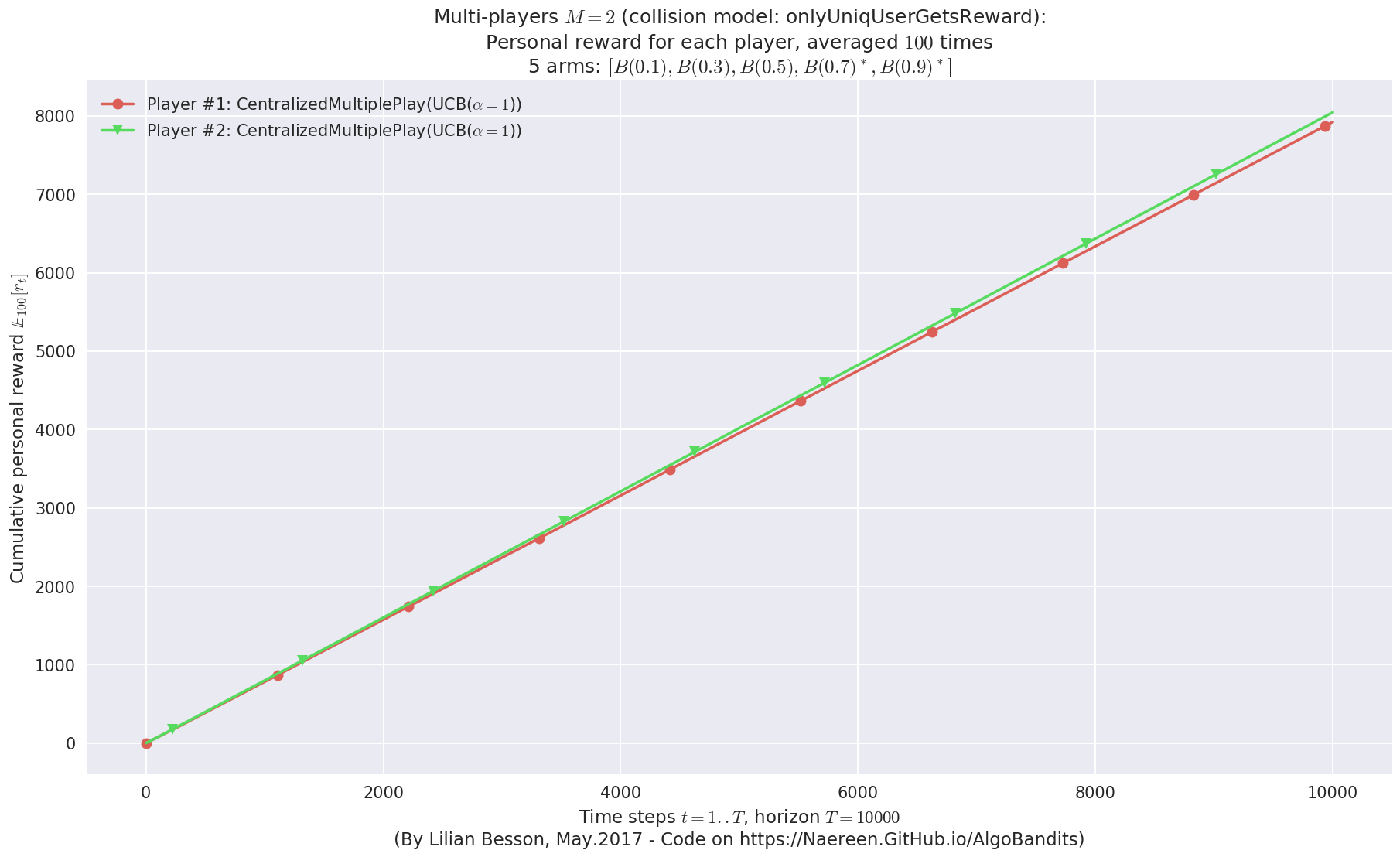

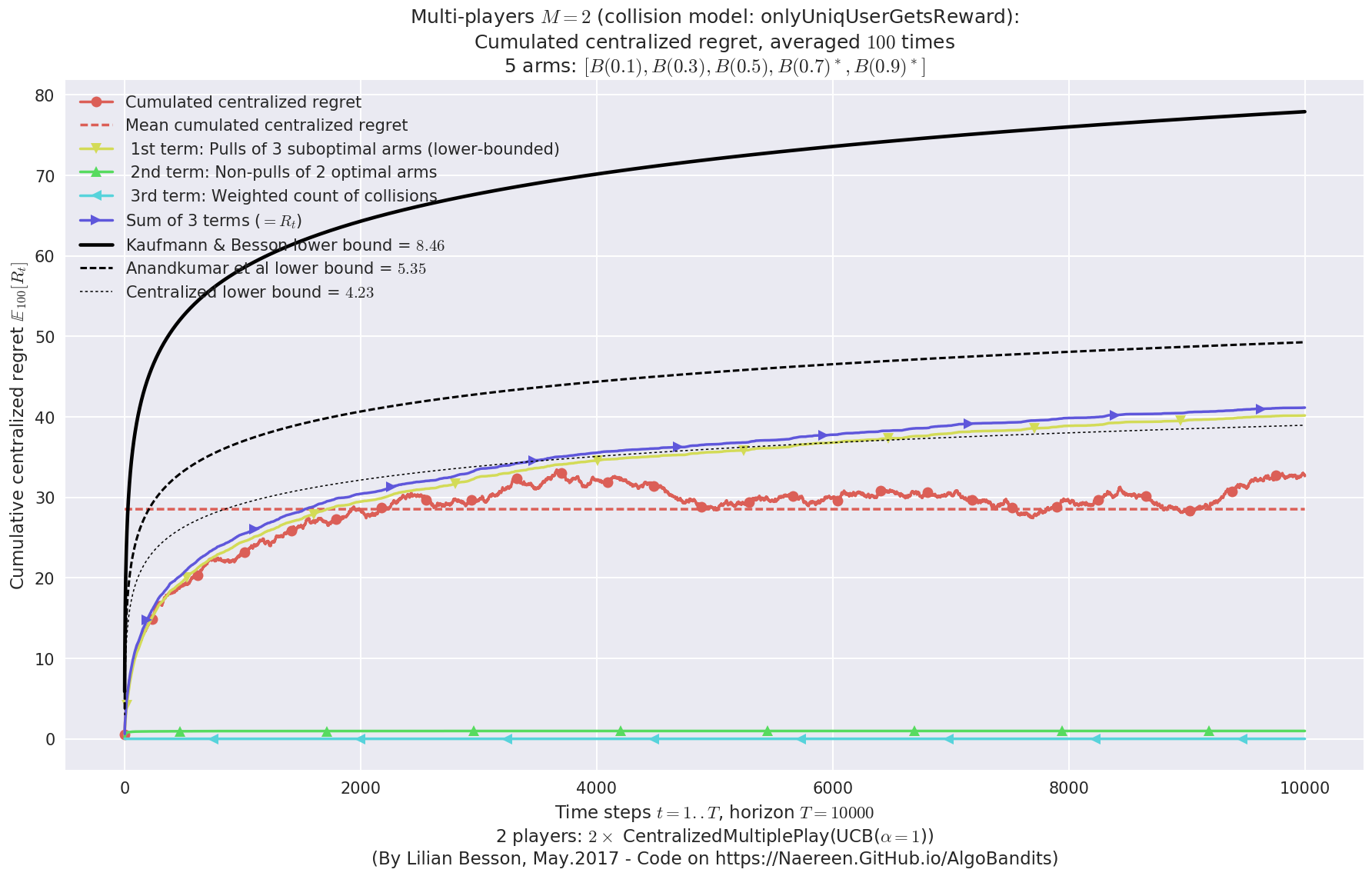

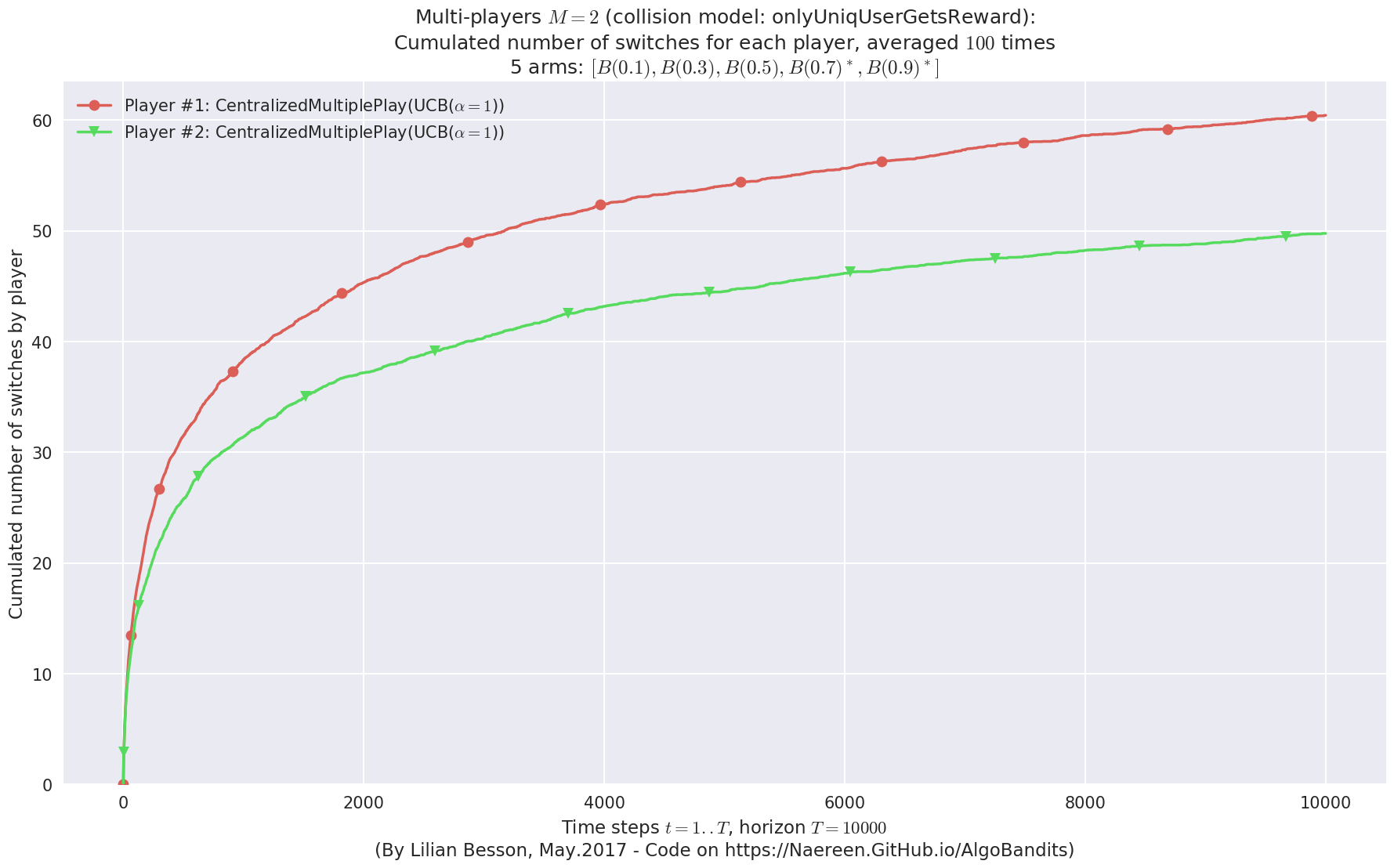

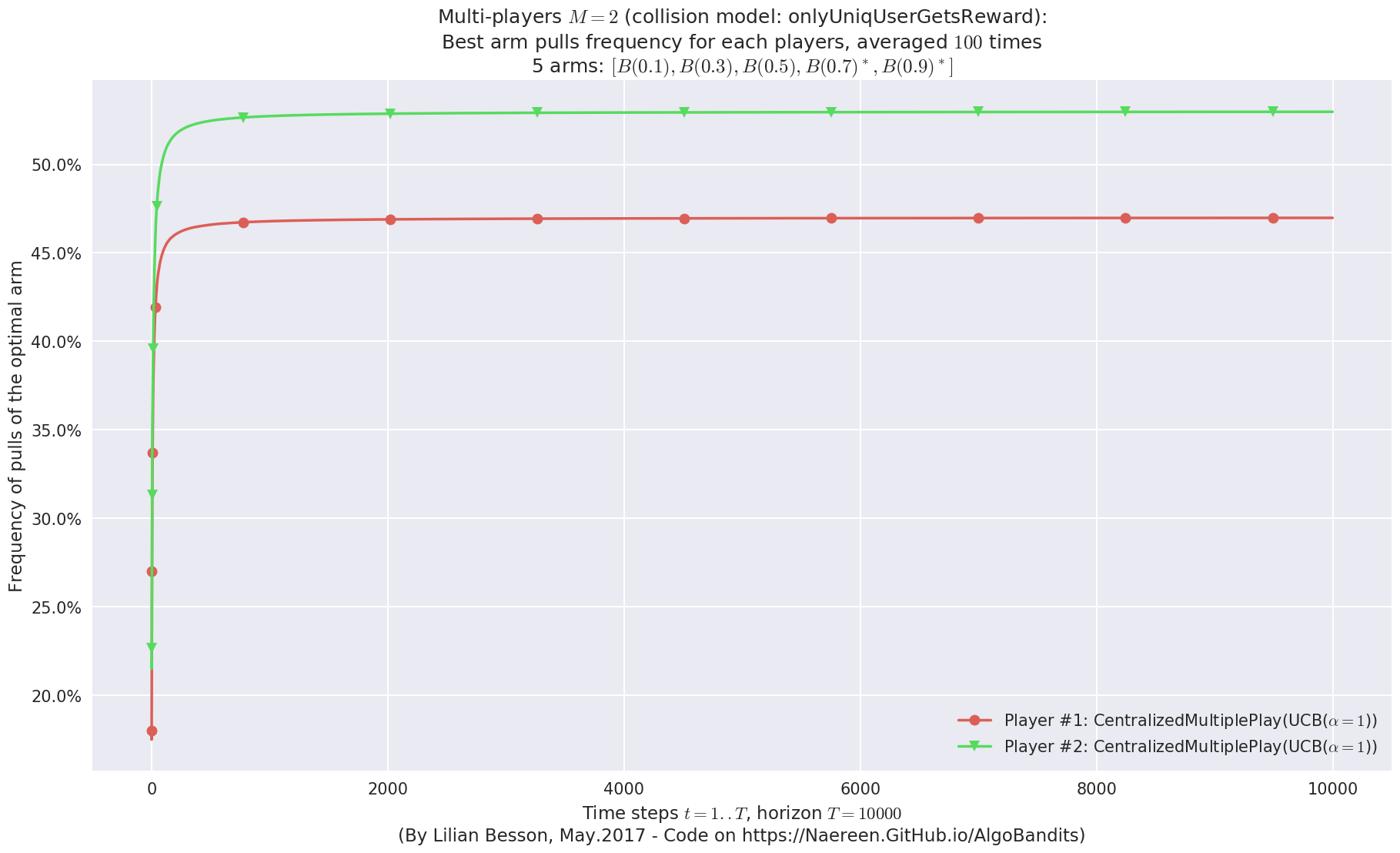

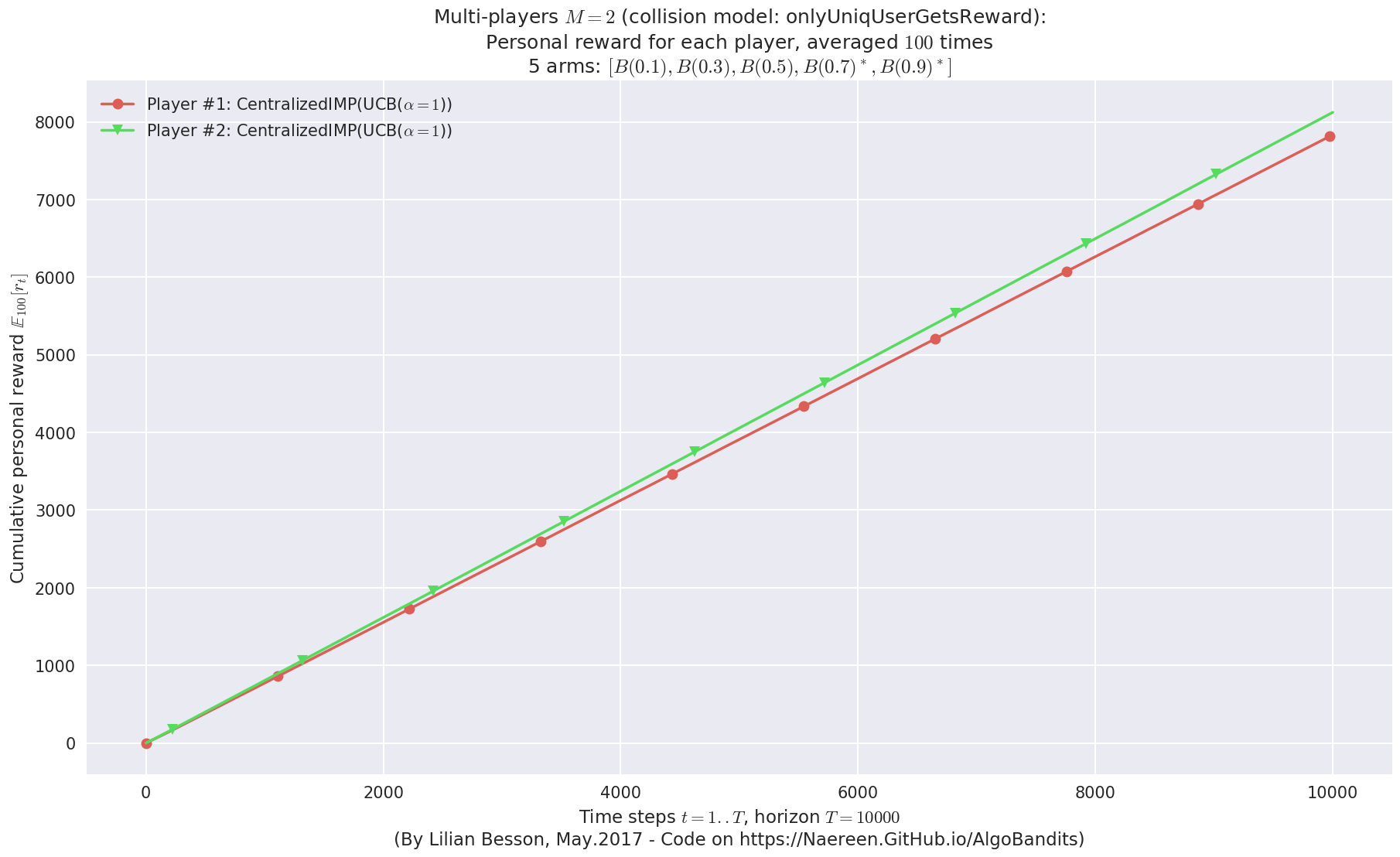

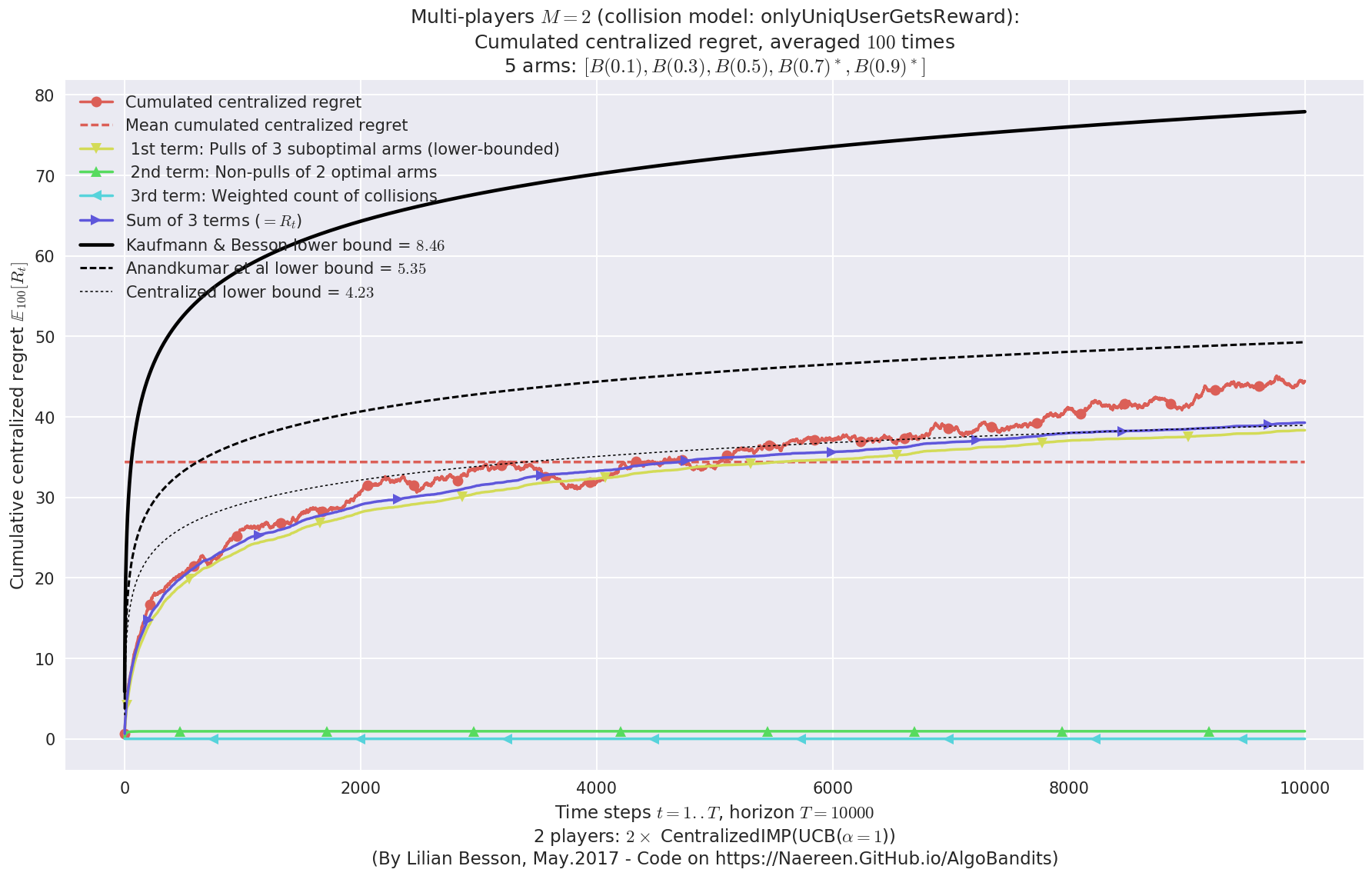

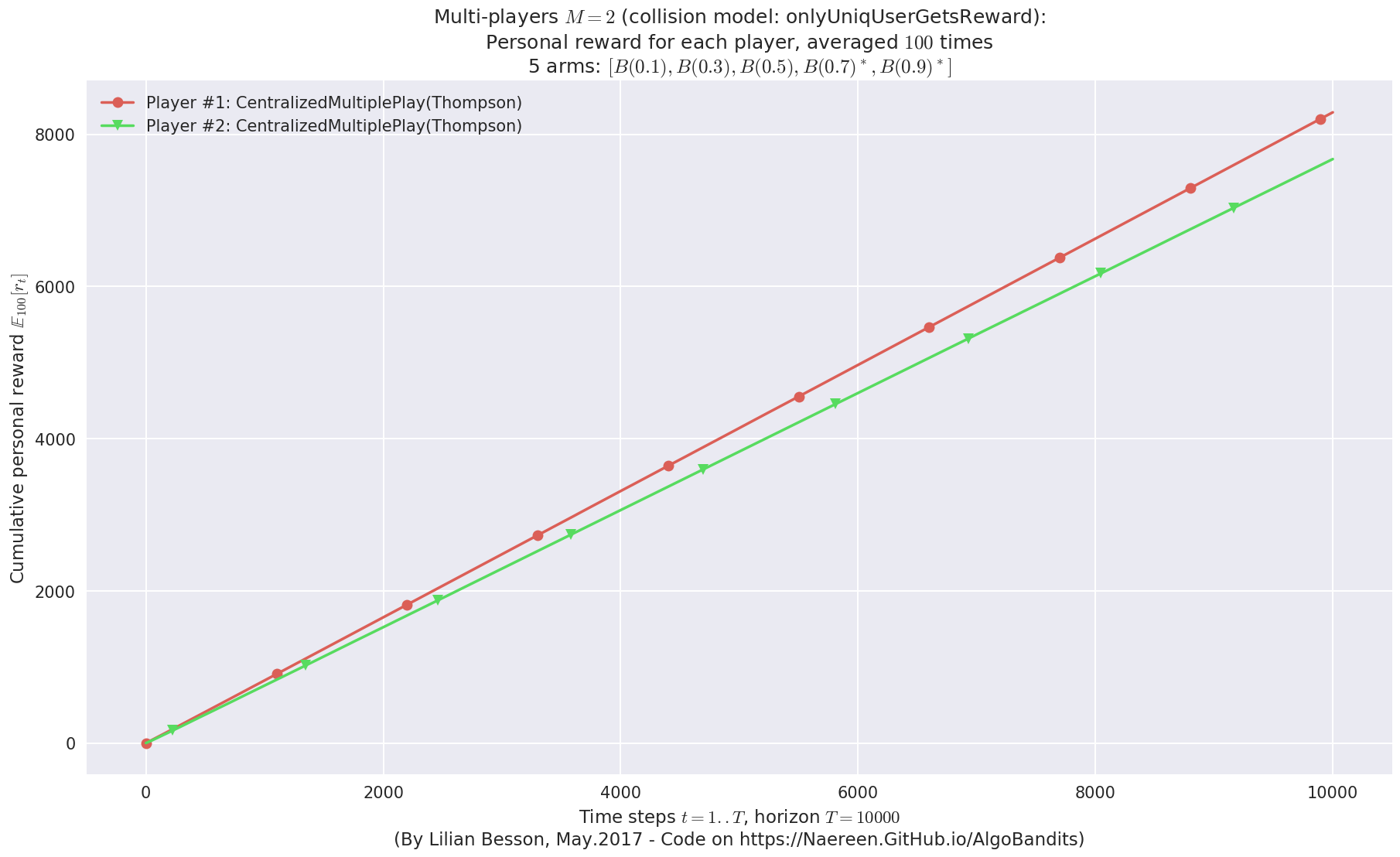

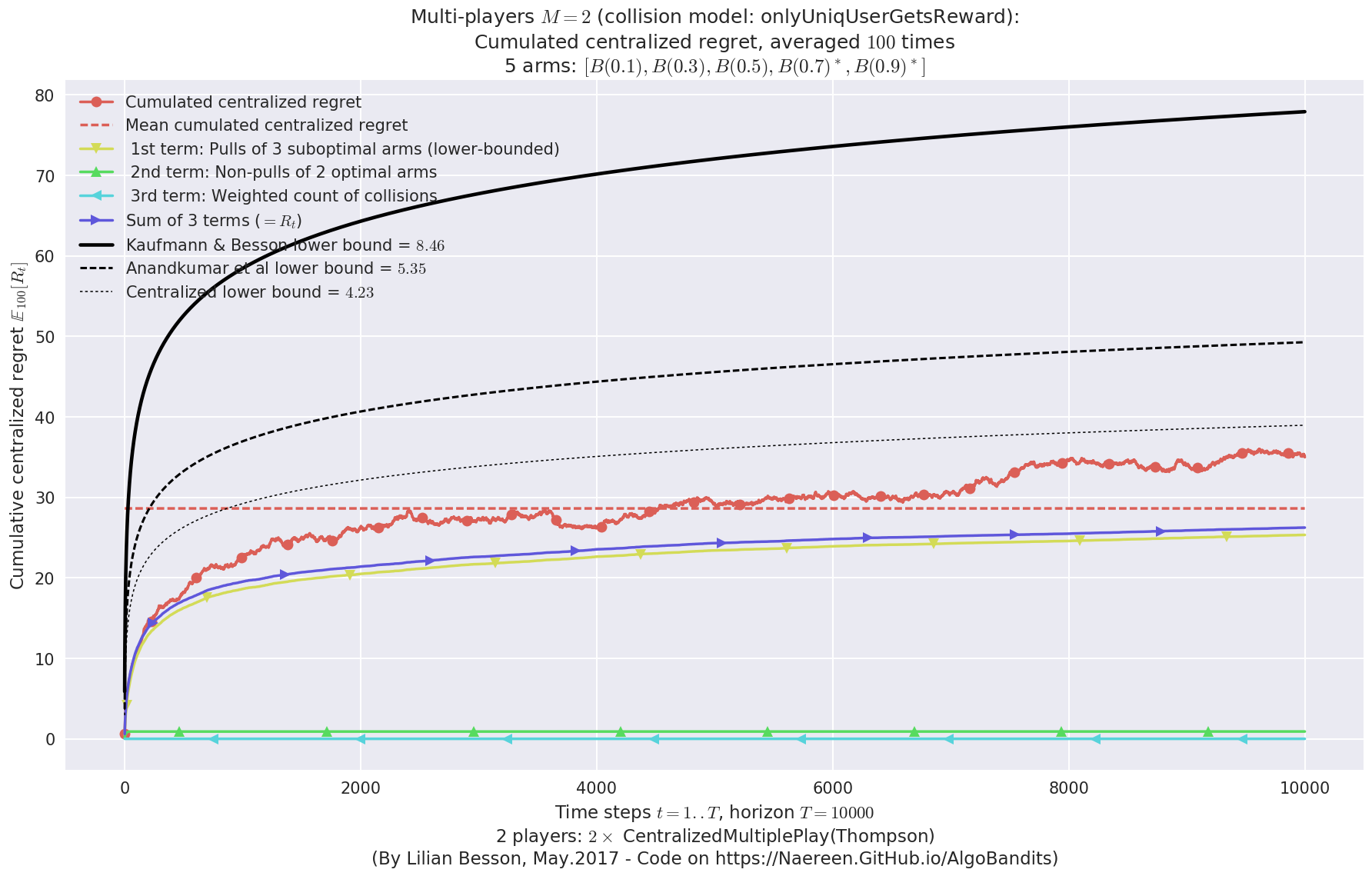

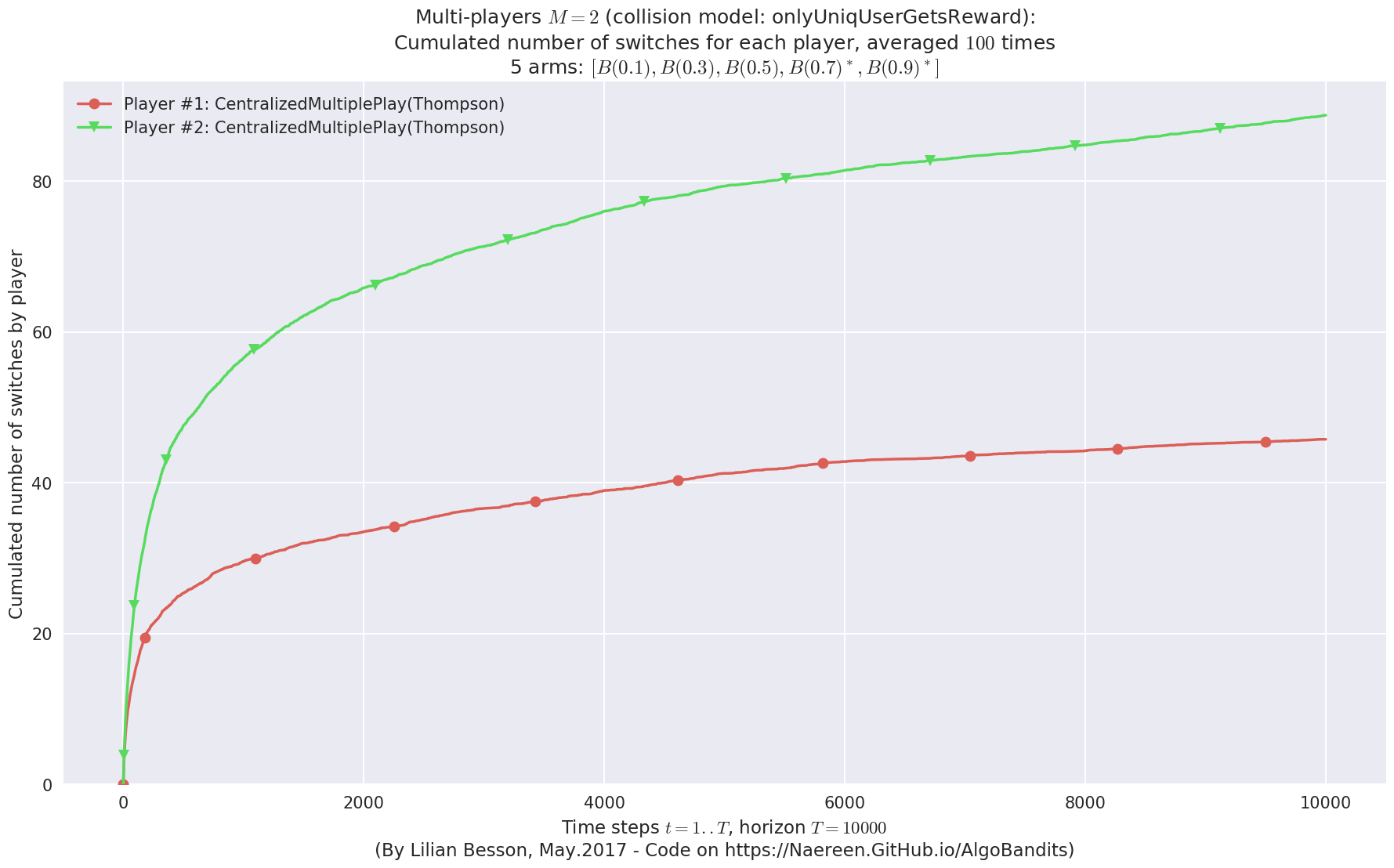

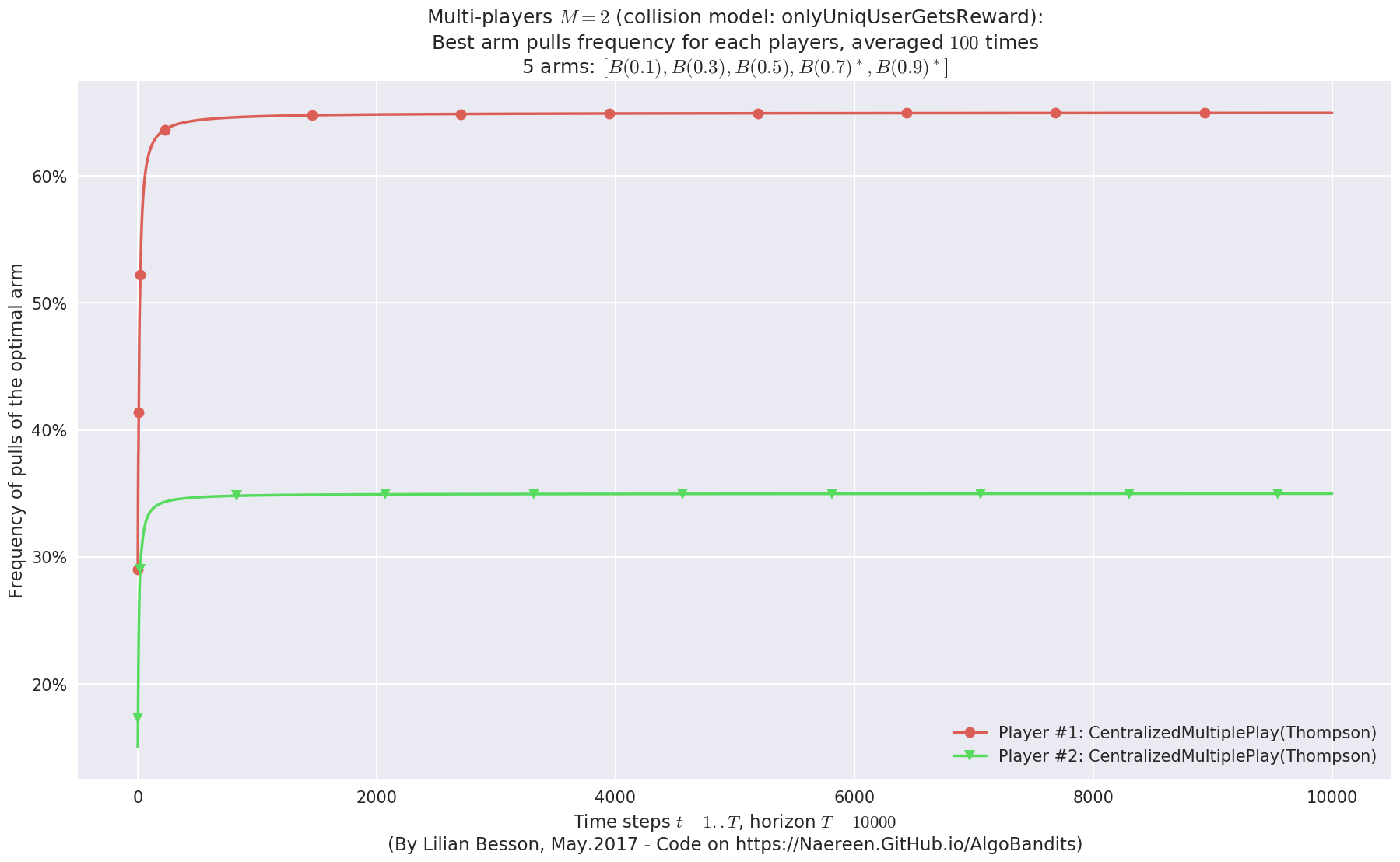

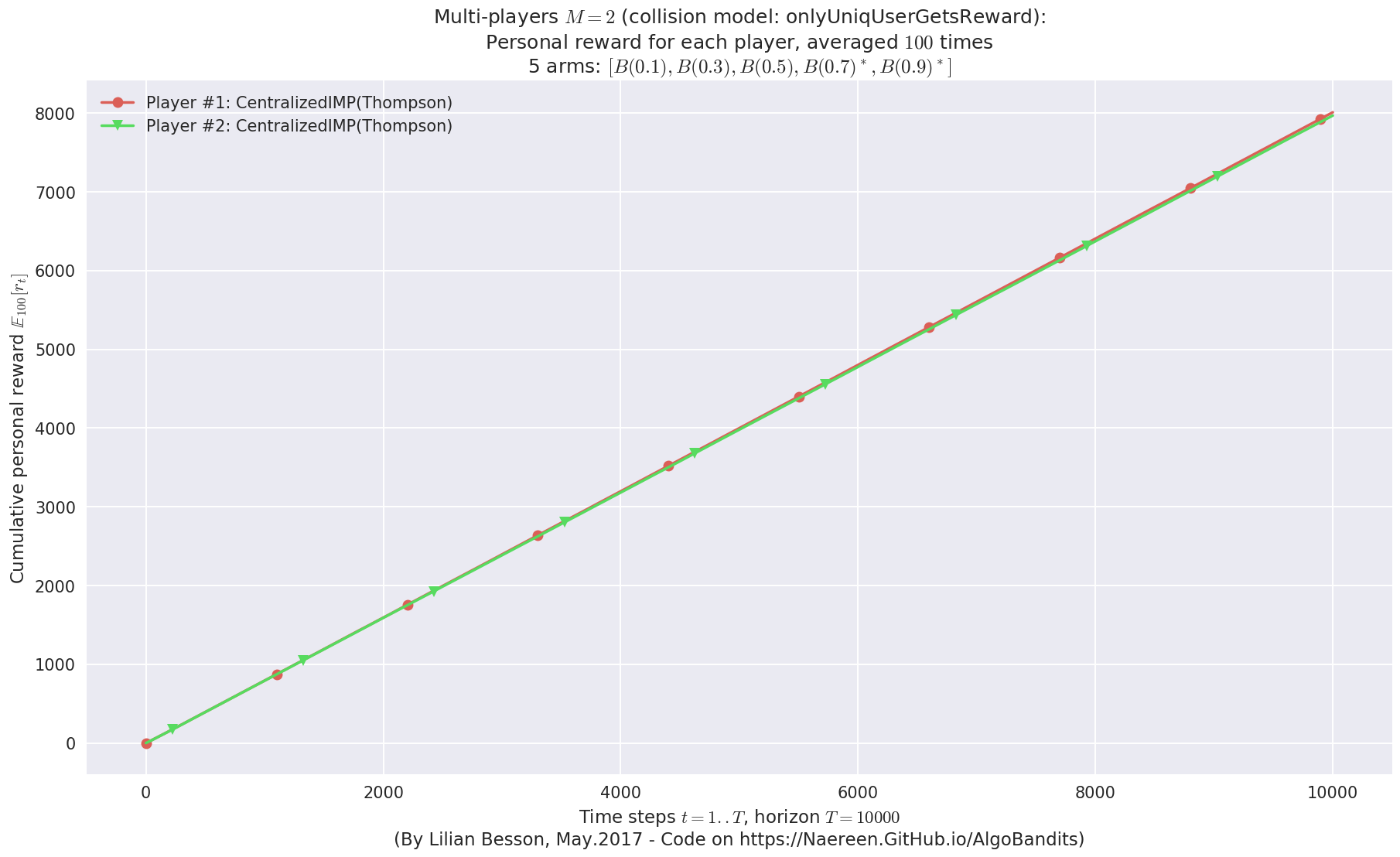

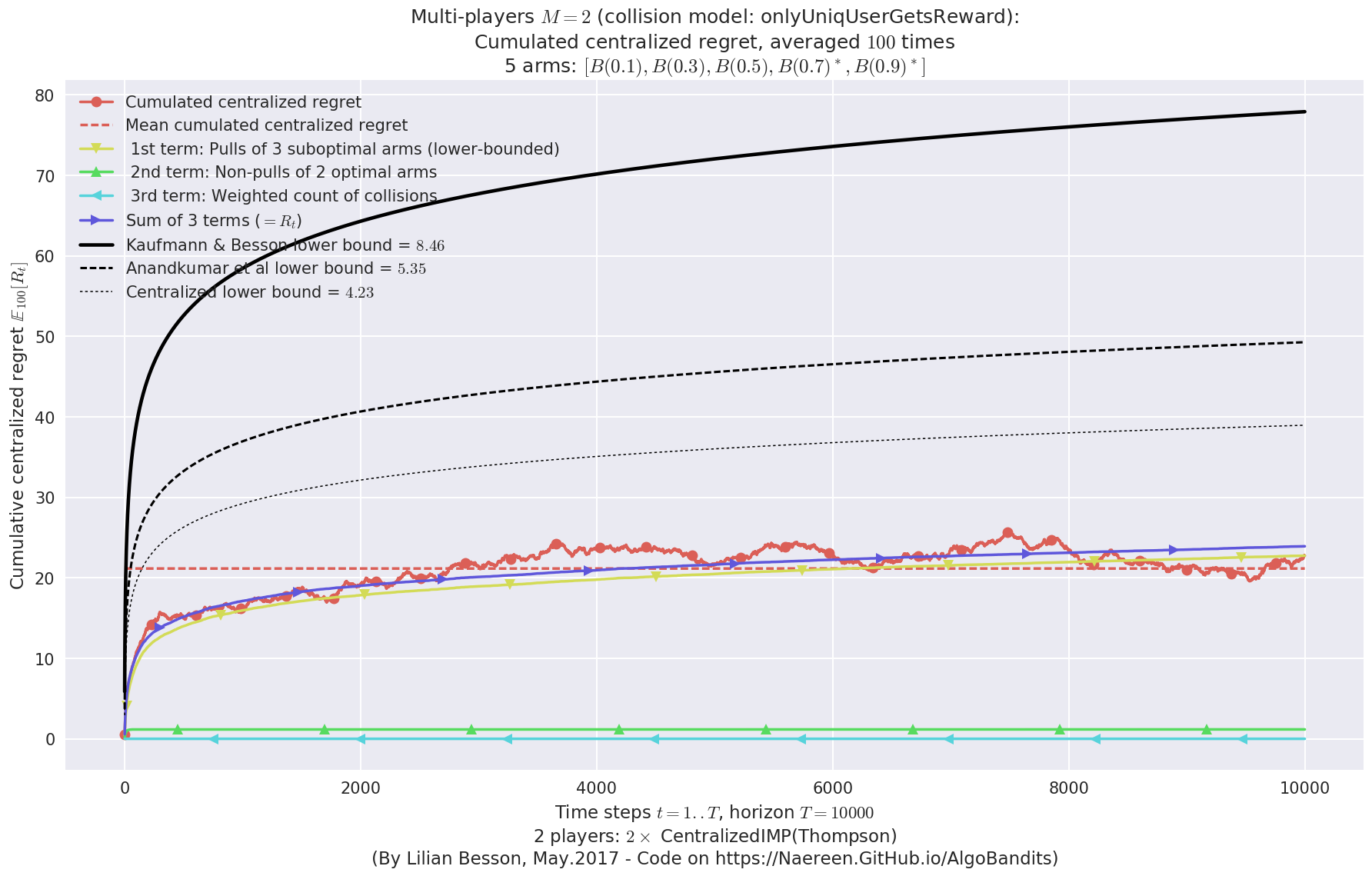

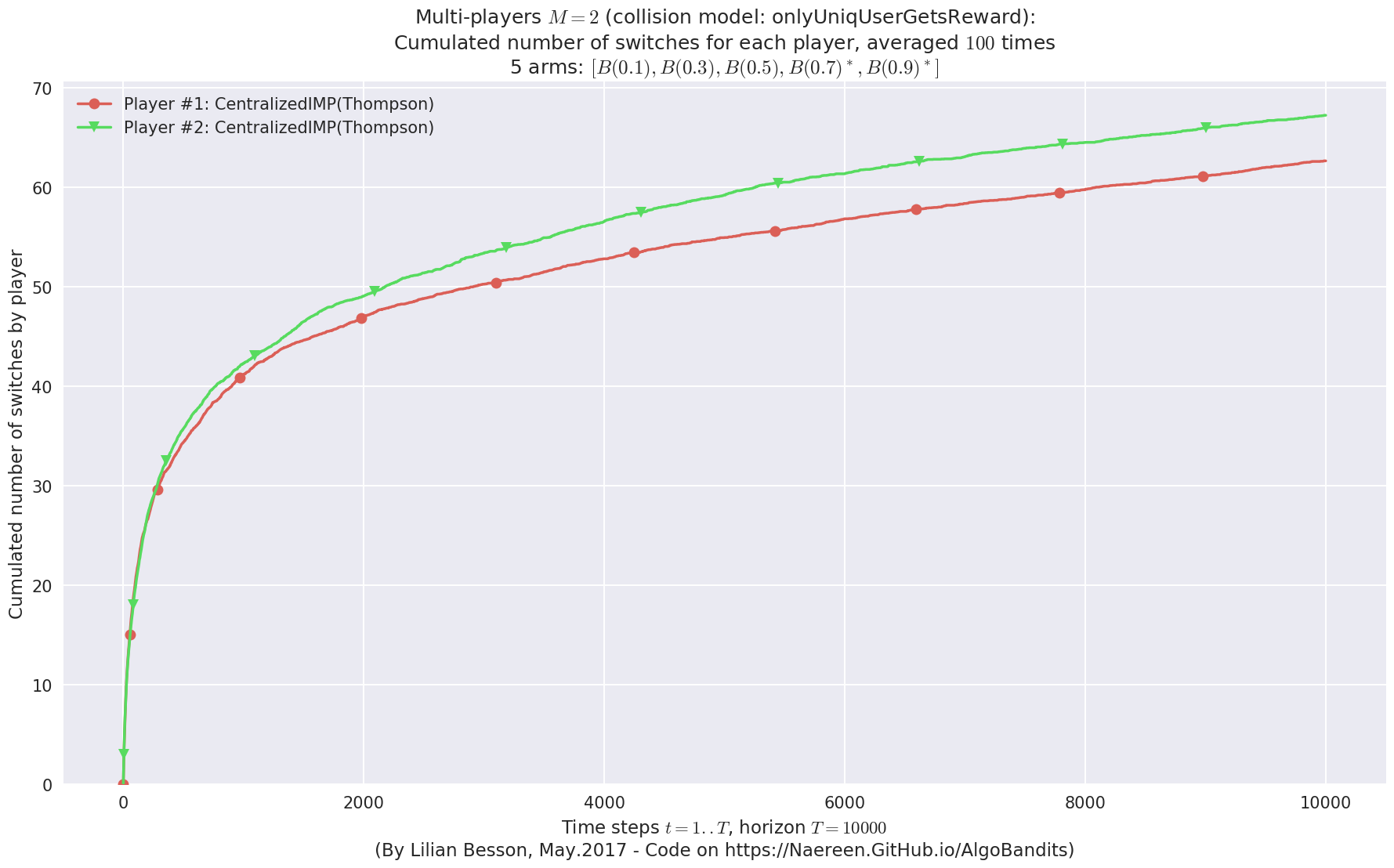

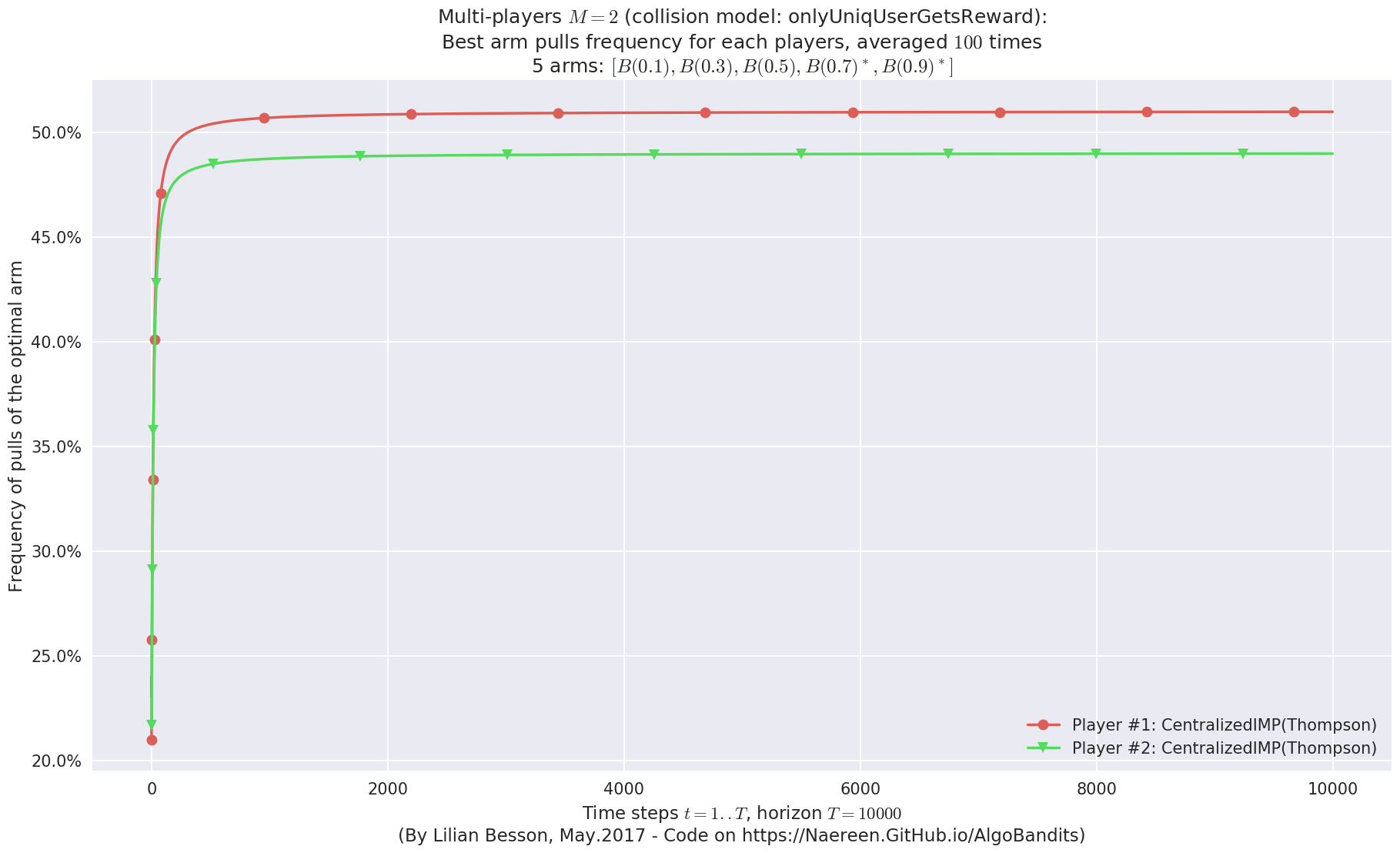

Second problem

\(\mu = [0.1, 0.3, 0.5, 0.7, 0.9]\) is an easier Bernoulli problem, with a larger gap \(\Delta = 0.2\) between consecutive arms.
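For M players, the classical centralized lower bound of Anantharam et al. replaces \(\mu^*\) by the M-th best mean \(\mu^*_M\) and sums only over the arms outside the M best: \(\sum_{k} (\mu^*_M - \mu_k) / \mathrm{kl}(\mu_k, \mu^*_M)\). The sketch below assumes this form and uses hypothetical helper names. For M = 2 it gives about 9, 4.23 and 1.41 on the three problems, the values printed on the "Anandtharam et al. centralized lower-bound" lines, and it quantifies why the second problem is easier than the first.

```python
import math


def kl_bernoulli(p, q, eps=1e-15):
    """Kullback-Leibler divergence kl(p, q) between Bernoulli(p) and Bernoulli(q)."""
    p = min(max(p, eps), 1 - eps)
    q = min(max(q, eps), 1 - eps)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def centralized_lower_bound(means, nb_players):
    """Sum over the arms outside the M best of (mu_M* - mu_k) / kl(mu_k, mu_M*)."""
    sorted_means = sorted(means, reverse=True)
    mu_m = sorted_means[nb_players - 1]   # M-th best mean
    worse = sorted_means[nb_players:]     # arms an oracle would never pull
    return sum((mu_m - mu) / kl_bernoulli(mu, mu_m) for mu in worse if mu < mu_m)


for mu in ([0.3, 0.4, 0.5, 0.6, 0.7],
           [0.1, 0.3, 0.5, 0.7, 0.9],
           [0.005, 0.01, 0.015, 0.84, 0.85]):
    print(mu, round(centralized_lower_bound(mu, nb_players=2), 2))
```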

In [24]:

for playersId in tqdm(range(len(evs)), desc="Policies"):
    evaluation = evaluators[1][playersId]
    plotAll(evaluation, 1)

Final ranking for this environment #1 :

- Player #2, '#2<CentralizedMultiplePlay(UCB($\alpha=1$))>' was ranked 1 / 2 for this simulation (last rewards = 8005.87).

- Player #1, '#1<CentralizedMultiplePlay(UCB($\alpha=1$))>' was ranked 2 / 2 for this simulation (last rewards = 7883.31).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 4.23 ...

- For 2 players, our lower bound gave = 8.46 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 5.35 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- [Anandtharam et al] centralized lowerbound = 8.46,

- Our decentralized lowerbound = 5.35,

- [Anandkumar et al] decentralized lowerbound = 4.23

Final ranking for this environment #1 :

- Player #2, '#2<CentralizedIMP(UCB($\alpha=1$))>' was ranked 1 / 2 for this simulation (last rewards = 8082.85).

- Player #1, '#1<CentralizedIMP(UCB($\alpha=1$))>' was ranked 2 / 2 for this simulation (last rewards = 7794.39).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 4.23 ...

- For 2 players, our lower bound gave = 8.46 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 5.35 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- [Anandtharam et al] centralized lowerbound = 8.46,

- Our decentralized lowerbound = 5.35,

- [Anandkumar et al] decentralized lowerbound = 4.23

Final ranking for this environment #1 :

- Player #1, '#1<CentralizedMultiplePlay(Thompson)>' was ranked 1 / 2 for this simulation (last rewards = 8248.81).

- Player #2, '#2<CentralizedMultiplePlay(Thompson)>' was ranked 2 / 2 for this simulation (last rewards = 7637.28).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 4.23 ...

- For 2 players, our lower bound gave = 8.46 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 5.35 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- [Anandtharam et al] centralized lowerbound = 8.46,

- Our decentralized lowerbound = 5.35,

- [Anandkumar et al] decentralized lowerbound = 4.23

Final ranking for this environment #1 :

- Player #1, '#1<CentralizedIMP(Thompson)>' was ranked 1 / 2 for this simulation (last rewards = 7970).

- Player #2, '#2<CentralizedIMP(Thompson)>' was ranked 2 / 2 for this simulation (last rewards = 7929.56).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 4.23 ...

- For 2 players, our lower bound gave = 8.46 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 5.35 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- [Anandtharam et al] centralized lowerbound = 8.46,

- Our decentralized lowerbound = 5.35,

- [Anandkumar et al] decentralized lowerbound = 4.23

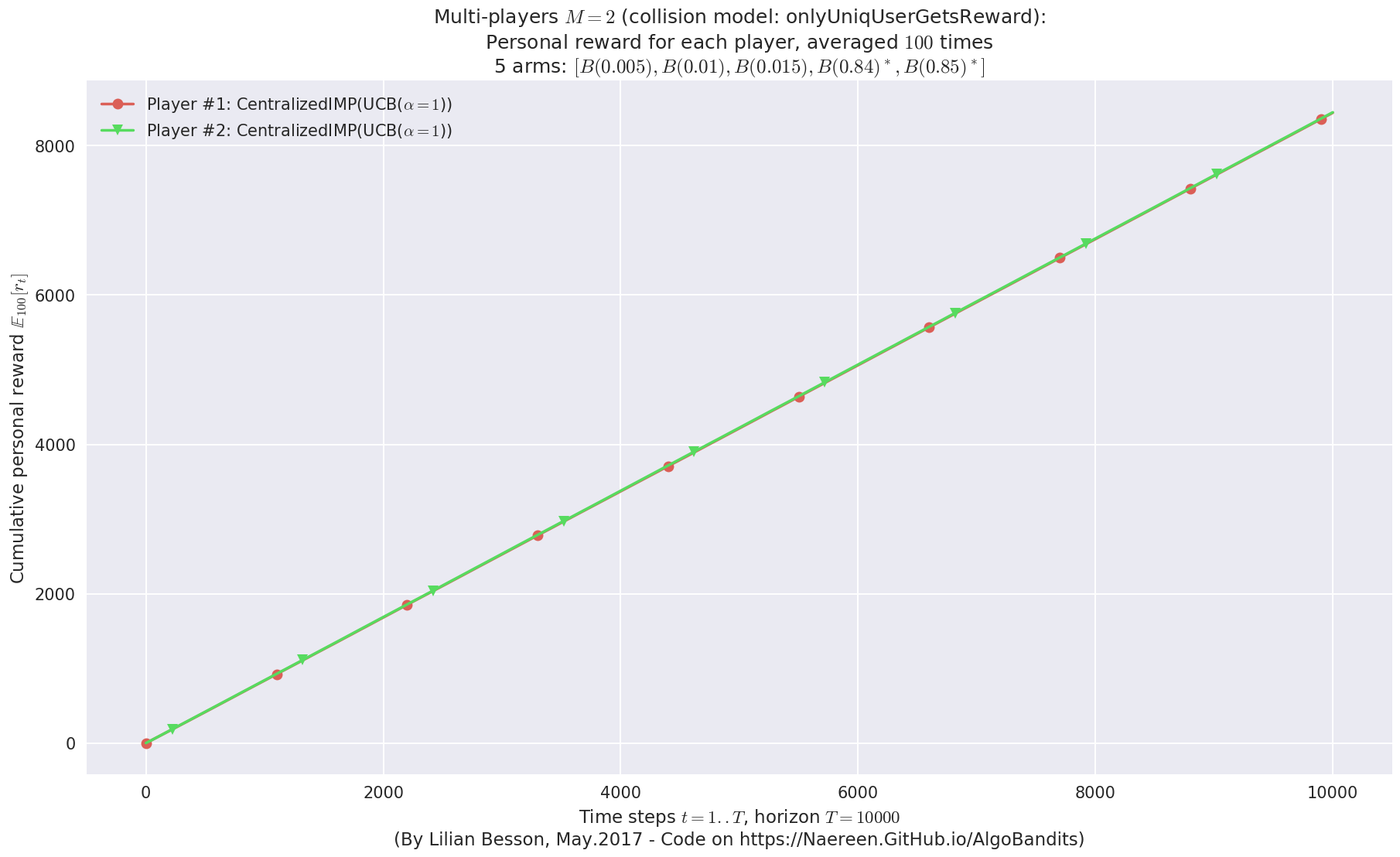

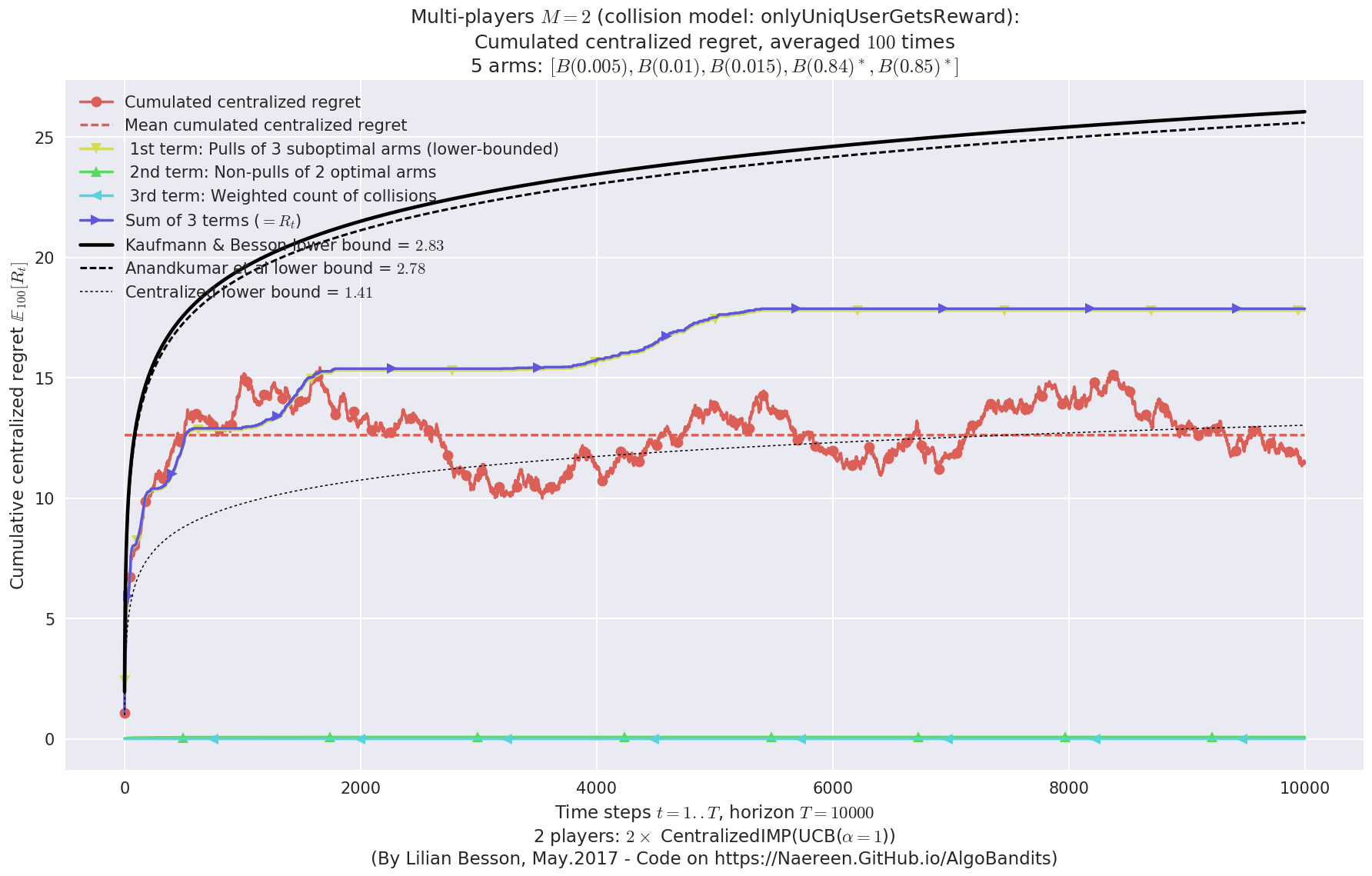

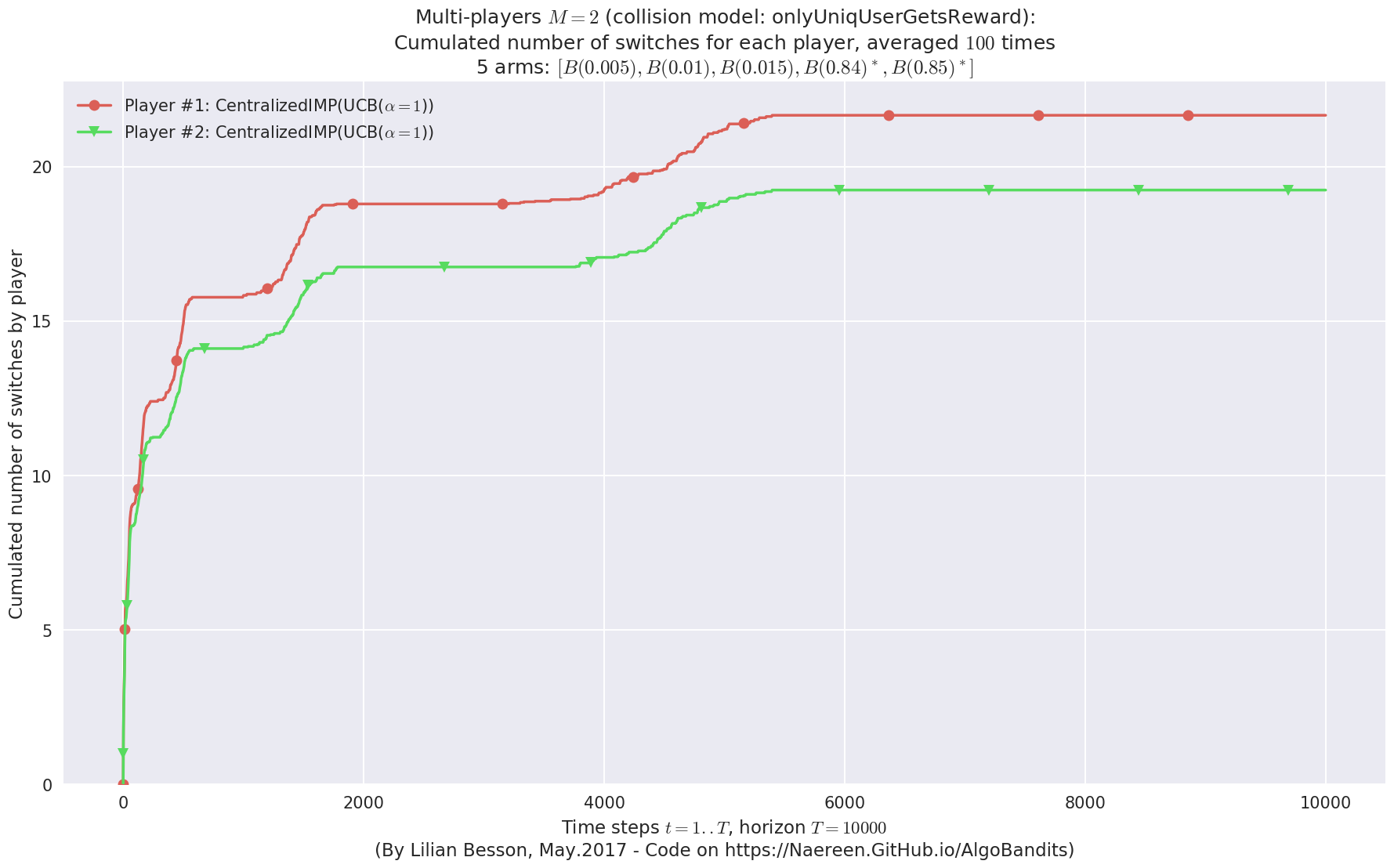

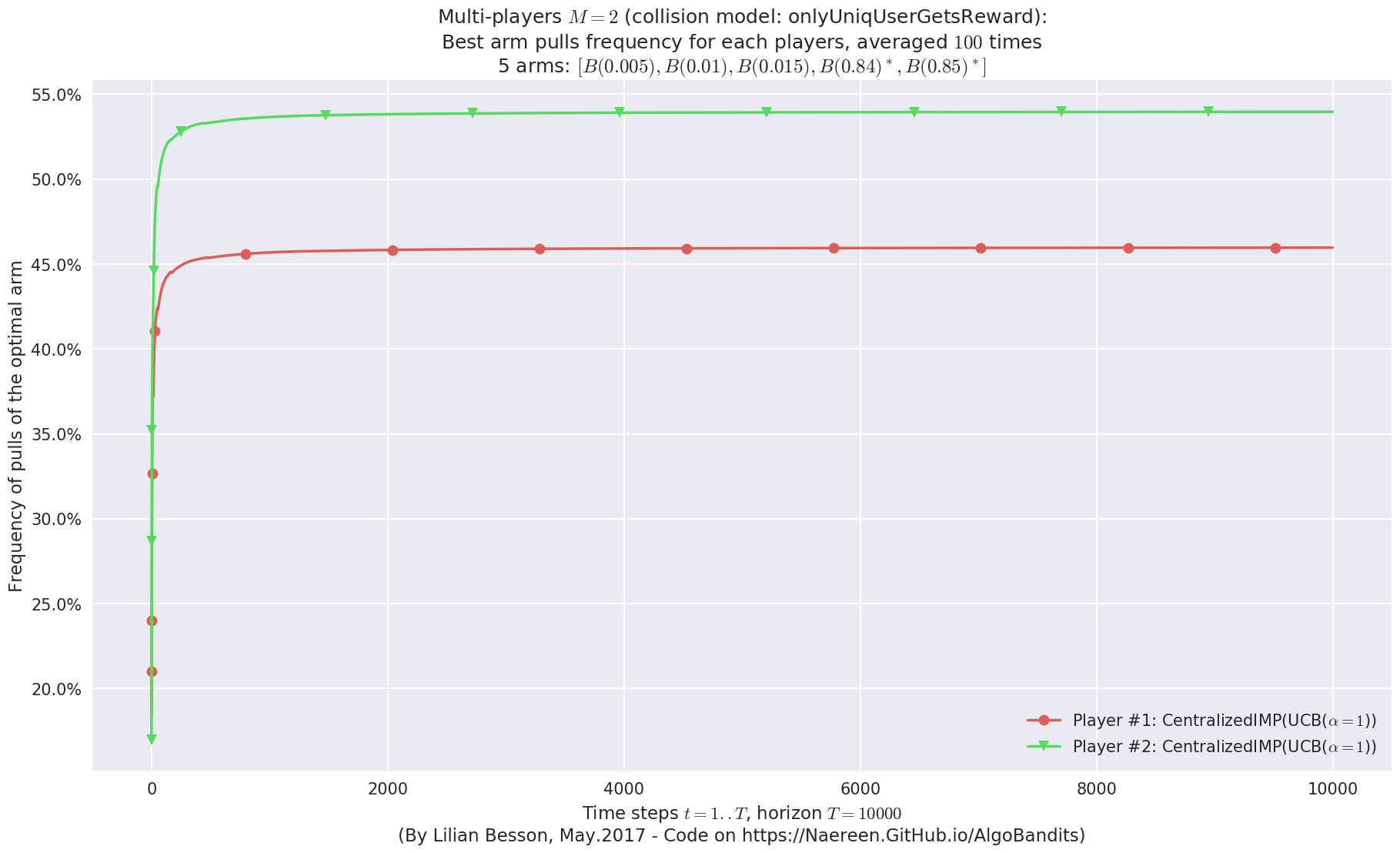

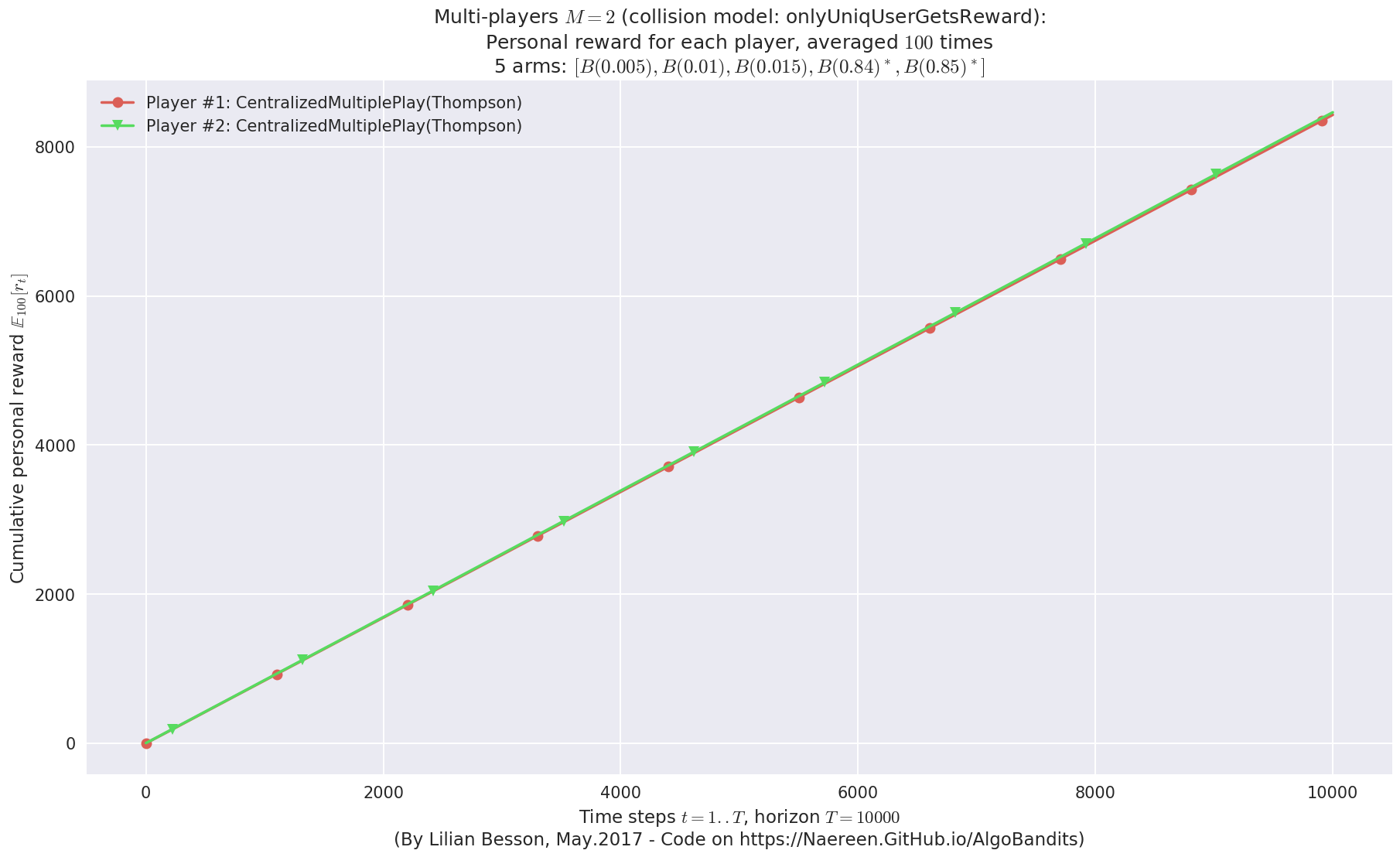

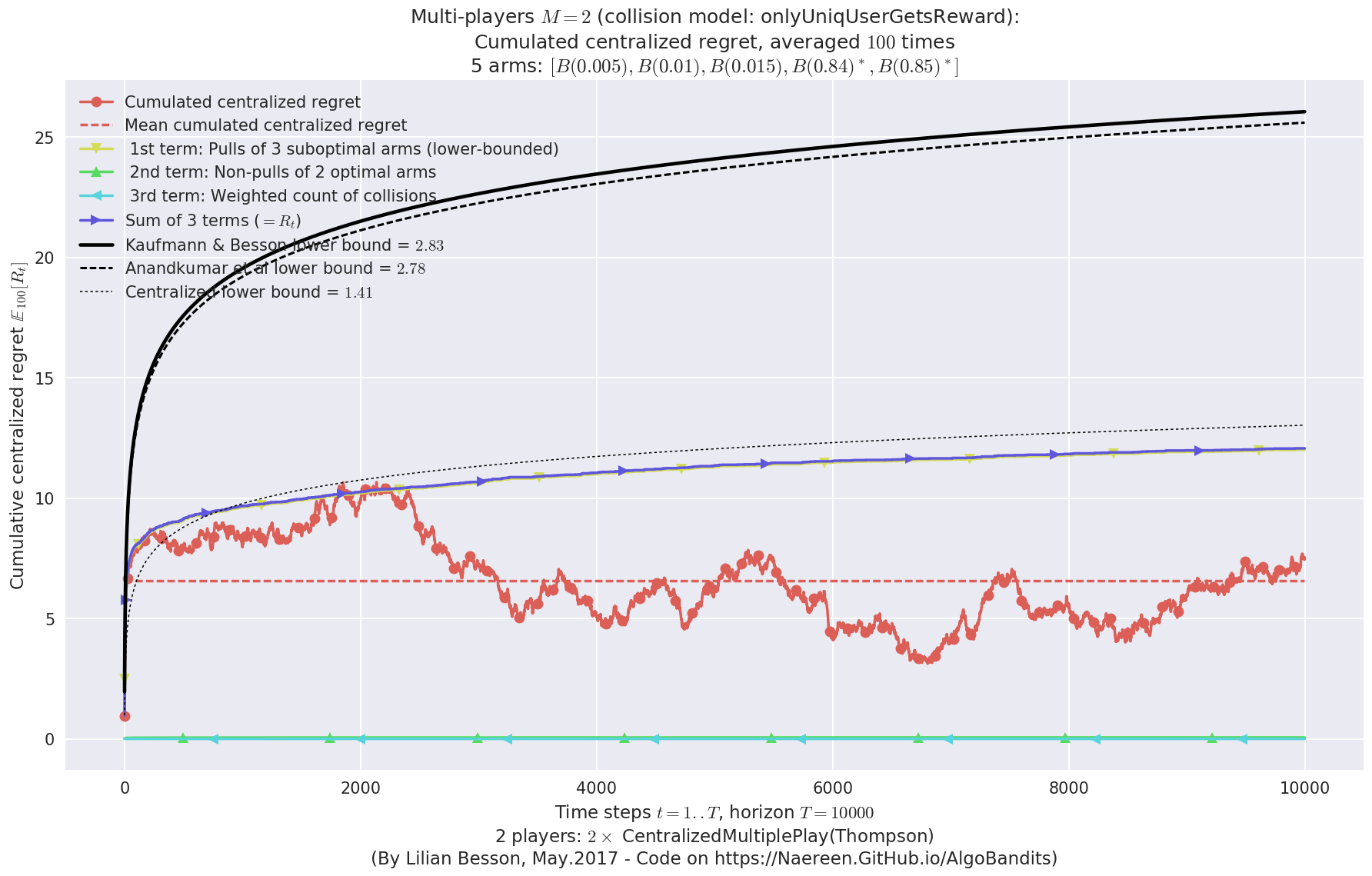

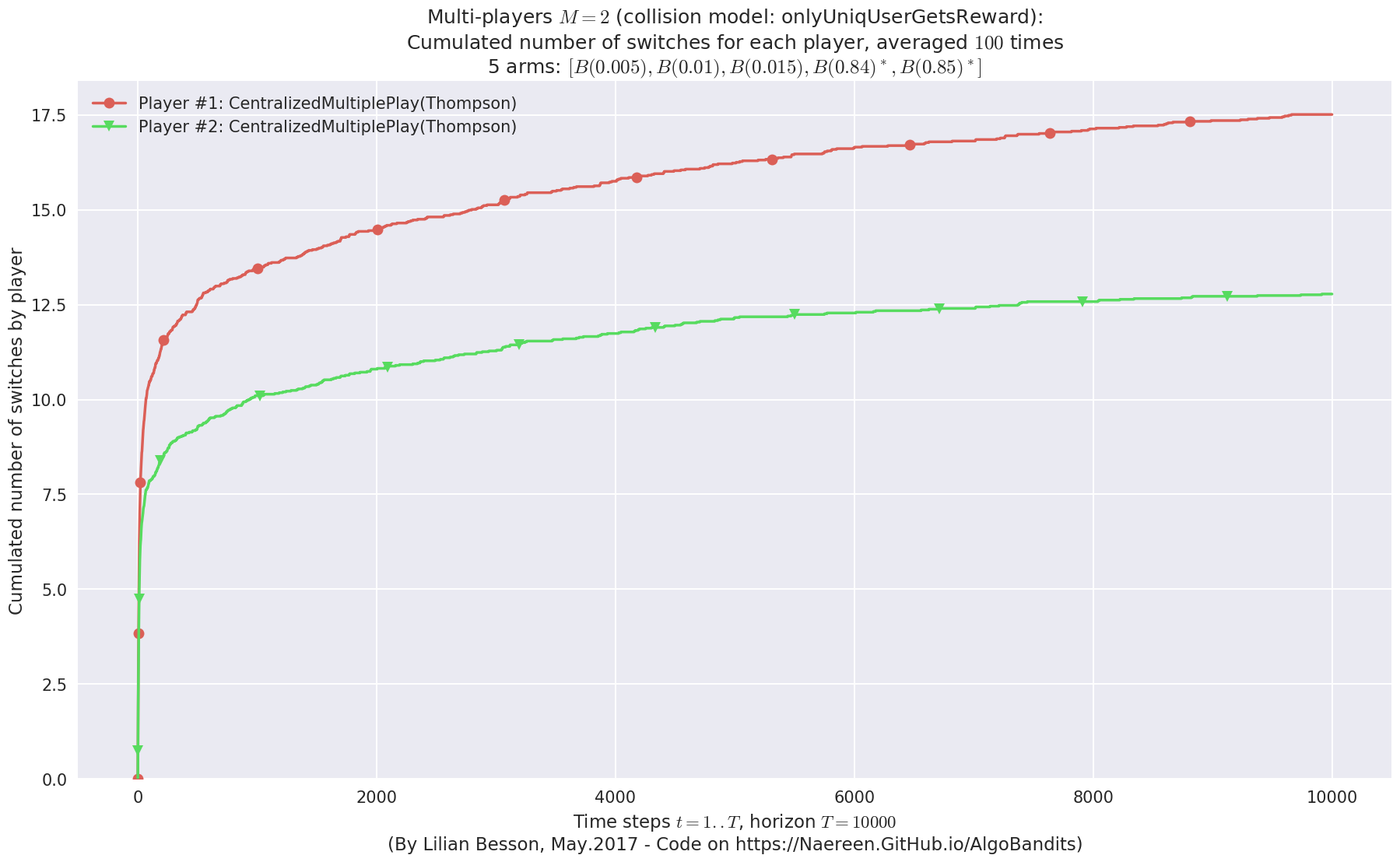

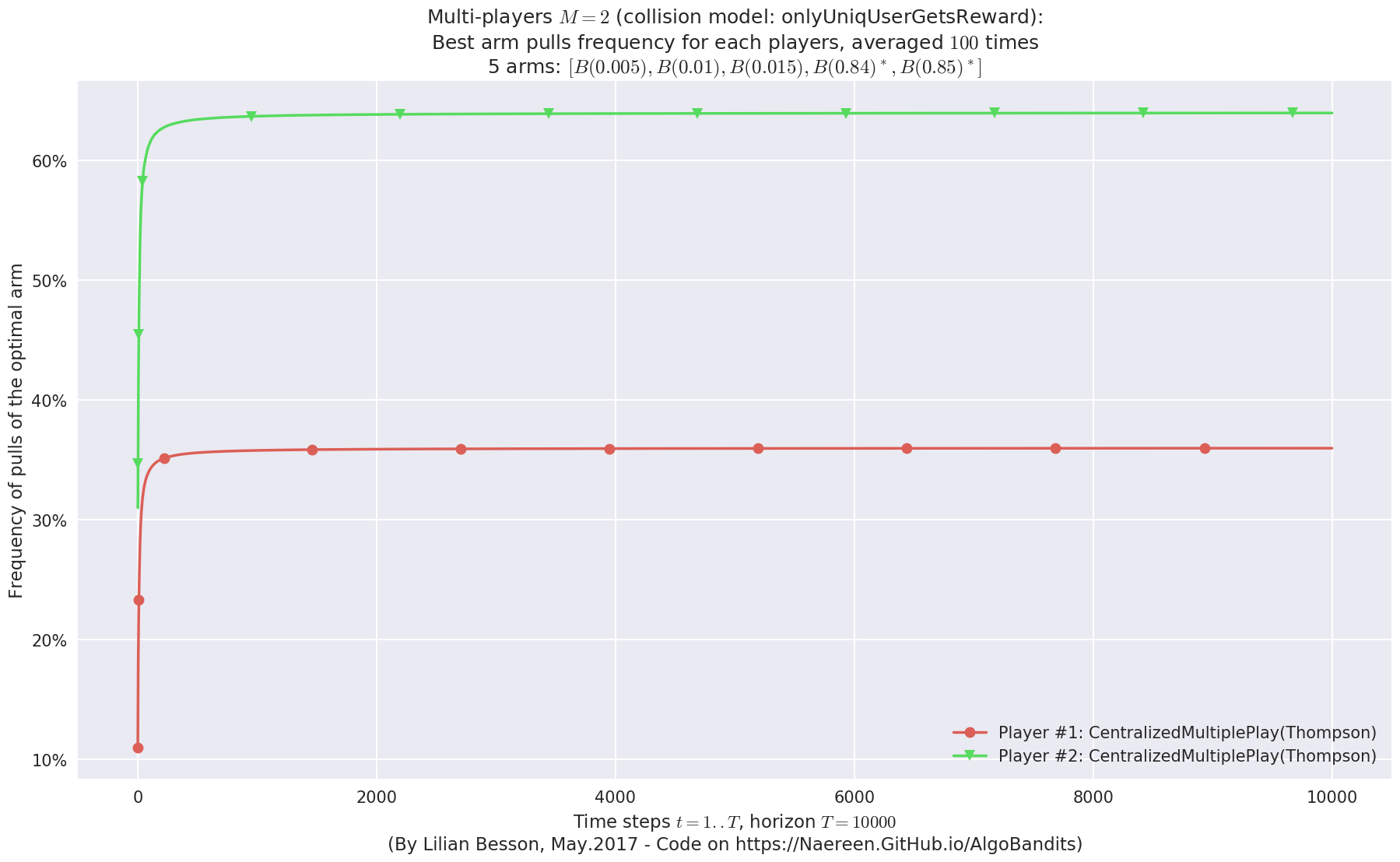

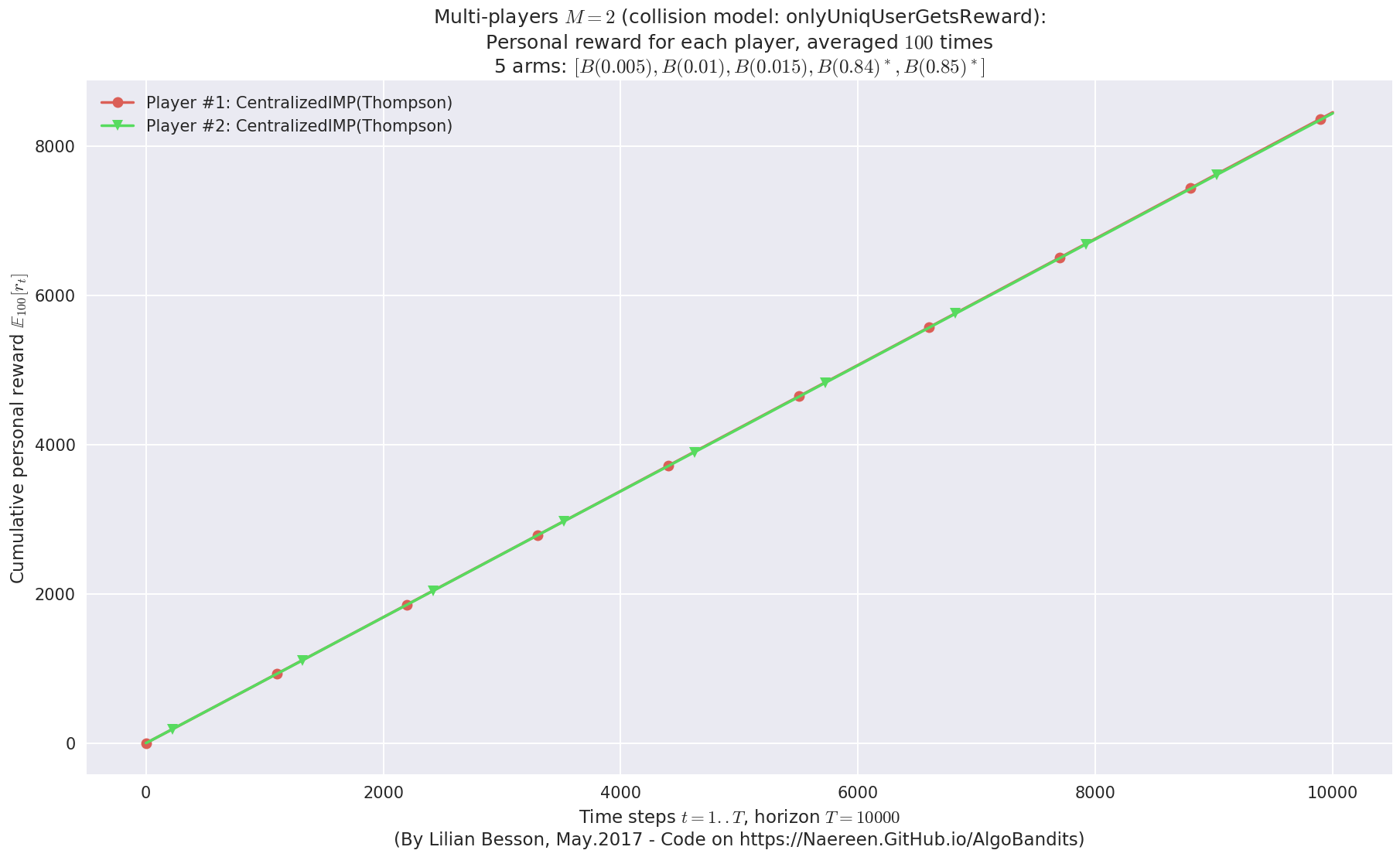

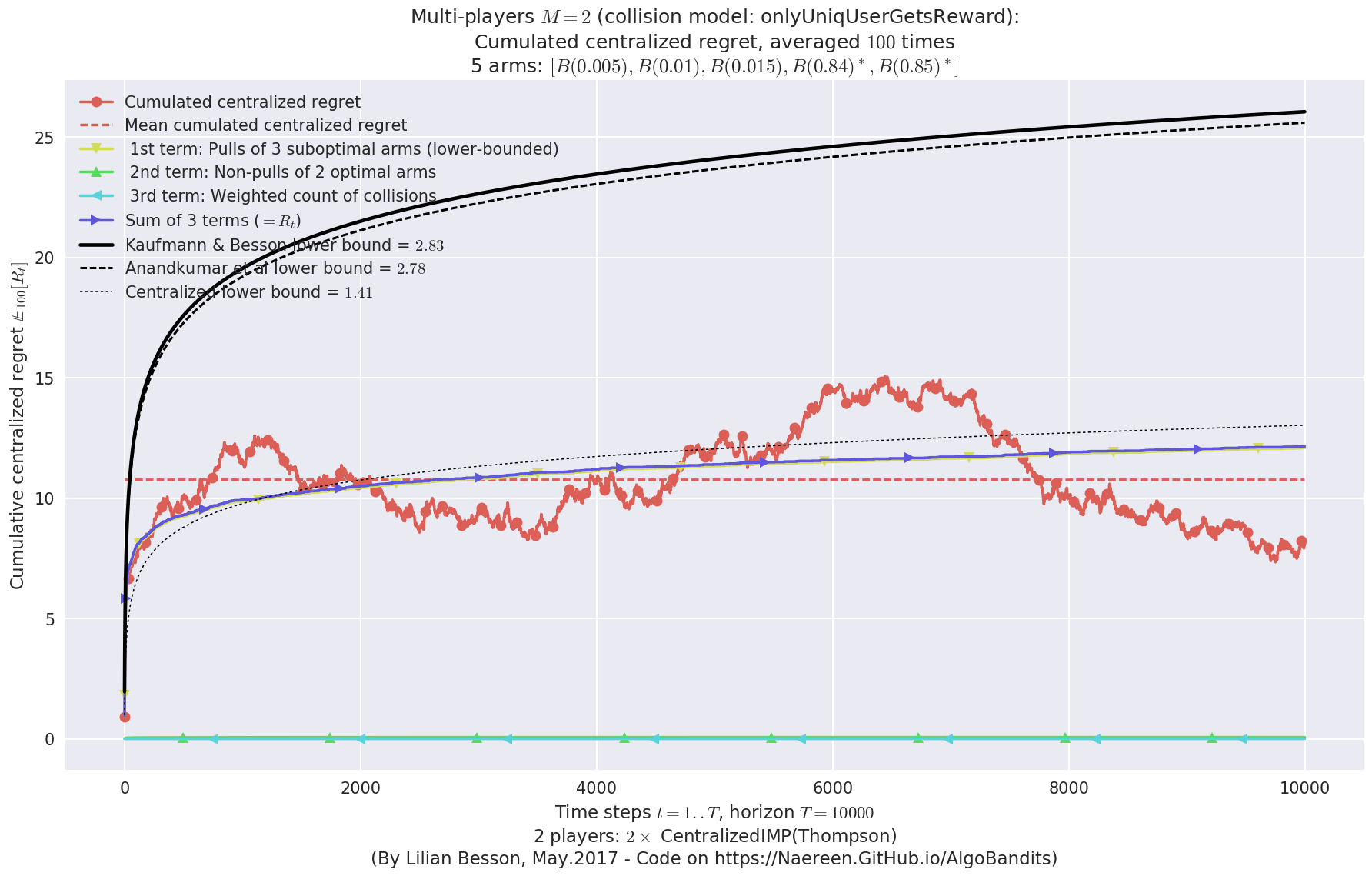

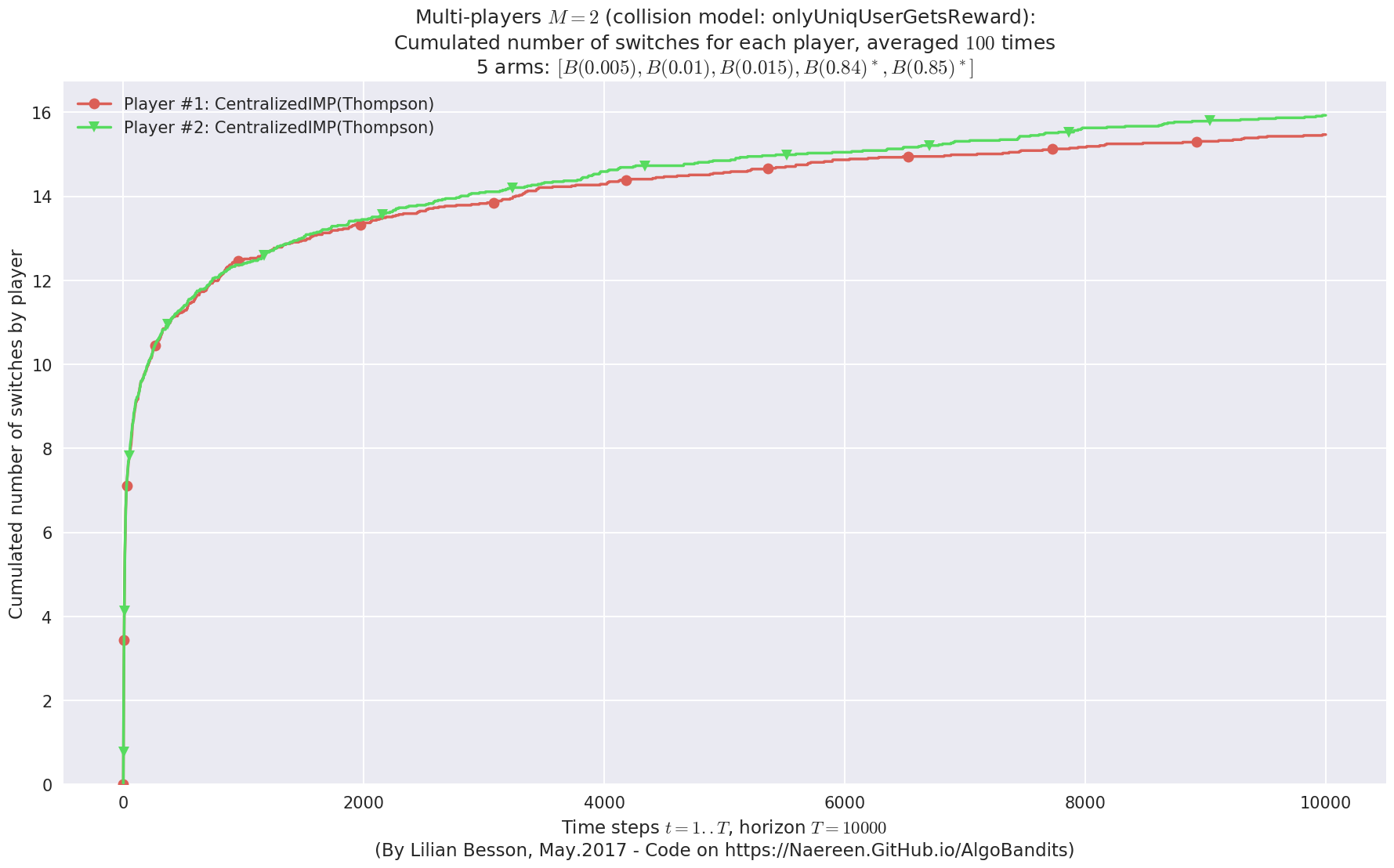

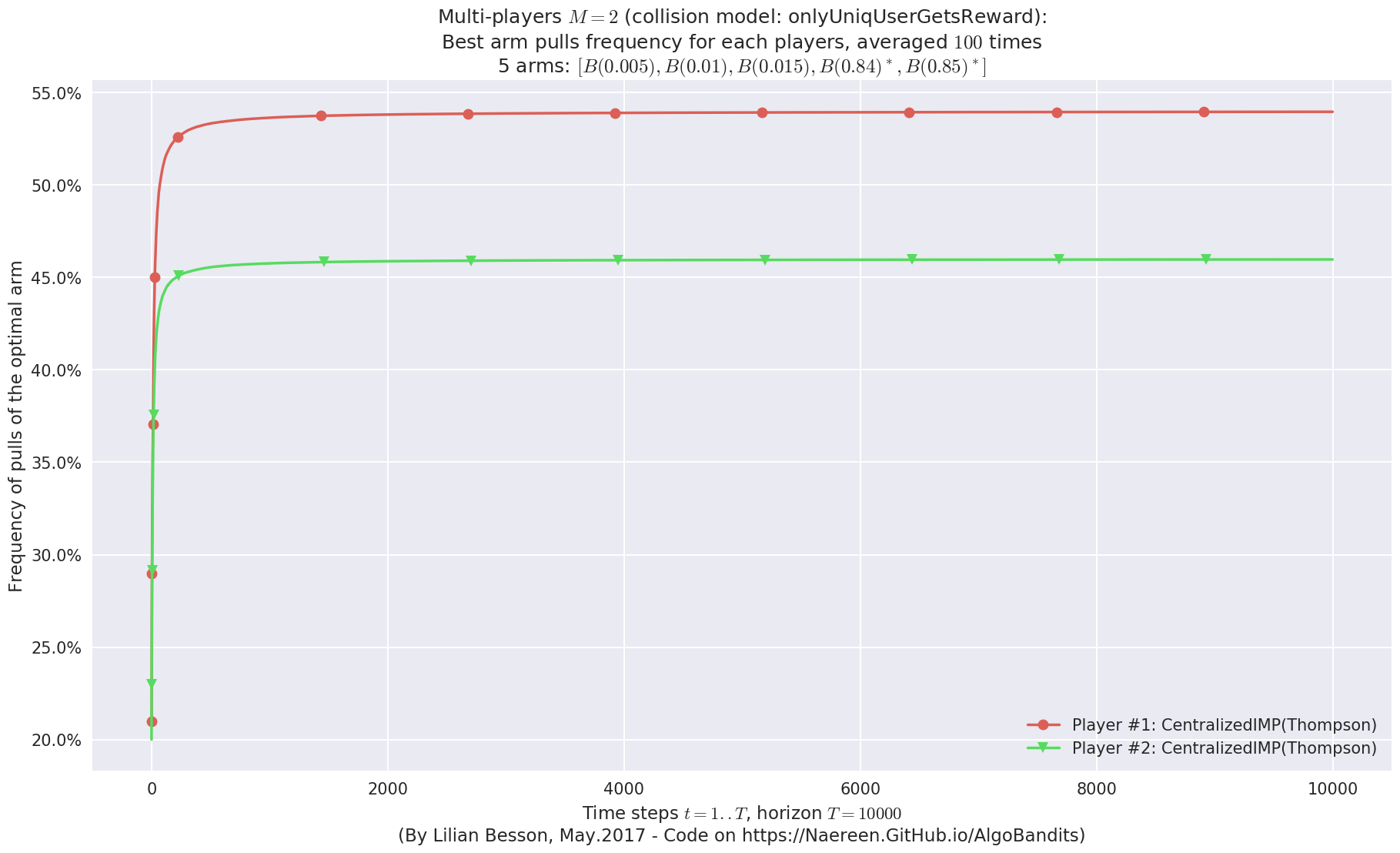

Third problem

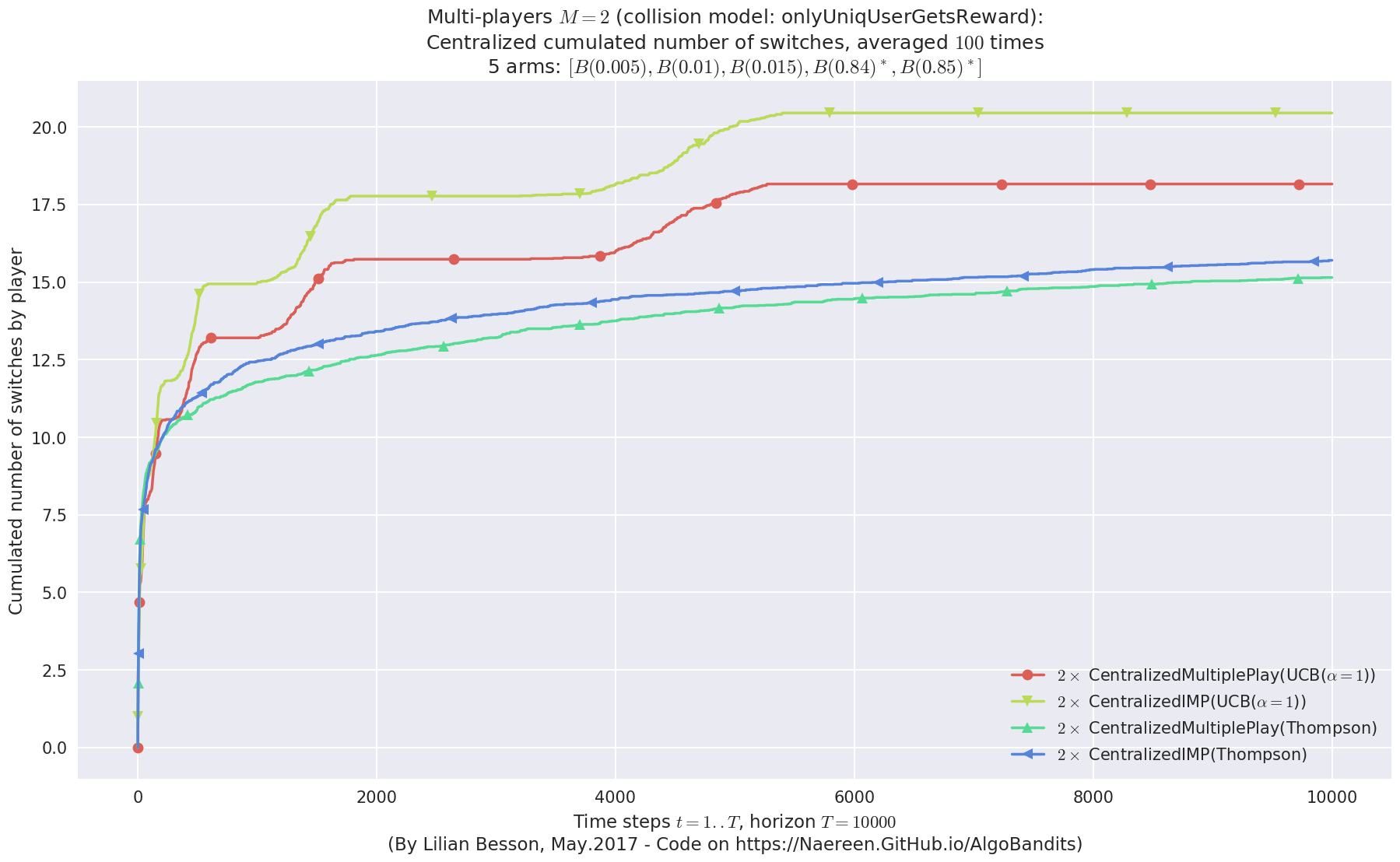

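\(\mu = [0.005, 0.01, 0.015, 0.84, 0.85]\) is a harder Bernoulli problem for a single player: its Lai & Robbins constant is \(C(\mu) = 27.3\), compared with 9.46 and 3.12 for the first two problems, because the two best arms are only 0.01 apart. With 2 players both of these arms are optimal, and the huge gap between them and the three suboptimal arms makes the lower bounds printed for 2 players (1.41, 2.83 and 2.78) the smallest of the three problems.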
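Splitting the single-player Lai & Robbins constant of this problem into one term per suboptimal arm shows where the difficulty comes from. The sketch assumes the same Bernoulli kl form \((\mu^* - \mu_k) / \mathrm{kl}(\mu_k, \mu^*)\) as the printed C(mu), and the variable names are hypothetical. The arm 0.84 alone contributes about 26 of the total 27.3, while each of the three arms close to 0 contributes less than 0.5.

```python
import math


def kl_bernoulli(p, q, eps=1e-15):
    """Kullback-Leibler divergence kl(p, q) between Bernoulli(p) and Bernoulli(q)."""
    p = min(max(p, eps), 1 - eps)
    q = min(max(q, eps), 1 - eps)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


mu = [0.005, 0.01, 0.015, 0.84, 0.85]
best = max(mu)
# One term of the constant per suboptimal arm.
terms = {m: (best - m) / kl_bernoulli(m, best) for m in mu if m < best}
for m, term in terms.items():
    print(f"arm {m}: {term:.2f}")
print(f"C(mu) = {sum(terms.values()):.2f}")
```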

In [25]:

for playersId in tqdm(range(len(evs)), desc="Policies"):
    evaluation = evaluators[2][playersId]
    plotAll(evaluation, 2)

Final ranking for this environment #2 :

- Player #2, '#2<CentralizedMultiplePlay(UCB($\alpha=1$))>' was ranked 1 / 2 for this simulation (last rewards = 8400.78).

- Player #1, '#1<CentralizedMultiplePlay(UCB($\alpha=1$))>' was ranked 2 / 2 for this simulation (last rewards = 8396.29).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 1.41 ...

- For 2 players, our lower bound gave = 2.83 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 2.78 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- [Anandtharam et al] centralized lowerbound = 2.83,

- Our decentralized lowerbound = 2.78,

- [Anandkumar et al] decentralized lowerbound = 1.41

Final ranking for this environment #2 :

- Player #2, '#2<CentralizedIMP(UCB($\alpha=1$))>' was ranked 1 / 2 for this simulation (last rewards = 8405.84).

- Player #1, '#1<CentralizedIMP(UCB($\alpha=1$))>' was ranked 2 / 2 for this simulation (last rewards = 8399.73).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 1.41 ...

- For 2 players, our lower bound gave = 2.83 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 2.78 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- [Anandtharam et al] centralized lowerbound = 2.83,

- Our decentralized lowerbound = 2.78,

- [Anandkumar et al] decentralized lowerbound = 1.41

Final ranking for this environment #2 :

- Player #2, '#2<CentralizedMultiplePlay(Thompson)>' was ranked 1 / 2 for this simulation (last rewards = 8421.93).

- Player #1, '#1<CentralizedMultiplePlay(Thompson)>' was ranked 2 / 2 for this simulation (last rewards = 8388.24).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 1.41 ...

- For 2 players, our lower bound gave = 2.83 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 2.78 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- [Anandtharam et al] centralized lowerbound = 2.83,

- Our decentralized lowerbound = 2.78,

- [Anandkumar et al] decentralized lowerbound = 1.41

Final ranking for this environment #2 :

- Player #1, '#1<CentralizedIMP(Thompson)>' was ranked 1 / 2 for this simulation (last rewards = 8411.13).

- Player #2, '#2<CentralizedIMP(Thompson)>' was ranked 2 / 2 for this simulation (last rewards = 8398.26).

- For 2 players, Anandtharam et al. centralized lower-bound gave = 1.41 ...

- For 2 players, our lower bound gave = 2.83 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 2.78 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- [Anandtharam et al] centralized lowerbound = 2.83,

- Our decentralized lowerbound = 2.78,

- [Anandkumar et al] decentralized lowerbound = 1.41

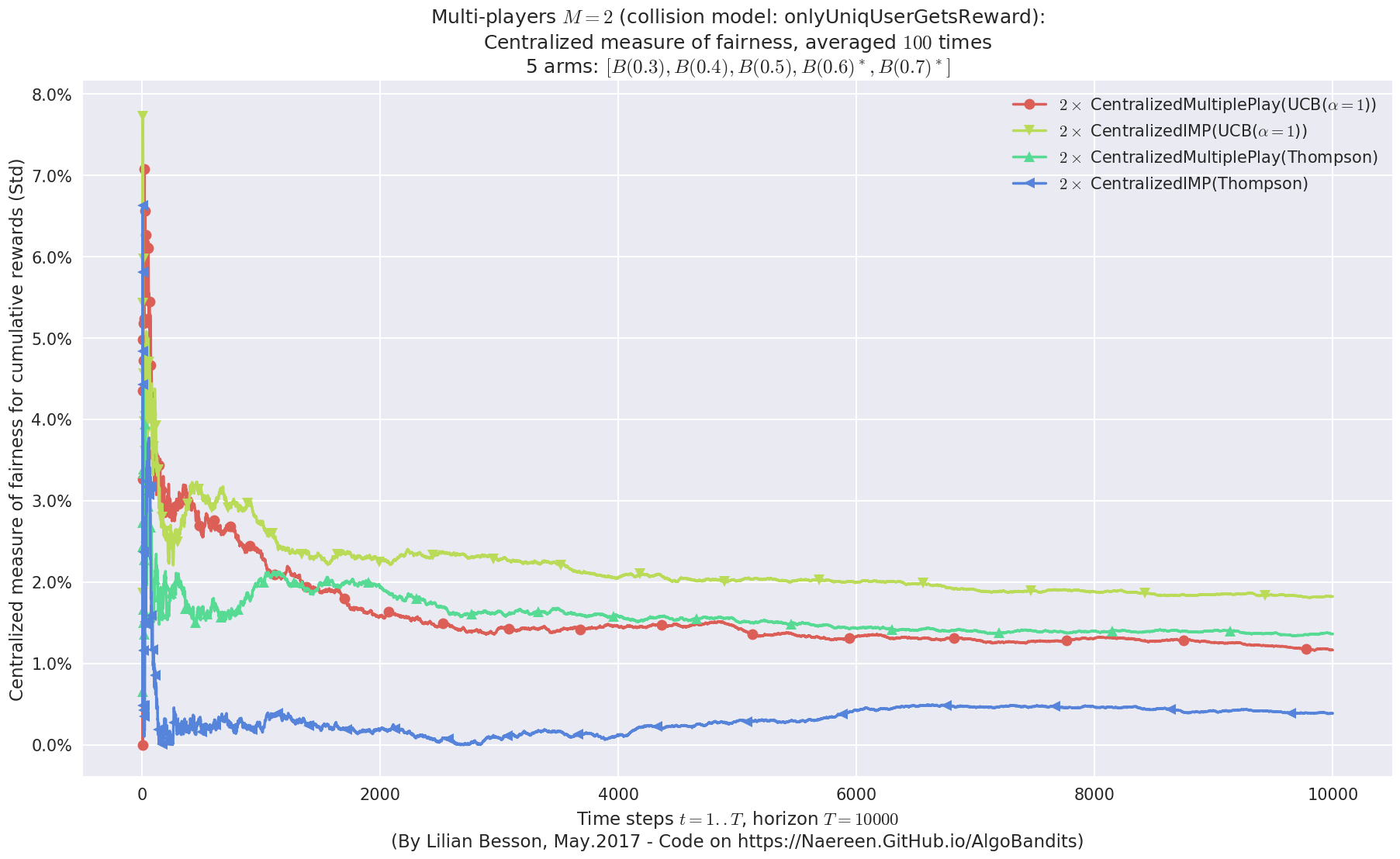

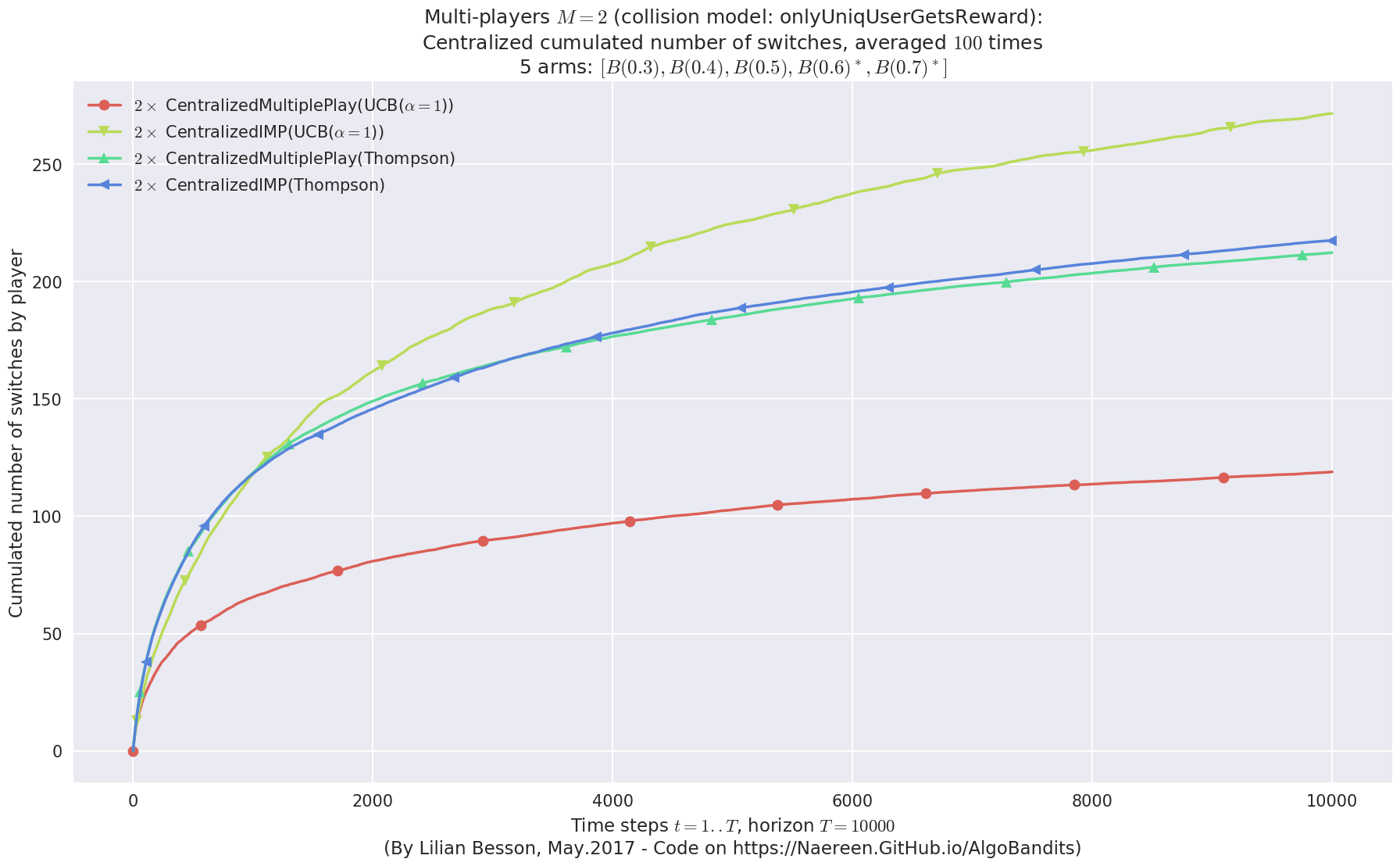

Comparing their performances

In [26]:

def plotCombined(e0, eothers, envId):
    # Centralized regret
    e0.plotRegretCentralized(envId, evaluators=eothers)
    # Fairness
    e0.plotFairness(envId, fairness="STD", evaluators=eothers)
    # Number of switches
    e0.plotNbSwitchsCentralized(envId, cumulated=True, evaluators=eothers)
    # Number of collisions - not for Centralized* policies
    #e0.plotNbCollisions(envId, cumulated=True, evaluators=eothers)
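plotNbSwitchsCentralized(envId, cumulated=True) plots how often the players change arm. Below is a minimal sketch of such a count. It assumes that a switch is any step where a player pulls a different arm than at the previous step, and that the counts are summed over players. The function cumulated_switches and the (M, T) array of choices are hypothetical, not the library's internals.

```python
import numpy as np


def cumulated_switches(choices):
    """Cumulated number of arm switches, summed over all players.

    choices : integer array of shape (M, T), arm pulled by each player at each step.
    Player i switches at step t >= 1 when choices[i, t] != choices[i, t - 1].
    """
    choices = np.asarray(choices)
    # Number of players switching at each step t = 1, ..., T - 1.
    switches = (choices[:, 1:] != choices[:, :-1]).sum(axis=0)
    # No switch is possible at the first step.
    return np.concatenate(([0], np.cumsum(switches)))


choices = np.array([[4, 4, 3, 4, 4],
                    [3, 2, 3, 3, 3]])
print(cumulated_switches(choices))  # [0 1 3 4 4]
```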

In [27]:

N = len(configuration["environment"])
for envId, env in enumerate(configuration["environment"]):
    e0, eothers = evaluators[envId][0], evaluators[envId][1:]
    plotCombined(e0, eothers, envId)

- For 2 players, Anandtharam et al. centralized lower-bound gave = 9 ...

- For 2 players, our lower bound gave = 18 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 12.1 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 9.46 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 60.00% ...

- [Anandtharam et al] centralized lowerbound = 18,

- Our decentralized lowerbound = 12.1,

- [Anandkumar et al] decentralized lowerbound = 9

- For 2 players, Anandtharam et al. centralized lower-bound gave = 4.23 ...

- For 2 players, our lower bound gave = 8.46 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 5.35 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 3.12 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 40.00% ...

- [Anandtharam et al] centralized lowerbound = 8.46,

- Our decentralized lowerbound = 5.35,

- [Anandkumar et al] decentralized lowerbound = 4.23

- For 2 players, Anandtharam et al. centralized lower-bound gave = 1.41 ...

- For 2 players, our lower bound gave = 2.83 ...

- For 2 players, the initial lower bound in Theorem 6 from [Anandkumar et al., 2010] gave = 2.78 ...

This MAB problem has:

- a [Lai & Robbins] complexity constant C(mu) = 27.3 for 1-player problem ...

- a Optimal Arm Identification factor H_OI(mu) = 29.40% ...

- [Anandtharam et al] centralized lowerbound = 2.83,

- Our decentralized lowerbound = 2.78,

- [Anandkumar et al] decentralized lowerbound = 1.41

That’s it for this demo!