Table of Contents

1 Demonstrations of Single-Player Simulations for Non-Stationary-Bandits

1.1 Creating the problem

1.1.1 Parameters for the simulation

1.1.2 Two MAB problems with Bernoulli arms and piecewise stationary means

1.1.3 Some MAB algorithms

1.1.3.1 Parameters of the algorithms

1.1.3.2 Algorithms

1.2 Checking if the problems are too hard or not

1.3 Creating the Evaluator object

1.4 Solving the problem

1.4.1 First problem

1.4.2 Second problem

1.5 Plotting the results

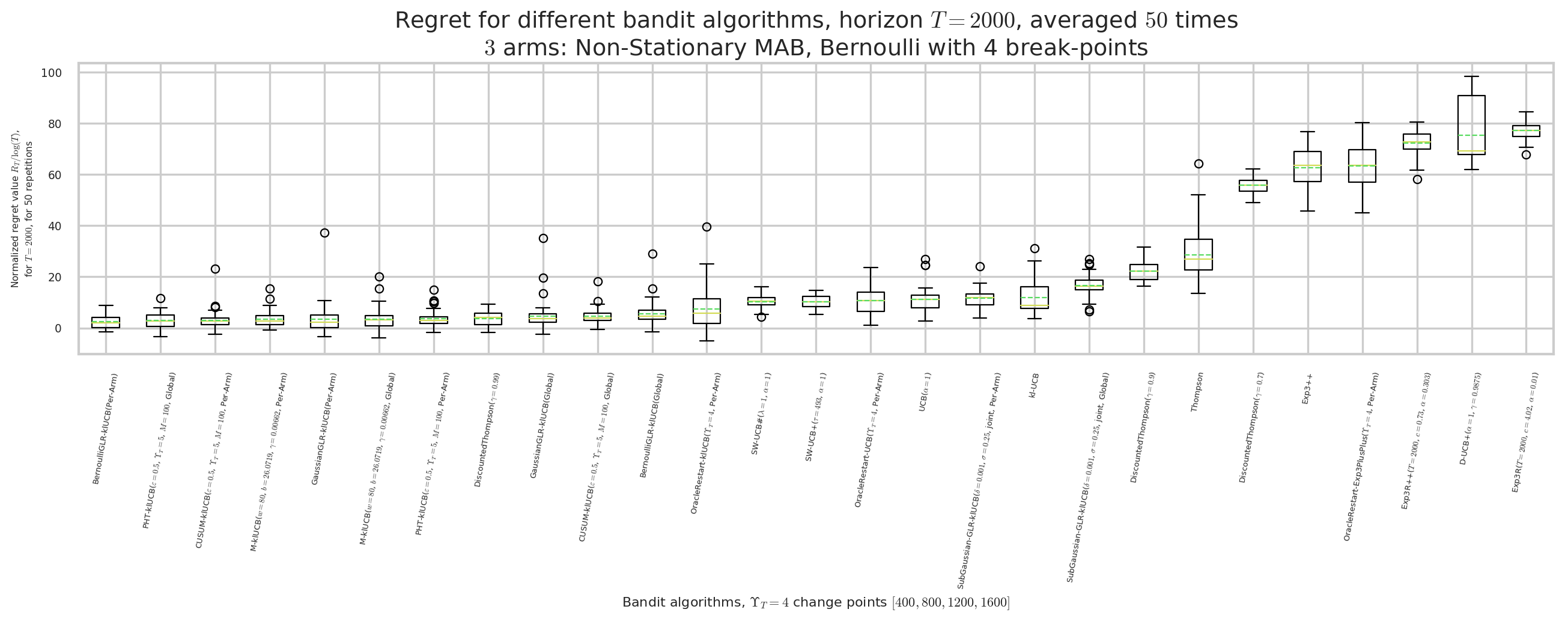

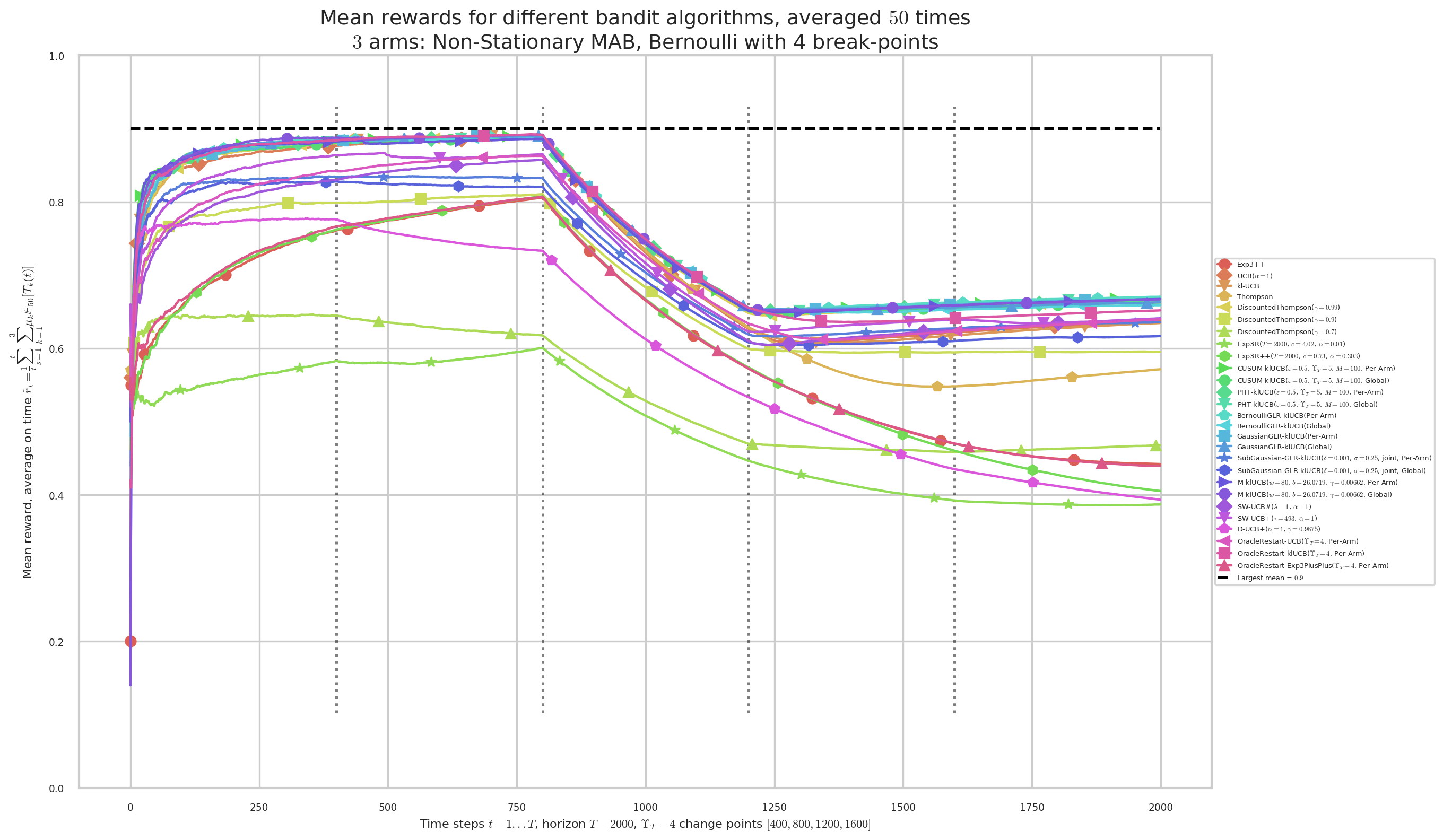

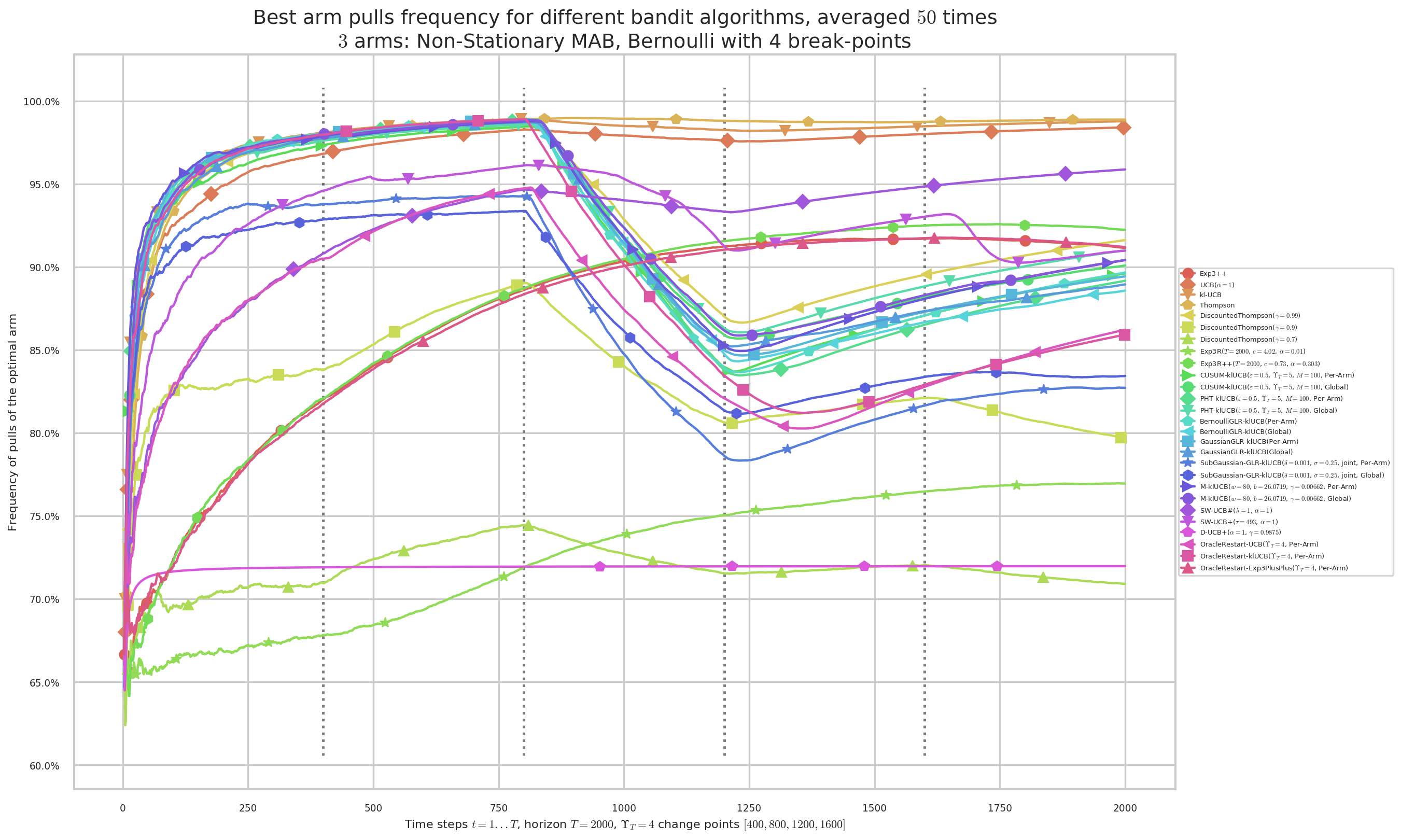

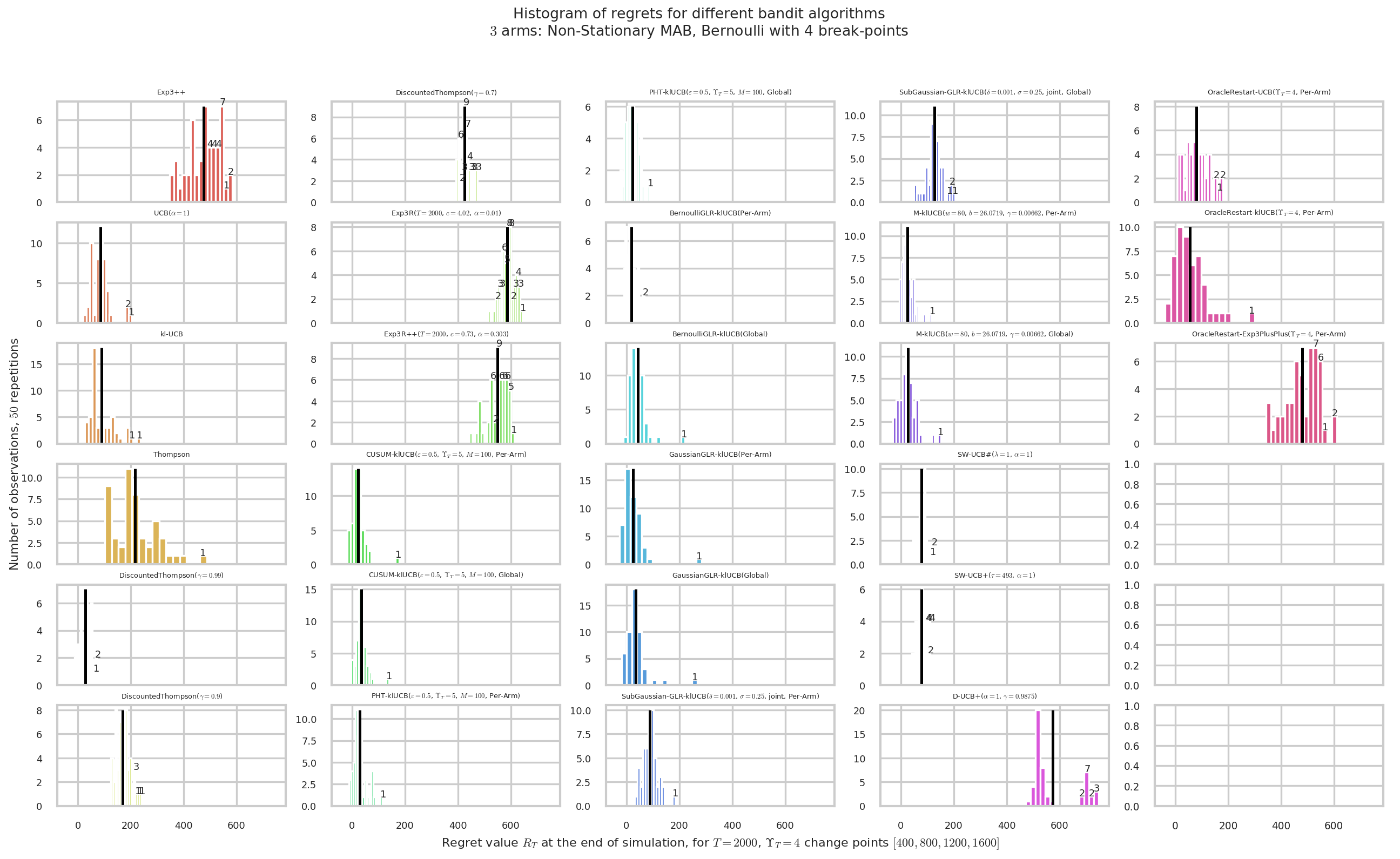

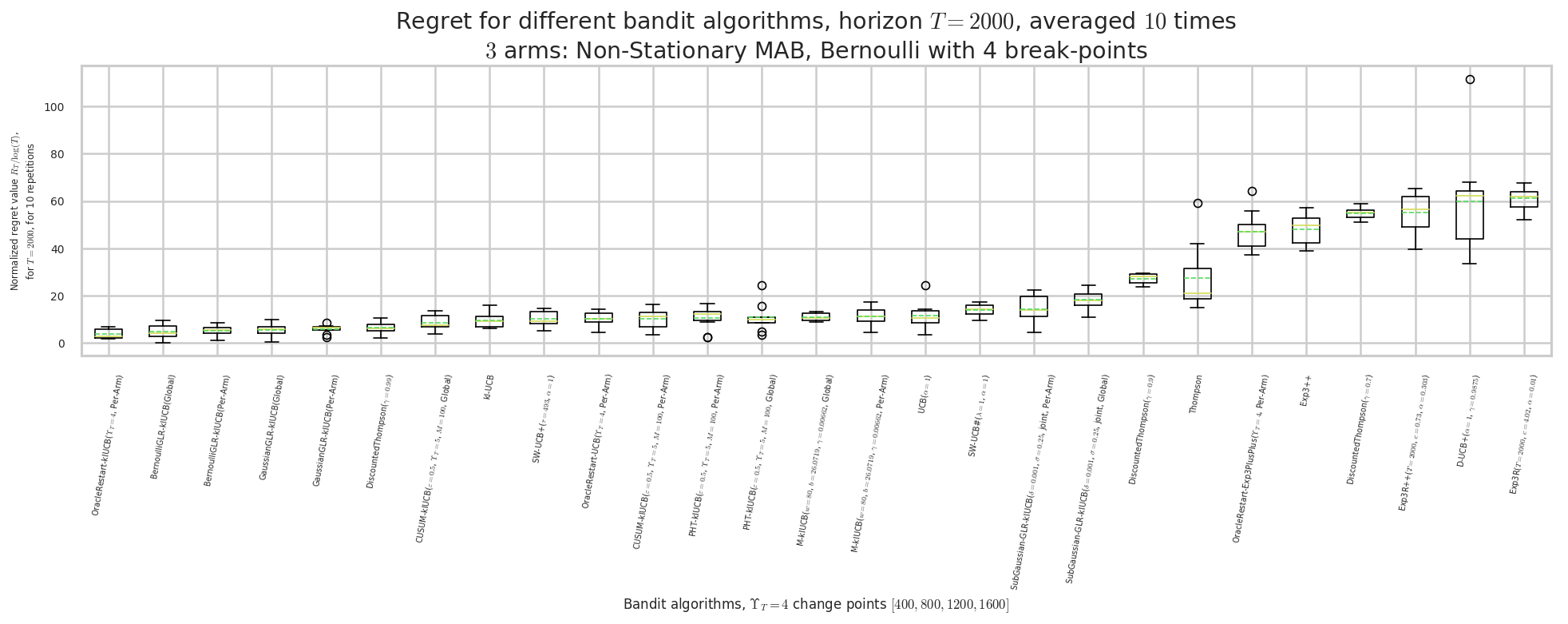

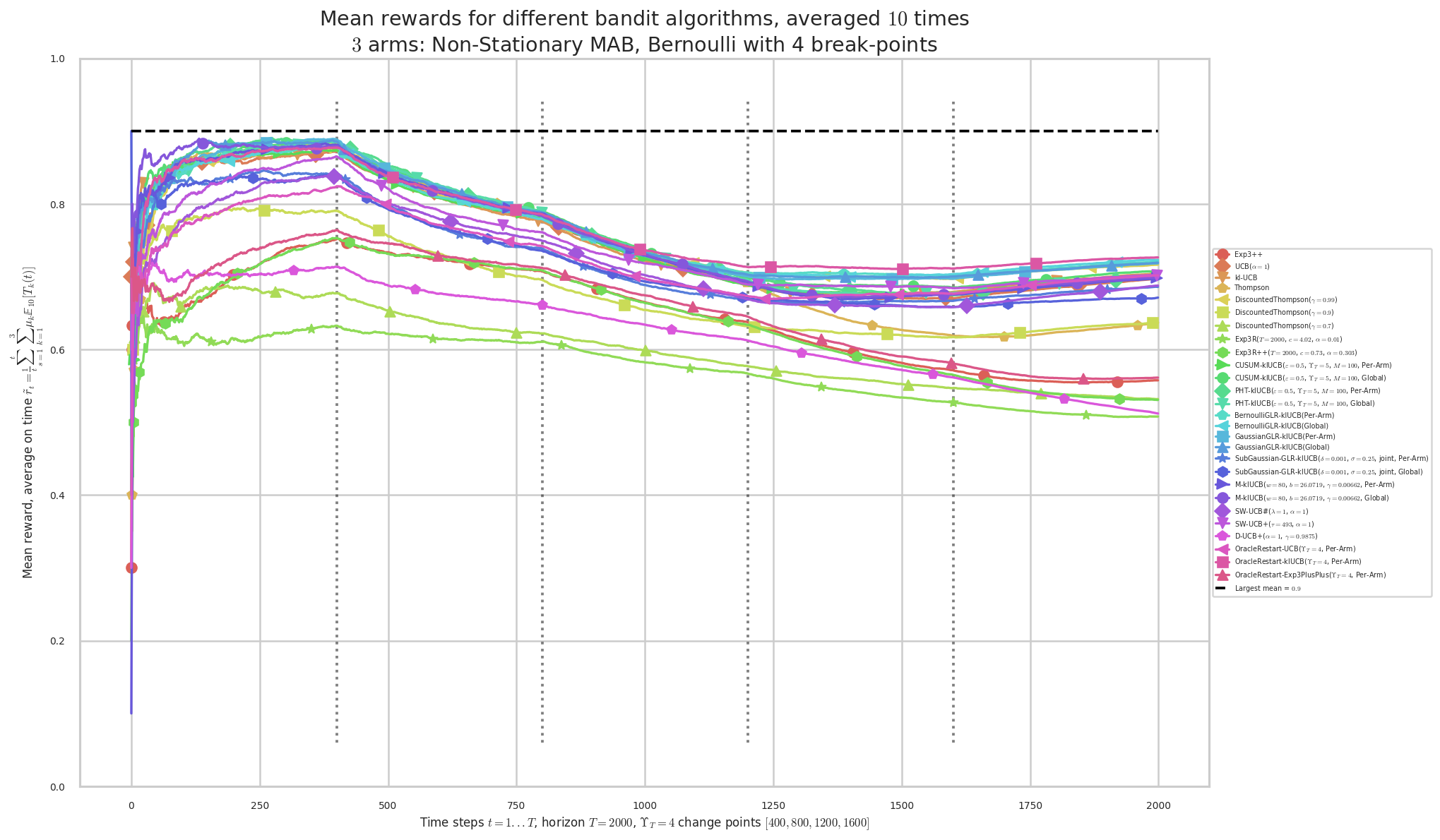

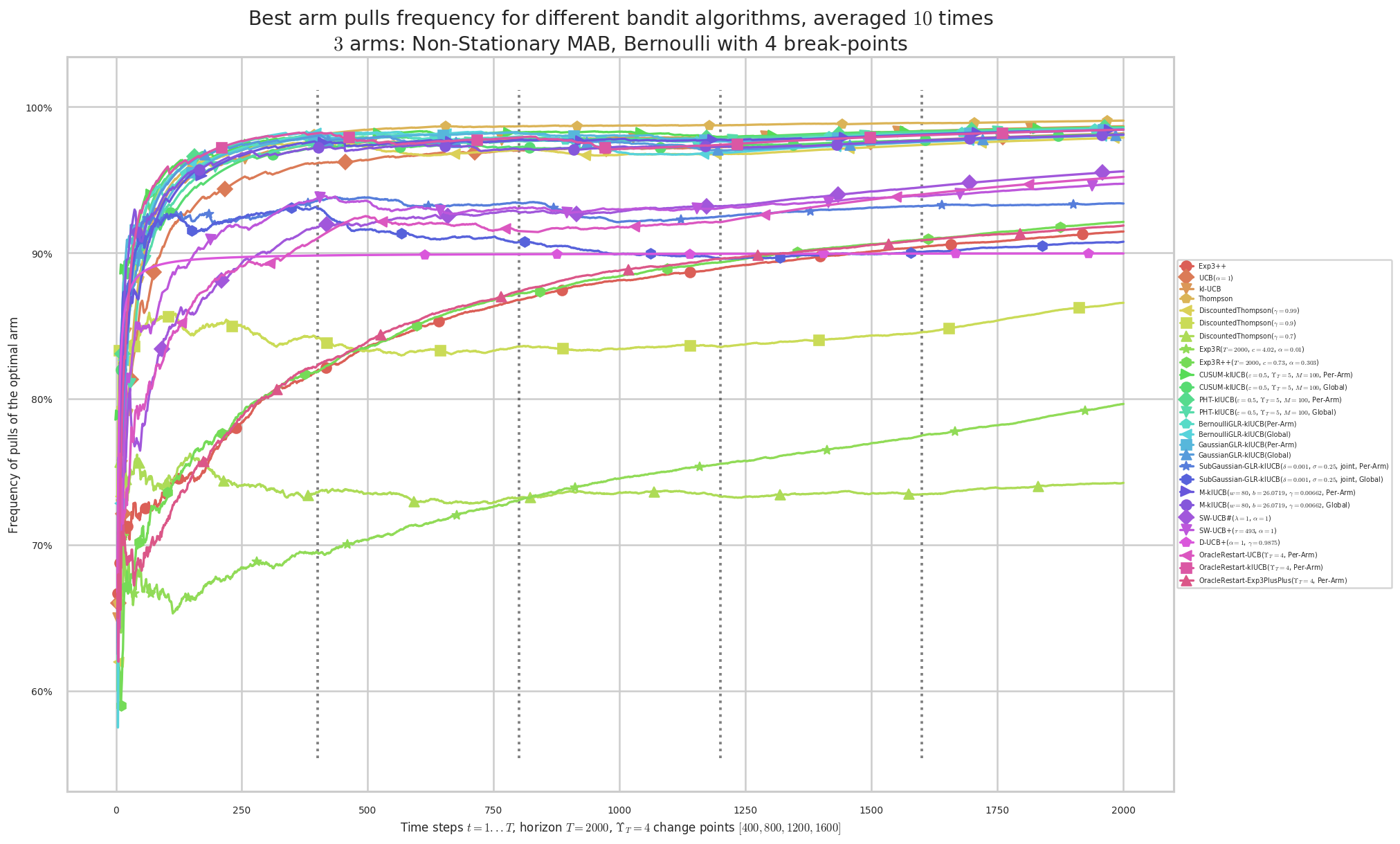

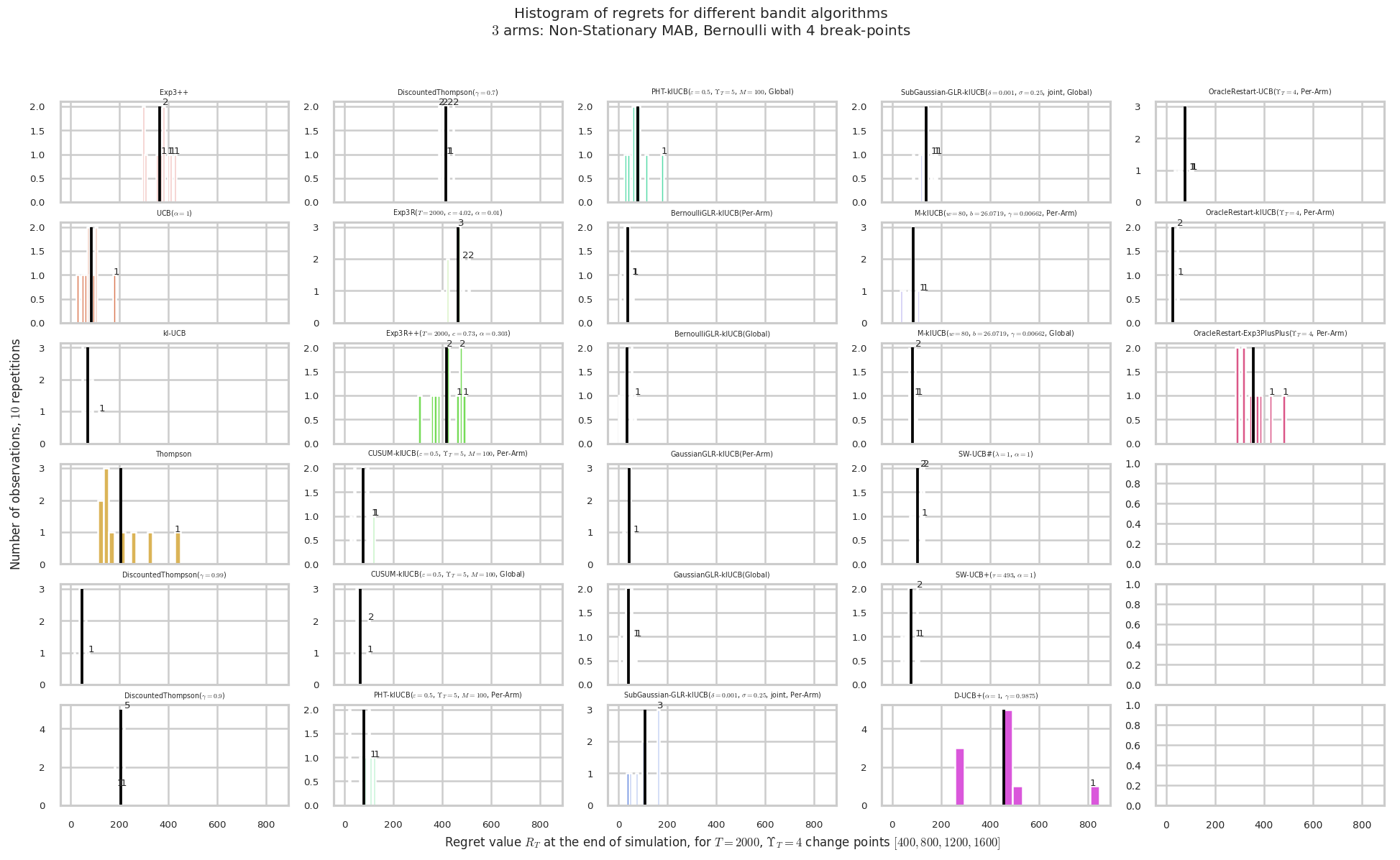

1.5.1 First problem with change on only one arm (Local Restart should be better)

1.5.2 Second problem with changes on all arms (Global restart should be better)

Demonstrations of Single-Player Simulations for Non-Stationary-Bandits

This notebook shows how to 1) define, 2) launch, and 3) plot the results of numerical simulations of piecewise stationary (multi-armed) bandit problems using my framework SMPyBandits. For more details on the maths behind this problem, see this page in the documentation: SMPyBandits.GitHub.io/NonStationaryBandits.html.

First, make sure that you are either in the main folder of the project or that SMPyBandits is installed, so that Evaluator can be imported from the Environment package.

WARNING If you are running this notebook locally, in the [notebooks](https://github.com/SMPyBandits/SMPyBandits/tree/master/notebooks) folder in the [SMPyBandits](https://github.com/SMPyBandits/SMPyBandits/) source, you need to do:

[1]:

import sys

sys.path.insert(0, '..')

If you are running this notebook elsewhere (in particular from Google Colab or MyBinder), SMPyBandits can easily be installed with pip:

[2]:

try:
    import SMPyBandits
except ImportError:
    !pip3 install SMPyBandits

Info: Using the Jupyter notebook version of the tqdm() decorator, tqdm_notebook() ...

Let’s just check the versions of the installed modules:

[3]:

!pip3 install watermark > /dev/null

[4]:

%load_ext watermark

%watermark -v -m -p SMPyBandits,numpy,matplotlib -a "Lilian Besson"

Lilian Besson

CPython 3.6.7

IPython 7.2.0

SMPyBandits 0.9.4

numpy 1.15.4

matplotlib 3.0.2

compiler : GCC 8.2.0

system : Linux

release : 4.15.0-42-generic

machine : x86_64

processor : x86_64

CPU cores : 4

interpreter: 64bit

We can now import all the modules we need for this demonstration.

[5]:

import numpy as np

[44]:

FIGSIZE = (19.80, 10.80)

DPI = 160

[45]:

# Large figures for pretty notebooks

import matplotlib as mpl

mpl.rcParams['figure.figsize'] = FIGSIZE

mpl.rcParams['figure.dpi'] = DPI

[46]:

# Local imports

from SMPyBandits.Environment import Evaluator, tqdm

We also need arms, for instance Bernoulli-distributed arms:

[10]:

# Import arms

from SMPyBandits.Arms import Bernoulli

And finally we need some single-player Reinforcement Learning algorithms:

[11]:

# Import algorithms

from SMPyBandits.Policies import *

Creating the problem

Parameters for the simulation

\(T = 2000\) is the time horizon,

\(N = 50\) is the number of repetitions, or 1 to debug the simulations,

N_JOBS = 4 is the number of cores used to parallelize the code,

\(5\) piecewise stationary sequences will have length 400.
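As a quick sanity check on these numbers, the sketch below recomputes the change points with the same scaling formula that the environment definitions of this notebook use (`int(p * HORIZON / 2000.0)`), and derives the length of each stationary sequence. The helper name `scaled_change_points` is hypothetical and is not part of SMPyBandits.

```python
def scaled_change_points(horizon, base_points=(0, 400, 800, 1200, 1600), base_horizon=2000.0):
    """Rescale change points defined for base_horizon to another horizon (hypothetical helper)."""
    return [int(p * horizon / base_horizon) for p in base_points]

HORIZON = 2000
points = scaled_change_points(HORIZON)
# Length of each stationary sequence, the last one running until the horizon
lengths = [end - start for start, end in zip(points, points[1:] + [HORIZON])]

print(points)   # [0, 400, 800, 1200, 1600]
print(lengths)  # [400, 400, 400, 400, 400]
```

With a larger horizon the change points scale proportionally, for instance `scaled_change_points(10000)` gives `[0, 2000, 4000, 6000, 8000]`.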

[49]:

from multiprocessing import cpu_count

CPU_COUNT = cpu_count()

N_JOBS = CPU_COUNT if CPU_COUNT <= 4 else CPU_COUNT - 4

print("Using {} jobs in parallel...".format(N_JOBS))

Using 4 jobs in parallel...

[51]:

HORIZON = 2000

REPETITIONS = 50

print("Using T = {}, and N = {} repetitions".format(HORIZON, REPETITIONS))

Using T = 2000, and N = 50 repetitions

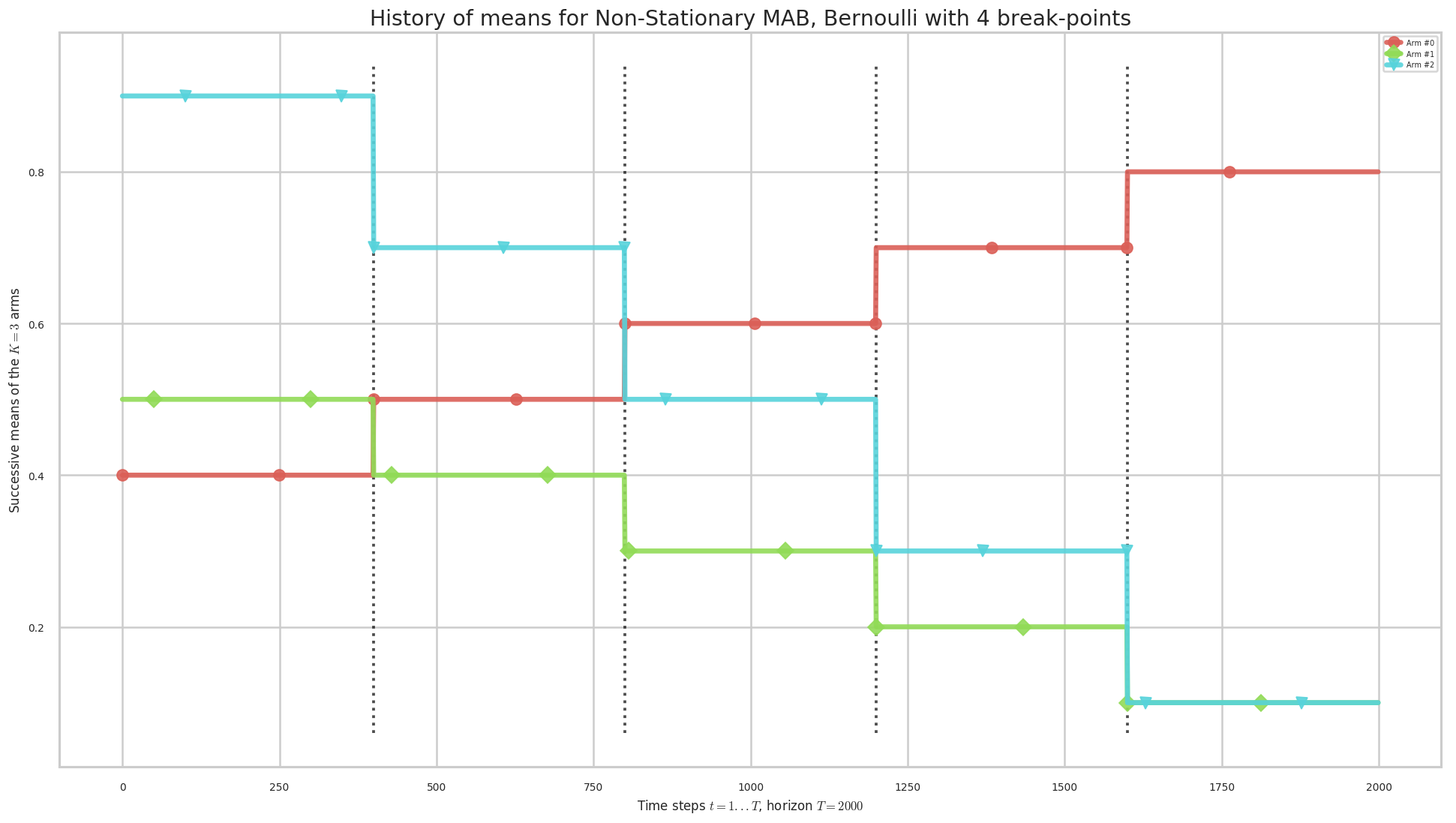

Two MAB problems with Bernoulli arms and piecewise stationary means

In this example we consider \(2\) problems with Bernoulli arms, which differ in their piecewise stationary means.

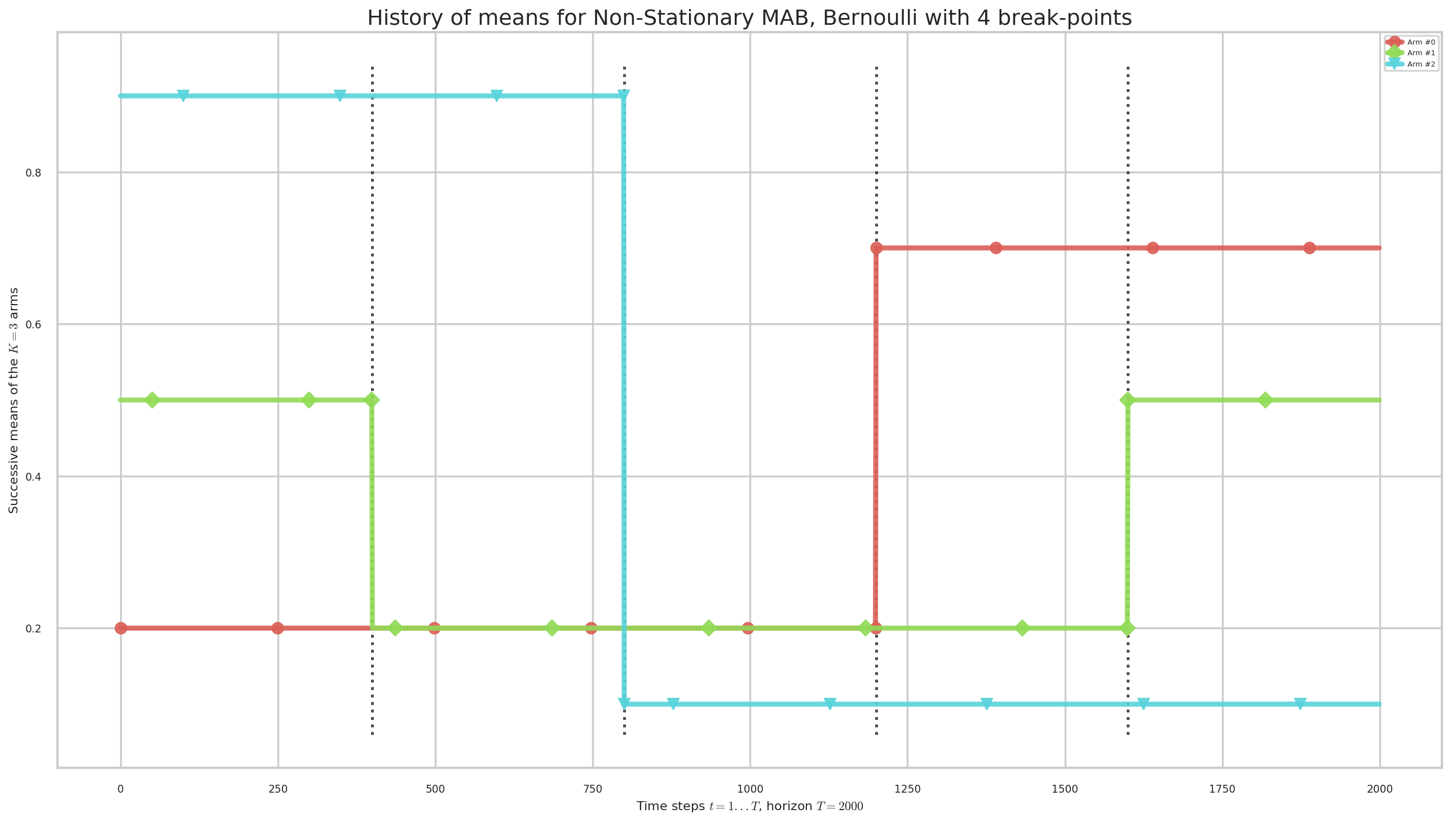

The first problem has a change on only one arm at every breakpoint,

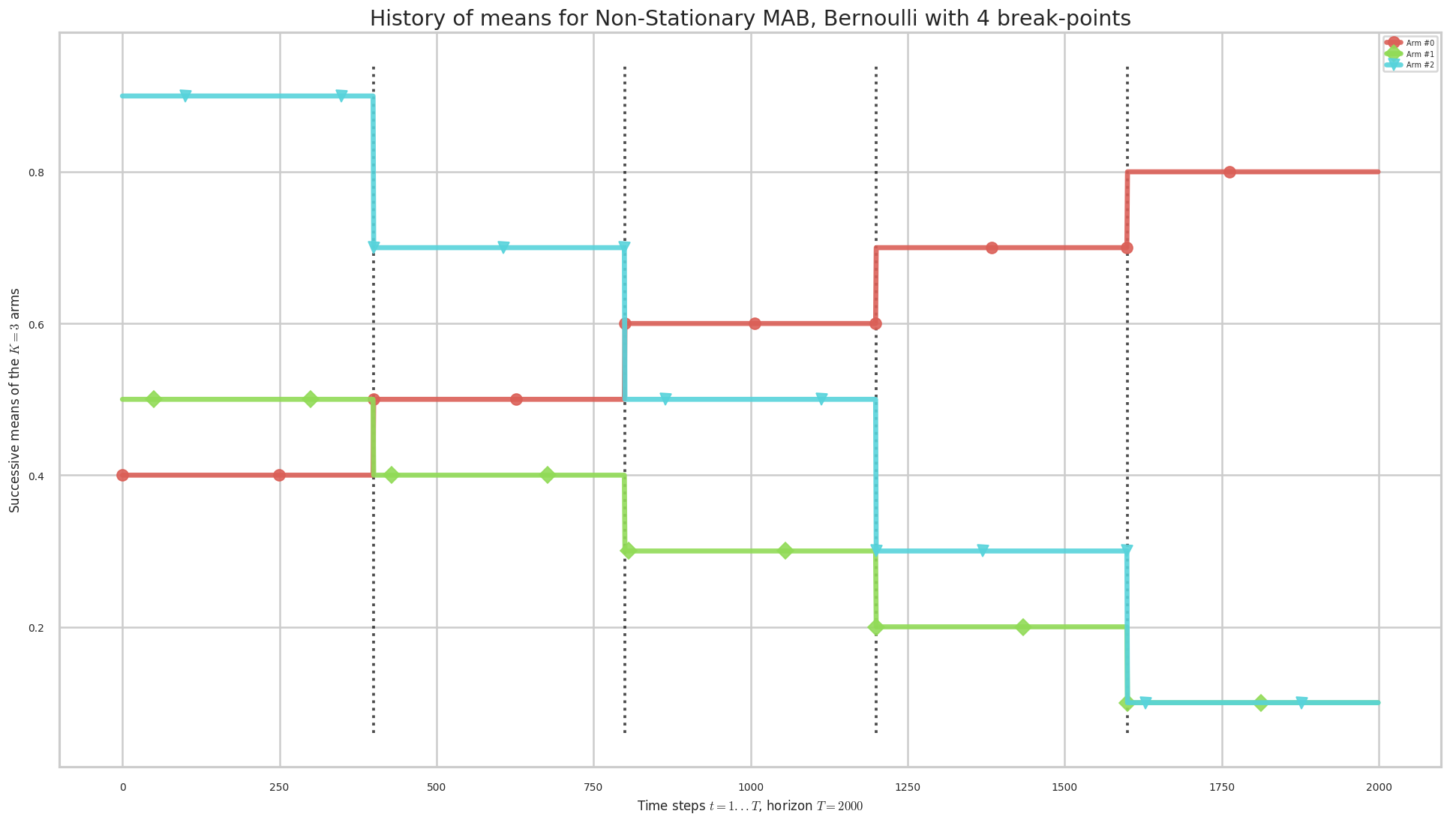

The second problem has changes on all arms at every breakpoint.

[53]:

ENVIRONMENTS = []

[54]:

ENVIRONMENT_0 = {   # A simple piece-wise stationary problem
    "arm_type": Bernoulli,
    "params": {
        "listOfMeans": [
            [0.2, 0.5, 0.9],  # 0 to 399
            [0.2, 0.2, 0.9],  # 400 to 799
            [0.2, 0.2, 0.1],  # 800 to 1199
            [0.7, 0.2, 0.1],  # 1200 to 1599
            [0.7, 0.5, 0.1],  # 1600 to end
        ],
        "changePoints": [
            int(0    * HORIZON / 2000.0),
            int(400  * HORIZON / 2000.0),
            int(800  * HORIZON / 2000.0),
            int(1200 * HORIZON / 2000.0),
            int(1600 * HORIZON / 2000.0),
        ],
    }
}

[55]:

# Problem 2: changes occur on all (or almost all) arms at each breakpoint
ENVIRONMENT_1 = {   # A simple piece-wise stationary problem
    "arm_type": Bernoulli,
    "params": {
        "listOfMeans": [
            [0.4, 0.5, 0.9],  # 0 to 399
            [0.5, 0.4, 0.7],  # 400 to 799
            [0.6, 0.3, 0.5],  # 800 to 1199
            [0.7, 0.2, 0.3],  # 1200 to 1599
            [0.8, 0.1, 0.1],  # 1600 to end
        ],
        "changePoints": [
            int(0    * HORIZON / 2000.0),
            int(400  * HORIZON / 2000.0),
            int(800  * HORIZON / 2000.0),
            int(1200 * HORIZON / 2000.0),
            int(1600 * HORIZON / 2000.0),
        ],
    }
}
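To double-check the claim that the first problem changes one arm per breakpoint while the second changes all of them, here is a small self-contained sketch. It copies the two `listOfMeans` tables from the cells above; the names `MEANS_0`, `MEANS_1` and `nb_changed_arms` are hypothetical helpers, not part of SMPyBandits.

```python
# Means copied from ENVIRONMENT_0 and ENVIRONMENT_1 (one row per stationary sequence)
MEANS_0 = [
    [0.2, 0.5, 0.9],
    [0.2, 0.2, 0.9],
    [0.2, 0.2, 0.1],
    [0.7, 0.2, 0.1],
    [0.7, 0.5, 0.1],
]
MEANS_1 = [
    [0.4, 0.5, 0.9],
    [0.5, 0.4, 0.7],
    [0.6, 0.3, 0.5],
    [0.7, 0.2, 0.3],
    [0.8, 0.1, 0.1],
]

def nb_changed_arms(list_of_means):
    """For each breakpoint, count the arms whose mean differs between consecutive sequences."""
    return [
        sum(before != after for before, after in zip(mus, next_mus))
        for mus, next_mus in zip(list_of_means, list_of_means[1:])
    ]

print(nb_changed_arms(MEANS_0))  # [1, 1, 1, 1]
print(nb_changed_arms(MEANS_1))  # [3, 3, 3, 3]
```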

[56]:

ENVIRONMENTS = [

ENVIRONMENT_0,

ENVIRONMENT_1,

]

list_nb_arms = [len(env["params"]["listOfMeans"][0]) for env in ENVIRONMENTS]

NB_ARMS = max(list_nb_arms)

assert all(n == NB_ARMS for n in list_nb_arms), "Error: it is NOT supported to have successive problems with a different number of arms!"

print("==> Using K = {} arms".format(NB_ARMS))

NB_BREAK_POINTS = max(len(env["params"]["changePoints"]) for env in ENVIRONMENTS)

print("==> Using Upsilon_T = {} change points".format(NB_BREAK_POINTS))

CHANGE_POINTS = np.unique(np.array(list(set.union(*(set(env["params"]["changePoints"]) for env in ENVIRONMENTS)))))

print("==> Using the following {} change points".format(list(CHANGE_POINTS)))

==> Using K = 3 arms

==> Using Upsilon_T = 5 change points

==> Using the following [0, 400, 800, 1200, 1600] change points
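To make the role of `listOfMeans` and `changePoints` concrete, the following sketch looks up the vector of means in effect at a given time step. It is only an illustration under the assumption that sequence number m covers the time steps from `changePoints[m]` up to (but excluding) the next change point, as the comments in the environment cells suggest; `means_at` is a hypothetical helper and does not reflect how SMPyBandits implements this internally.

```python
from bisect import bisect_right

# Values copied from the first problem (ENVIRONMENT_0) with HORIZON = 2000
CHANGE_POINTS = [0, 400, 800, 1200, 1600]
LIST_OF_MEANS = [
    [0.2, 0.5, 0.9],
    [0.2, 0.2, 0.9],
    [0.2, 0.2, 0.1],
    [0.7, 0.2, 0.1],
    [0.7, 0.5, 0.1],
]

def means_at(t, list_of_means=LIST_OF_MEANS, change_points=CHANGE_POINTS):
    """Return the arm means in effect at time step t (hypothetical helper)."""
    # Index of the last change point that is <= t
    segment = bisect_right(change_points, t) - 1
    return list_of_means[segment]

print(means_at(399))   # [0.2, 0.5, 0.9]
print(means_at(400))   # [0.2, 0.2, 0.9]
print(means_at(1999))  # [0.7, 0.5, 0.1]
```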

Some MAB algorithms

We want to compare some classical MAB algorithms (\(\mathrm{UCB}_1\), Thompson Sampling and \(\mathrm{kl}\)-\(\mathrm{UCB}\)) that are designed to solve stationary problems against other algorithms designed to solve piecewise-stationary problems.

Parameters of the algorithms

[15]:

klucb = klucb_mapping.get(str(ENVIRONMENTS[0]['arm_type']), klucbBern)

klucb

[15]:

<function SMPyBandits.Policies.kullback.klucbBern>

[61]:

WINDOW_SIZE = int(80 * np.ceil(HORIZON / 10000))

print("M-UCB will use a window of size {}".format(WINDOW_SIZE))

M-UCB will use a window of size 80

Algorithms

[62]:

POLICIES = [ # XXX Regular adversarial bandits algorithms!

{ "archtype": Exp3PlusPlus, "params": {} },

] + [ # XXX Regular stochastic bandits algorithms!

{ "archtype": UCBalpha, "params": { "alpha": 1, } },

{ "archtype": klUCB, "params": { "klucb": klucb, } },

{ "archtype": Thompson, "params": { "posterior": Beta, } },

] + [ # XXX This is still highly experimental!

{ "archtype": DiscountedThompson, "params": {

"posterior": DiscountedBeta, "gamma": gamma

} }

for gamma in [0.99, 0.9, 0.7]

] + [ # --- The Exp3R algorithm works reasonably well

{ "archtype": Exp3R, "params": { "horizon": HORIZON, } }

] + [ # --- XXX The Exp3RPlusPlus variant of Exp3R algorithm works also reasonably well

{ "archtype": Exp3RPlusPlus, "params": { "horizon": HORIZON, } }

] + [ # --- XXX Test a few CD-MAB algorithms that need to know NB_BREAK_POINTS

{ "archtype": archtype, "params": {

"horizon": HORIZON,

"max_nb_random_events": NB_BREAK_POINTS,

"policy": policy,

"per_arm_restart": per_arm_restart,

} }

for archtype in [

CUSUM_IndexPolicy,

PHT_IndexPolicy, # OK PHT_IndexPolicy is very much like CUSUM

]

for policy in [

# UCB, # XXX comment to only test klUCB

klUCB,

]

for per_arm_restart in [

True, # Per-arm restart XXX comment to only test global arm

False, # Global restart XXX seems more efficient? (at least more memory efficient!)

]

] + [ # --- XXX Test a few CD-MAB algorithms

{ "archtype": archtype, "params": {

"horizon": HORIZON,

"policy": policy,

"per_arm_restart": per_arm_restart,

} }

for archtype in [

BernoulliGLR_IndexPolicy, # OK BernoulliGLR_IndexPolicy is very much like CUSUM

GaussianGLR_IndexPolicy, # OK GaussianGLR_IndexPolicy is very much like Bernoulli GLR

SubGaussianGLR_IndexPolicy, # OK SubGaussianGLR_IndexPolicy is very much like Gaussian GLR

]

for policy in [

# UCB, # XXX comment to only test klUCB

klUCB,

]

for per_arm_restart in [

True, # Per-arm restart XXX comment to only test global arm

False, # Global restart XXX seems more efficient? (at least more memory efficient!)

]

] + [ # --- XXX The Monitored_IndexPolicy with specific tuning of the input parameters

{ "archtype": Monitored_IndexPolicy, "params": {

"horizon": HORIZON,

"w": WINDOW_SIZE,

"b": np.sqrt(WINDOW_SIZE/2 * np.log(2 * NB_ARMS * HORIZON**2)),

"policy": policy,

"per_arm_restart": per_arm_restart,

} }

for policy in [

# UCB,

klUCB, # XXX comment to only test UCB

]

for per_arm_restart in [

True, # Per-arm restart XXX comment to only test global arm

False, # Global restart XXX seems more efficient? (at least more memory efficient!)

]

] + [ # --- DONE The SW_UCB_Hash algorithm works fine!

{ "archtype": SWHash_IndexPolicy, "params": {

"alpha": alpha, "lmbda": lmbda, "policy": UCB,

} }

for alpha in [1.0]

for lmbda in [1]

] + [ # --- # XXX experimental other version of the sliding window algorithm, knowing the horizon

{ "archtype": SWUCBPlus, "params": {

"horizon": HORIZON, "alpha": alpha,

} }

for alpha in [1.0]

] + [ # --- # XXX experimental discounted UCB algorithm, knowing the horizon

{ "archtype": DiscountedUCBPlus, "params": {

"max_nb_random_events": max_nb_random_events, "alpha": alpha, "horizon": HORIZON,

} }

for alpha in [1.0]

for max_nb_random_events in [NB_BREAK_POINTS]

] + [ # --- DONE the OracleSequentiallyRestartPolicy with klUCB/UCB policy works quite well, but NOT optimally!

{ "archtype": OracleSequentiallyRestartPolicy, "params": {

"changePoints": CHANGE_POINTS, "policy": policy,

"per_arm_restart": per_arm_restart,

# "full_restart_when_refresh": full_restart_when_refresh,

} }

for policy in [

UCB,

klUCB, # XXX comment to only test UCB

Exp3PlusPlus, # XXX comment to only test UCB

]

for per_arm_restart in [True] #, False]

# for full_restart_when_refresh in [True, False]

]
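The two numeric parameters passed to `Monitored_IndexPolicy` above, the window size `w` and the threshold `b`, can be recomputed by hand. The sketch below repeats the two formulas from the cells above, using the standard `math` module instead of NumPy only to stay dependency-free; the function names are hypothetical.

```python
import math

def window_size(horizon):
    """Same rule as WINDOW_SIZE above: 80 per started block of 10000 time steps."""
    return int(80 * math.ceil(horizon / 10000))

def threshold_b(w, nb_arms, horizon):
    """Same formula as the "b" parameter above: sqrt(w/2 * log(2 * K * T^2))."""
    return math.sqrt(w / 2 * math.log(2 * nb_arms * horizon**2))

w = window_size(2000)
b = threshold_b(w, nb_arms=3, horizon=2000)
print(w)            # 80
print(round(b, 4))  # 26.0719
```

For a horizon of 20000 the same rule would give a window of size 160.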

The complete configuration for the problems and these algorithms is then a simple dictionary:

[64]:

configuration = {
    # --- Duration of the experiment
    "horizon": HORIZON,
    # --- Number of repetitions of the experiment (to have an average)
    "repetitions": REPETITIONS,
    # --- Parameters for the use of joblib.Parallel
    "n_jobs": N_JOBS,    # = nb of CPU cores
    "verbosity": 0,      # joblib verbosity level (0 is the quietest)
    # --- Arms
    "environment": ENVIRONMENTS,
    # --- Algorithms
    "policies": POLICIES,
    # --- Random events
    "nb_break_points": NB_BREAK_POINTS,
    # --- Should we plot the lower-bounds or not?
    "plot_lowerbound": False,  # XXX Default
}

configuration

[64]:

{'horizon': 2000,

'repetitions': 50,

'n_jobs': 4,

'verbosity': 0,

'environment': [{'arm_type': SMPyBandits.Arms.Bernoulli.Bernoulli,

'params': {'listOfMeans': [[0.2, 0.5, 0.9],

[0.2, 0.2, 0.9],

[0.2, 0.2, 0.1],

[0.7, 0.2, 0.1],

[0.7, 0.5, 0.1]],

'changePoints': [0, 400, 800, 1200, 1600]}},

{'arm_type': SMPyBandits.Arms.Bernoulli.Bernoulli,

'params': {'listOfMeans': [[0.4, 0.5, 0.9],

[0.5, 0.4, 0.7],

[0.6, 0.3, 0.5],

[0.7, 0.2, 0.3],

[0.8, 0.1, 0.1]],

'changePoints': [0, 400, 800, 1200, 1600]}}],

'policies': [{'archtype': SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus,

'params': {}},

{'archtype': SMPyBandits.Policies.UCBalpha.UCBalpha, 'params': {'alpha': 1}},

{'archtype': SMPyBandits.Policies.klUCB.klUCB,

'params': {'klucb': <function SMPyBandits.Policies.kullback.klucbBern>}},

{'archtype': SMPyBandits.Policies.Thompson.Thompson,

'params': {'posterior': SMPyBandits.Policies.Posterior.Beta.Beta}},

{'archtype': SMPyBandits.Policies.DiscountedThompson.DiscountedThompson,

'params': {'posterior': SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta,

'gamma': 0.99}},

{'archtype': SMPyBandits.Policies.DiscountedThompson.DiscountedThompson,

'params': {'posterior': SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta,

'gamma': 0.9}},

{'archtype': SMPyBandits.Policies.DiscountedThompson.DiscountedThompson,

'params': {'posterior': SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta,

'gamma': 0.7}},

{'archtype': SMPyBandits.Policies.CD_UCB.Exp3R, 'params': {'horizon': 2000}},

{'archtype': SMPyBandits.Policies.CD_UCB.Exp3RPlusPlus,

'params': {'horizon': 2000}},

{'archtype': SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy,

'params': {'horizon': 2000,

'max_nb_random_events': 5,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy,

'params': {'horizon': 2000,

'max_nb_random_events': 5,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': False}},

{'archtype': SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy,

'params': {'horizon': 2000,

'max_nb_random_events': 5,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy,

'params': {'horizon': 2000,

'max_nb_random_events': 5,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': False}},

{'archtype': SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy,

'params': {'horizon': 2000,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy,

'params': {'horizon': 2000,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': False}},

{'archtype': SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy,

'params': {'horizon': 2000,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy,

'params': {'horizon': 2000,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': False}},

{'archtype': SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy,

'params': {'horizon': 2000,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy,

'params': {'horizon': 2000,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': False}},

{'archtype': SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy,

'params': {'horizon': 2000,

'w': 80,

'b': 26.07187326473663,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy,

'params': {'horizon': 2000,

'w': 80,

'b': 26.07187326473663,

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': False}},

{'archtype': SMPyBandits.Policies.SWHash_UCB.SWHash_IndexPolicy,

'params': {'alpha': 1.0,

'lmbda': 1,

'policy': SMPyBandits.Policies.UCB.UCB}},

{'archtype': SMPyBandits.Policies.SlidingWindowUCB.SWUCBPlus,

'params': {'horizon': 2000, 'alpha': 1.0}},

{'archtype': SMPyBandits.Policies.DiscountedUCB.DiscountedUCBPlus,

'params': {'max_nb_random_events': 5, 'alpha': 1.0, 'horizon': 2000}},

{'archtype': SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy,

'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]),

'policy': SMPyBandits.Policies.UCB.UCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy,

'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]),

'policy': SMPyBandits.Policies.klUCB.klUCB,

'per_arm_restart': True}},

{'archtype': SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy,

'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]),

'policy': SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus,

'per_arm_restart': True}}],

'nb_break_points': 5,

'plot_lowerbound': False}

[65]:

# (almost) unique hash from the configuration

hashvalue = abs(hash((tuple(configuration.keys()), tuple([(len(k) if isinstance(k, (dict, tuple, list)) else k) for k in configuration.values()]))))

print("This configuration has a hash value = {}".format(hashvalue))

This configuration has a hash value = 427944937445357777
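Note that Python's built-in `hash()` is salted per process for strings (unless `PYTHONHASHSEED` is fixed), so the value printed above can differ from one session to the next. If a reproducible identifier is wanted, one possible alternative is to digest a textual summary of the configuration with `hashlib`. This is a sketch under that assumption; `stable_config_hash` and `toy_configuration` are hypothetical names, and the notebook itself uses `hash()`.

```python
import hashlib

def stable_config_hash(configuration):
    """Digest the keys and a summary of the values; reproducible across sessions (hypothetical helper)."""
    summary = (
        tuple(configuration.keys()),
        # Containers are summarized by their length, like in the cell above
        tuple(len(v) if isinstance(v, (dict, tuple, list)) else v for v in configuration.values()),
    )
    return hashlib.sha256(repr(summary).encode("utf-8")).hexdigest()[:16]

# Toy stand-in for the real configuration dictionary
toy_configuration = {"horizon": 2000, "repetitions": 50, "policies": ["a", "b"]}
print(stable_config_hash(toy_configuration))
```

Like the original one-liner, this only summarizes containers by their length, so it remains an "almost unique" identifier rather than a full fingerprint of the configuration.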

[66]:

import os, os.path

[67]:

subfolder = "SP__K{}_T{}_N{}__{}_algos".format(NB_ARMS, HORIZON, REPETITIONS, len(POLICIES))

PLOT_DIR = "plots"

plot_dir = os.path.join(PLOT_DIR, subfolder)

# Create the sub folder (and the parent PLOT_DIR folder if it does not exist yet)
if os.path.isdir(plot_dir):
    print("{} is already a directory here...".format(plot_dir))
elif os.path.isfile(plot_dir):
    raise ValueError("[ERROR] {} is a file, cannot use it as a directory !".format(plot_dir))
else:
    os.makedirs(plot_dir)

print("Using sub folder = '{}' and plotting in '{}'...".format(subfolder, plot_dir))

Using sub folder = 'SP__K3_T2000_N50__27_algos' and plotting in 'plots/SP__K3_T2000_N50__27_algos'...

[68]:

mainfig = os.path.join(plot_dir, "main")

print("Using main figure name as '{}_{}'...".format(mainfig, hashvalue))

Using main figure name as 'plots/SP__K3_T2000_N50__27_algos/main_427944937445357777'...

Checking if the problems are too hard or not

If we assume we have a result that bounds the delay of the change-detection algorithm by a certain quantity \(D\), we can check that the sequence lengths (i.e., \(\tau_{m+1}-\tau_m\)) are large enough for the CD-klUCB algorithm (our proposal) to be efficient.

[69]:

def lowerbound_on_sequence_length(horizon, gap):
    r""" Compute the lower bound on the sequence length that is needed to have a reasonable bound on the delay of our change-detection algorithm.

    - It returns the smallest possible sequence length :math:`L = \tau_{m+1} - \tau_m` satisfying:

    .. math:: L \geq \frac{8}{\Delta^2} \log(T).
    """
    # An arm whose mean does not change imposes no constraint
    if np.isclose(gap, 0): return 0
    condition = lambda length: length >= (8/gap**2) * np.log(horizon)
    # Search for the smallest integer length satisfying the condition
    length = 1
    while not condition(length):
        length += 1
    return length

[70]:

def check_condition_on_piecewise_stationary_problems(horizon, listOfMeans, changePoints):
    """ Check some conditions on the piecewise stationary problem."""
    M = len(listOfMeans)
    print("For a piecewise stationary problem with M = {} sequences...".format(M))  # DEBUG
    for m in range(M - 1):
        mus_m = listOfMeans[m]
        tau_m = changePoints[m]
        mus_mp1 = listOfMeans[m+1]
        tau_mp1 = changePoints[m+1]
        print("\nChecking m-th (m = {}) sequence, µ_m = {}, µ_m+1 = {} and tau_m = {} and tau_m+1 = {}".format(m, mus_m, mus_mp1, tau_m, tau_mp1))  # DEBUG
        for i, (mu_i_m, mu_i_mp1) in enumerate(zip(mus_m, mus_mp1)):
            gap = abs(mu_i_m - mu_i_mp1)
            length = tau_mp1 - tau_m
            lowerbound = lowerbound_on_sequence_length(horizon, gap)
            print(" - For arm i = {}, gap = {:.3g} and length = {} with lowerbound on length = {}...".format(i, gap, length, lowerbound))  # DEBUG
            if length < lowerbound:
                print("WARNING For arm i = {}, gap = {:.3g} and length = {} < lowerbound on length = {} !!".format(i, gap, length, lowerbound))  # DEBUG

[71]:

for envId, env in enumerate(configuration["environment"]):
    print("\n\n\nChecking environment number {}".format(envId))  # DEBUG
    listOfMeans = env["params"]["listOfMeans"]
    changePoints = env["params"]["changePoints"]
    check_condition_on_piecewise_stationary_problems(HORIZON, listOfMeans, changePoints)

Checking environment number 0

For a piecewise stationary problem with M = 5 sequences...

Checking m-th (m = 0) sequence, µ_m = [0.2, 0.5, 0.9], µ_m+1 = [0.2, 0.2, 0.9] and tau_m = 0 and tau_m+1 = 400

- For arm i = 0, gap = 0 and length = 400 with lowerbound on length = 0...

- For arm i = 1, gap = 0.3 and length = 400 with lowerbound on length = 676...

WARNING For arm i = 1, gap = 0.3 and length = 400 < lowerbound on length = 676 !!

- For arm i = 2, gap = 0 and length = 400 with lowerbound on length = 0...

Checking m-th (m = 1) sequence, µ_m = [0.2, 0.2, 0.9], µ_m+1 = [0.2, 0.2, 0.1] and tau_m = 400 and tau_m+1 = 800

- For arm i = 0, gap = 0 and length = 400 with lowerbound on length = 0...

- For arm i = 1, gap = 0 and length = 400 with lowerbound on length = 0...

- For arm i = 2, gap = 0.8 and length = 400 with lowerbound on length = 96...

Checking m-th (m = 2) sequence, µ_m = [0.2, 0.2, 0.1], µ_m+1 = [0.7, 0.2, 0.1] and tau_m = 800 and tau_m+1 = 1200

- For arm i = 0, gap = 0.5 and length = 400 with lowerbound on length = 244...

- For arm i = 1, gap = 0 and length = 400 with lowerbound on length = 0...

- For arm i = 2, gap = 0 and length = 400 with lowerbound on length = 0...

Checking m-th (m = 3) sequence, µ_m = [0.7, 0.2, 0.1], µ_m+1 = [0.7, 0.5, 0.1] and tau_m = 1200 and tau_m+1 = 1600

- For arm i = 0, gap = 0 and length = 400 with lowerbound on length = 0...

- For arm i = 1, gap = 0.3 and length = 400 with lowerbound on length = 676...

WARNING For arm i = 1, gap = 0.3 and length = 400 < lowerbound on length = 676 !!

- For arm i = 2, gap = 0 and length = 400 with lowerbound on length = 0...

Checking environment number 1

For a piecewise stationary problem with M = 5 sequences...

Checking m-th (m = 0) sequence, µ_m = [0.4, 0.5, 0.9], µ_m+1 = [0.5, 0.4, 0.7] and tau_m = 0 and tau_m+1 = 400

- For arm i = 0, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 0, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 1, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 1, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 2, gap = 0.2 and length = 400 with lowerbound on length = 1521...

WARNING For arm i = 2, gap = 0.2 and length = 400 < lowerbound on length = 1521 !!

Checking m-th (m = 1) sequence, µ_m = [0.5, 0.4, 0.7], µ_m+1 = [0.6, 0.3, 0.5] and tau_m = 400 and tau_m+1 = 800

- For arm i = 0, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 0, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 1, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 1, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 2, gap = 0.2 and length = 400 with lowerbound on length = 1521...

WARNING For arm i = 2, gap = 0.2 and length = 400 < lowerbound on length = 1521 !!

Checking m-th (m = 2) sequence, µ_m = [0.6, 0.3, 0.5], µ_m+1 = [0.7, 0.2, 0.3] and tau_m = 800 and tau_m+1 = 1200

- For arm i = 0, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 0, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 1, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 1, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 2, gap = 0.2 and length = 400 with lowerbound on length = 1521...

WARNING For arm i = 2, gap = 0.2 and length = 400 < lowerbound on length = 1521 !!

Checking m-th (m = 3) sequence, µ_m = [0.7, 0.2, 0.3], µ_m+1 = [0.8, 0.1, 0.1] and tau_m = 1200 and tau_m+1 = 1600

- For arm i = 0, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 0, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 1, gap = 0.1 and length = 400 with lowerbound on length = 6081...

WARNING For arm i = 1, gap = 0.1 and length = 400 < lowerbound on length = 6081 !!

- For arm i = 2, gap = 0.2 and length = 400 with lowerbound on length = 1521...

WARNING For arm i = 2, gap = 0.2 and length = 400 < lowerbound on length = 1521 !!

This check shows that neither of the two problems is “easy enough” for our approach to be provably efficient: in both of them, some sequences are shorter than the lower bound.

Creating the Evaluator object
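The lower bounds printed above can also be obtained in closed form, since the smallest integer \(L\) with \(L \geq \frac{8}{\Delta^2} \log(T)\) is simply the ceiling of the right-hand side. The sketch below is an alternative to the incremental search used in `lowerbound_on_sequence_length`; the name `closed_form_lowerbound` is hypothetical, and the gaps are the ones appearing in the two problems.

```python
import math

def closed_form_lowerbound(horizon, gap):
    """Smallest integer L with L >= (8 / gap**2) * log(horizon), or 0 if the mean does not change."""
    if math.isclose(gap, 0.0, abs_tol=1e-12):
        return 0
    return math.ceil((8 / gap**2) * math.log(horizon))

for gap in [0.8, 0.5, 0.3, 0.2, 0.1]:
    print(gap, closed_form_lowerbound(2000, gap))
# 0.8 96
# 0.5 244
# 0.3 676
# 0.2 1521
# 0.1 6081
```

With sequences of length 400, only the gaps of 0.8 and 0.5 satisfy the condition, which matches the warnings listed above.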

[72]:

evaluation = Evaluator(configuration)

Number of policies in this comparison: 27

Time horizon: 2000

Number of repetitions: 50

Sampling rate for plotting, delta_t_plot: 1

Number of jobs for parallelization: 4

Using this dictionary to create a new environment:

{'arm_type': <class 'SMPyBandits.Arms.Bernoulli.Bernoulli'>, 'params': {'listOfMeans': [[0.2, 0.5, 0.9], [0.2, 0.2, 0.9], [0.2, 0.2, 0.1], [0.7, 0.2, 0.1], [0.7, 0.5, 0.1]], 'changePoints': [0, 400, 800, 1200, 1600]}}

Special MAB problem, with arm (possibly) changing at every time step, read from a dictionnary 'configuration' = {'arm_type': <class 'SMPyBandits.Arms.Bernoulli.Bernoulli'>, 'params': {'listOfMeans': [[0.2, 0.5, 0.9], [0.2, 0.2, 0.9], [0.2, 0.2, 0.1], [0.7, 0.2, 0.1], [0.7, 0.5, 0.1]], 'changePoints': [0, 400, 800, 1200, 1600]}} ...

- with 'arm_type' = <class 'SMPyBandits.Arms.Bernoulli.Bernoulli'>

- with 'params' = {'listOfMeans': [[0.2, 0.5, 0.9], [0.2, 0.2, 0.9], [0.2, 0.2, 0.1], [0.7, 0.2, 0.1], [0.7, 0.5, 0.1]], 'changePoints': [0, 400, 800, 1200, 1600]}

- with 'listOfMeans' = [[0.2 0.5 0.9]

[0.2 0.2 0.9]

[0.2 0.2 0.1]

[0.7 0.2 0.1]

[0.7 0.5 0.1]]

- with 'changePoints' = [0, 400, 800, 1200, 1600]

==> Creating the dynamic arms ...

- with 'nbArms' = 3

- with 'arms' = [B(0.2), B(0.5), B(0.9)]

- Initial draw of 'means' = [0.2 0.5 0.9]

Using this dictionary to create a new environment:

{'arm_type': <class 'SMPyBandits.Arms.Bernoulli.Bernoulli'>, 'params': {'listOfMeans': [[0.4, 0.5, 0.9], [0.5, 0.4, 0.7], [0.6, 0.3, 0.5], [0.7, 0.2, 0.3], [0.8, 0.1, 0.1]], 'changePoints': [0, 400, 800, 1200, 1600]}}

Special MAB problem, with arm (possibly) changing at every time step, read from a dictionnary 'configuration' = {'arm_type': <class 'SMPyBandits.Arms.Bernoulli.Bernoulli'>, 'params': {'listOfMeans': [[0.4, 0.5, 0.9], [0.5, 0.4, 0.7], [0.6, 0.3, 0.5], [0.7, 0.2, 0.3], [0.8, 0.1, 0.1]], 'changePoints': [0, 400, 800, 1200, 1600]}} ...

- with 'arm_type' = <class 'SMPyBandits.Arms.Bernoulli.Bernoulli'>

- with 'params' = {'listOfMeans': [[0.4, 0.5, 0.9], [0.5, 0.4, 0.7], [0.6, 0.3, 0.5], [0.7, 0.2, 0.3], [0.8, 0.1, 0.1]], 'changePoints': [0, 400, 800, 1200, 1600]}

- with 'listOfMeans' = [[0.4 0.5 0.9]

[0.5 0.4 0.7]

[0.6 0.3 0.5]

[0.7 0.2 0.3]

[0.8 0.1 0.1]]

- with 'changePoints' = [0, 400, 800, 1200, 1600]

==> Creating the dynamic arms ...

- with 'nbArms' = 3

- with 'arms' = [B(0.4), B(0.5), B(0.9)]

- Initial draw of 'means' = [0.4 0.5 0.9]

Number of environments to try: 2

[73]:

def printAll(evaluation, envId):
    print("\nGiving the vector of final regrets ...")
    evaluation.printLastRegrets(envId)
    print("\nGiving the final ranks ...")
    evaluation.printFinalRanking(envId)
    print("\nGiving the mean and std running times ...")
    evaluation.printRunningTimes(envId)
    print("\nGiving the mean and std memory consumption ...")
    evaluation.printMemoryConsumption(envId)

Solving the problem

Now we can simulate all the environments.

WARNING This part takes some time: most stationary algorithms run with a time complexity linear in the horizon (i.e., \(\mathcal{O}(T)\)), while most piecewise stationary algorithms run with a time complexity quadratic in the horizon (i.e., \(\mathcal{O}(T^2)\)).

First problem
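To get a feeling for the difference between the two regimes, here is a rough counting argument, not a measurement of SMPyBandits itself. It assumes a simplified cost model in which a stationary algorithm does a constant amount of work per time step, whereas a change-detection algorithm re-scans the whole history collected so far at every step; both function names are hypothetical.

```python
def linear_cost(horizon):
    """Total work when each time step costs a constant amount (here 1 unit)."""
    return horizon

def quadratic_cost(horizon):
    """Total work when step t re-scans the t observations collected so far."""
    return sum(range(1, horizon + 1))

T = 2000
print(linear_cost(T))     # 2000
print(quadratic_cost(T))  # 2001000
```

Under this simplified model, the quadratic regime costs about a thousand times more than the linear one for \(T = 2000\), and the ratio grows linearly with the horizon.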

[74]:

%%time

envId = 0

env = evaluation.envs[envId]

# Show the problem

evaluation.plotHistoryOfMeans(envId)

Warning: forcing to use putatright = False because there is 3 items in the legend.

CPU times: user 1.55 s, sys: 301 ms, total: 1.85 s

Wall time: 1.43 s

[75]:

%%time

# Evaluate just that env

evaluation.startOneEnv(envId, env)

Evaluating environment: PieceWiseStationaryMAB(nbArms: 3, arms: [B(0.2), B(0.5), B(0.9)])

- Adding policy #1 = {'archtype': <class 'SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus'>, 'params': {}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][0]' = {'archtype': <class 'SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus'>, 'params': {}} ...

- Adding policy #2 = {'archtype': <class 'SMPyBandits.Policies.UCBalpha.UCBalpha'>, 'params': {'alpha': 1}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][1]' = {'archtype': <class 'SMPyBandits.Policies.UCBalpha.UCBalpha'>, 'params': {'alpha': 1}} ...

- Adding policy #3 = {'archtype': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'params': {'klucb': <built-in function klucbBern>}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][2]' = {'archtype': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'params': {'klucb': <built-in function klucbBern>}} ...

- Adding policy #4 = {'archtype': <class 'SMPyBandits.Policies.Thompson.Thompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.Beta.Beta'>}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][3]' = {'archtype': <class 'SMPyBandits.Policies.Thompson.Thompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.Beta.Beta'>}} ...

- Adding policy #5 = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.99}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][4]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.99}} ...

- Adding policy #6 = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.9}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][5]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.9}} ...

- Adding policy #7 = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.7}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][6]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.7}} ...

- Adding policy #8 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3R'>, 'params': {'horizon': 2000}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][7]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3R'>, 'params': {'horizon': 2000}} ...

Warning: the policy Exp3R($T=2000$, $c=4.02$, $\alpha=0.01$) tried to use default value of gamma = 0.11191812753316992 but could not set attribute self.policy.gamma to gamma (maybe it's using an Exp3 with a non-constant value of gamma).

- Adding policy #9 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3RPlusPlus'>, 'params': {'horizon': 2000}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][8]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3RPlusPlus'>, 'params': {'horizon': 2000}} ...

Warning: the policy Exp3R++($T=2000$, $c=0.73$, $\alpha=0.303$) tried to use default value of gamma = 0.11191812753316992 but could not set attribute self.policy.gamma to gamma (maybe it's using an Exp3 with a non-constant value of gamma).

- Adding policy #10 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][9]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945

- Adding policy #11 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][10]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945

- Adding policy #12 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][11]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945

- Adding policy #13 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][12]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945

- Adding policy #14 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][13]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

- Adding policy #15 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][14]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

- Adding policy #16 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][15]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

- Adding policy #17 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][16]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

- Adding policy #18 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][17]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

- Adding policy #19 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][18]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

- Adding policy #20 = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][19]' = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Warning: the formula for gamma in the paper gave gamma = 0.0, that's absurd, we use instead 0.006622516556291391

- Adding policy #21 = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][20]' = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Warning: the formula for gamma in the paper gave gamma = 0.0, that's absurd, we use instead 0.006622516556291391

- Adding policy #22 = {'archtype': <class 'SMPyBandits.Policies.SWHash_UCB.SWHash_IndexPolicy'>, 'params': {'alpha': 1.0, 'lmbda': 1, 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][21]' = {'archtype': <class 'SMPyBandits.Policies.SWHash_UCB.SWHash_IndexPolicy'>, 'params': {'alpha': 1.0, 'lmbda': 1, 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>}} ...

- Adding policy #23 = {'archtype': <class 'SMPyBandits.Policies.SlidingWindowUCB.SWUCBPlus'>, 'params': {'horizon': 2000, 'alpha': 1.0}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][22]' = {'archtype': <class 'SMPyBandits.Policies.SlidingWindowUCB.SWUCBPlus'>, 'params': {'horizon': 2000, 'alpha': 1.0}} ...

- Adding policy #24 = {'archtype': <class 'SMPyBandits.Policies.DiscountedUCB.DiscountedUCBPlus'>, 'params': {'max_nb_random_events': 5, 'alpha': 1.0, 'horizon': 2000}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][23]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedUCB.DiscountedUCBPlus'>, 'params': {'max_nb_random_events': 5, 'alpha': 1.0, 'horizon': 2000}} ...

- Adding policy #25 = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][24]' = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>, 'per_arm_restart': True}} ...

Info: creating a new policy OracleRestart-UCB($\Upsilon_T=4$, Per-Arm), with change points = [400, 800, 1200, 1600]...

- Adding policy #26 = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][25]' = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Info: creating a new policy OracleRestart-klUCB($\Upsilon_T=4$, Per-Arm), with change points = [400, 800, 1200, 1600]...

- Adding policy #27 = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][26]' = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus'>, 'per_arm_restart': True}} ...

Info: creating a new policy OracleRestart-Exp3PlusPlus($\Upsilon_T=4$, Per-Arm), with change points = [400, 800, 1200, 1600]...

- Evaluating policy #1/27: Exp3++ ...

- Evaluating policy #2/27: UCB($\alpha=1$) ...

- Evaluating policy #3/27: kl-UCB ...

- Evaluating policy #4/27: Thompson ...

- Evaluating policy #5/27: DiscountedThompson($\gamma=0.99$) ...

- Evaluating policy #6/27: DiscountedThompson($\gamma=0.9$) ...

- Evaluating policy #7/27: DiscountedThompson($\gamma=0.7$) ...

- Evaluating policy #8/27: Exp3R($T=2000$, $c=4.02$, $\alpha=0.01$) ...

- Evaluating policy #9/27: Exp3R++($T=2000$, $c=0.73$, $\alpha=0.303$) ...

- Evaluating policy #10/27: CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm) ...

- Evaluating policy #11/27: CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global) ...

- Evaluating policy #12/27: PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm) ...

- Evaluating policy #13/27: PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global) ...

- Evaluating policy #14/27: BernoulliGLR-klUCB(Per-Arm) ...

- Evaluating policy #15/27: BernoulliGLR-klUCB(Global) ...

- Evaluating policy #16/27: GaussianGLR-klUCB(Per-Arm) ...

- Evaluating policy #17/27: GaussianGLR-klUCB(Global) ...

- Evaluating policy #18/27: SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Per-Arm) ...

- Evaluating policy #19/27: SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Global) ...

- Evaluating policy #20/27: M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Per-Arm) ...

- Evaluating policy #21/27: M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Global) ...

- Evaluating policy #22/27: SW-UCB#($\lambda=1$, $\alpha=1$) ...

- Evaluating policy #23/27: SW-UCB+($\tau=493$, $\alpha=1$) ...

- Evaluating policy #24/27: D-UCB+($\alpha=1$, $\gamma=0.9875$) ...

- Evaluating policy #25/27: OracleRestart-UCB($\Upsilon_T=4$, Per-Arm) ...

- Evaluating policy #26/27: OracleRestart-klUCB($\Upsilon_T=4$, Per-Arm) ...

- Evaluating policy #27/27: OracleRestart-Exp3PlusPlus($\Upsilon_T=4$, Per-Arm) ...
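The policy names above carry tuned parameters ($\tau=493$ for SW-UCB+, $\gamma=0.9875$ for D-UCB+). The short check below shows that these values are consistent with two common tuning rules when $T=2000$ and $\Upsilon_T=5$. Both formulas are assumptions inferred from the logged numbers, not read from the SMPyBandits source, so treat this as a sanity check rather than the library's definition.

```python
from math import log, sqrt

# Horizon and assumed number of change points, as configured in the policies above.
T, upsilon_T = 2000, 5

# Assumed window size for SW-UCB+: tau = 4 * sqrt(T * log(T)), truncated to an int.
tau = int(4 * sqrt(T * log(T)))

# Assumed discount for D-UCB+: gamma = 1 - (1/4) * sqrt(Upsilon_T / T).
gamma = 1 - 0.25 * sqrt(upsilon_T / T)

print(f"tau = {tau}, gamma = {gamma:.4f}")
```

With these inputs the check gives `tau = 493` and `gamma = 0.9875`, matching the names printed in the log.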

CPU times: user 16.6 s, sys: 403 ms, total: 17 s

Wall time: 22min 52s
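The CUSUM and PHT policies log the constants `C2`, `C1-`, `C1+`, `h` and `alpha` computed from `T`, `UpsilonT`, `K`, `epsilon`, `lmbda` and `M`. The sketch below is a reconstruction that reproduces the logged values from those inputs. The function name `cusum_thresholds` and the `scale=0.01` factor are hypothetical: the factor is simply the value that matches the logged `alpha`, and the second term of `C2` is negligible here, so the log cannot confirm its exact form.

```python
from math import ceil, comb, exp, floor, log, sqrt

def cusum_thresholds(T, upsilon_T, K, epsilon, lmbda, M, scale=0.01):
    """Recompute (C2, C1_minus, C1_plus, h, alpha) from the logged inputs.

    `scale` is an assumption: 0.01 is the value that matches the logged alpha.
    """
    two_eps_pow = (2 * epsilon) ** M
    # Assumed form of C2; the exponential term is about 1e-22 for the logged inputs.
    C2 = log(3) + 2 * exp(-2 * epsilon**2 * M) / lmbda
    C1_minus = log(
        4 * epsilon / (1 - epsilon) ** 2 * comb(M, floor(2 * epsilon * M)) * two_eps_pow + 1
    )
    C1_plus = log(
        4 * epsilon / (1 + epsilon) ** 2 * comb(M, ceil(2 * epsilon * M)) * two_eps_pow + 1
    )
    C1 = min(C1_minus, C1_plus)
    # Detection threshold h and forced-exploration rate alpha.
    h = log(T / upsilon_T) / C1
    alpha = scale * K * sqrt(C2 * upsilon_T / (C1 * T) * log(T / upsilon_T))
    return C2, C1_minus, C1_plus, h, alpha

C2, C1_minus, C1_plus, h, alpha = cusum_thresholds(
    T=2000, upsilon_T=5, K=3, epsilon=0.5, lmbda=1, M=100
)
print(f"C2 = {C2}, C1- = {C1_minus}, C1+ = {C1_plus}, h = {h}, alpha = {alpha}")
```

For the logged inputs this gives `C1- = log(9)` and `C1+ = log(17/9)`, so `C1` is the smaller value `C1+`, which agrees with the line "so C1 = 0.6359887667199967" in the output.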

[76]:

_ = printAll(evaluation, envId)

Giving the vector of final regrets ...

For policy #0 called 'Exp3++' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 476

Mean of last regrets R_T = 476

Median of last regrets R_T = 476

Max of last regrets R_T = 476

STD of last regrets R_T = 5.68e-14

For policy #1 called 'UCB($\alpha=1$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 87.5

Mean of last regrets R_T = 87.5

Median of last regrets R_T = 87.5

Max of last regrets R_T = 87.5

STD of last regrets R_T = 1.42e-14

For policy #2 called 'kl-UCB' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 92.6

Mean of last regrets R_T = 92.6

Median of last regrets R_T = 92.6

Max of last regrets R_T = 92.6

STD of last regrets R_T = 1.42e-14

For policy #3 called 'Thompson' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 215

Mean of last regrets R_T = 215

Median of last regrets R_T = 215

Max of last regrets R_T = 215

STD of last regrets R_T = 0

For policy #4 called 'DiscountedThompson($\gamma=0.99$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 27.4

Mean of last regrets R_T = 27.4

Median of last regrets R_T = 27.4

Max of last regrets R_T = 27.4

STD of last regrets R_T = 0

For policy #5 called 'DiscountedThompson($\gamma=0.9$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 171

Mean of last regrets R_T = 171

Median of last regrets R_T = 171

Max of last regrets R_T = 171

STD of last regrets R_T = 0

For policy #6 called 'DiscountedThompson($\gamma=0.7$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 426

Mean of last regrets R_T = 426

Median of last regrets R_T = 426

Max of last regrets R_T = 426

STD of last regrets R_T = 5.68e-14

For policy #7 called 'Exp3R($T=2000$, $c=4.02$, $\alpha=0.01$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 587

Mean of last regrets R_T = 587

Median of last regrets R_T = 587

Max of last regrets R_T = 587

STD of last regrets R_T = 0

For policy #8 called 'Exp3R++($T=2000$, $c=0.73$, $\alpha=0.303$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 550

Mean of last regrets R_T = 550

Median of last regrets R_T = 550

Max of last regrets R_T = 550

STD of last regrets R_T = 1.14e-13

For policy #9 called 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 25.5

Mean of last regrets R_T = 25.5

Median of last regrets R_T = 25.5

Max of last regrets R_T = 25.5

STD of last regrets R_T = 0

For policy #10 called 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 31.6

Mean of last regrets R_T = 31.6

Median of last regrets R_T = 31.6

Max of last regrets R_T = 31.6

STD of last regrets R_T = 0

For policy #11 called 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 28.8

Mean of last regrets R_T = 28.8

Median of last regrets R_T = 28.8

Max of last regrets R_T = 28.8

STD of last regrets R_T = 0

For policy #12 called 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 24.4

Mean of last regrets R_T = 24.4

Median of last regrets R_T = 24.4

Max of last regrets R_T = 24.4

STD of last regrets R_T = 0

For policy #13 called 'BernoulliGLR-klUCB(Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 21.1

Mean of last regrets R_T = 21.1

Median of last regrets R_T = 21.1

Max of last regrets R_T = 21.1

STD of last regrets R_T = 0

For policy #14 called 'BernoulliGLR-klUCB(Global)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 37.6

Mean of last regrets R_T = 37.6

Median of last regrets R_T = 37.6

Max of last regrets R_T = 37.6

STD of last regrets R_T = 0

For policy #15 called 'GaussianGLR-klUCB(Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 27.8

Mean of last regrets R_T = 27.8

Median of last regrets R_T = 27.8

Max of last regrets R_T = 27.8

STD of last regrets R_T = 0

For policy #16 called 'GaussianGLR-klUCB(Global)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 36.9

Mean of last regrets R_T = 36.9

Median of last regrets R_T = 36.9

Max of last regrets R_T = 36.9

STD of last regrets R_T = 0

For policy #17 called 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 89.2

Mean of last regrets R_T = 89.2

Median of last regrets R_T = 89.2

Max of last regrets R_T = 89.2

STD of last regrets R_T = 1.42e-14

For policy #18 called 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Global)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 127

Mean of last regrets R_T = 127

Median of last regrets R_T = 127

Max of last regrets R_T = 127

STD of last regrets R_T = 1.42e-14

For policy #19 called 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 28.4

Mean of last regrets R_T = 28.4

Median of last regrets R_T = 28.4

Max of last regrets R_T = 28.4

STD of last regrets R_T = 0

For policy #20 called 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Global)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 31.5

Mean of last regrets R_T = 31.5

Median of last regrets R_T = 31.5

Max of last regrets R_T = 31.5

STD of last regrets R_T = 0

For policy #21 called 'SW-UCB#($\lambda=1$, $\alpha=1$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 79.5

Mean of last regrets R_T = 79.5

Median of last regrets R_T = 79.5

Max of last regrets R_T = 79.5

STD of last regrets R_T = 1.42e-14

For policy #22 called 'SW-UCB+($\tau=493$, $\alpha=1$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 77.5

Mean of last regrets R_T = 77.5

Median of last regrets R_T = 77.5

Max of last regrets R_T = 77.5

STD of last regrets R_T = 0

For policy #23 called 'D-UCB+($\alpha=1$, $\gamma=0.9875$)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 578

Mean of last regrets R_T = 578

Median of last regrets R_T = 578

Max of last regrets R_T = 578

STD of last regrets R_T = 0

For policy #24 called 'OracleRestart-UCB($\Upsilon_T=4$, Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 85.9

Mean of last regrets R_T = 85.9

Median of last regrets R_T = 85.9

Max of last regrets R_T = 85.9

STD of last regrets R_T = 1.42e-14

For policy #25 called 'OracleRestart-klUCB($\Upsilon_T=4$, Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 56.8

Mean of last regrets R_T = 56.8

Median of last regrets R_T = 56.8

Max of last regrets R_T = 56.8

STD of last regrets R_T = 0

For policy #26 called 'OracleRestart-Exp3PlusPlus($\Upsilon_T=4$, Per-Arm)' ...

Last regrets (for all repetitions) have:

Min of last regrets R_T = 483

Mean of last regrets R_T = 483

Median of last regrets R_T = 483

Max of last regrets R_T = 483

STD of last regrets R_T = 1.14e-13

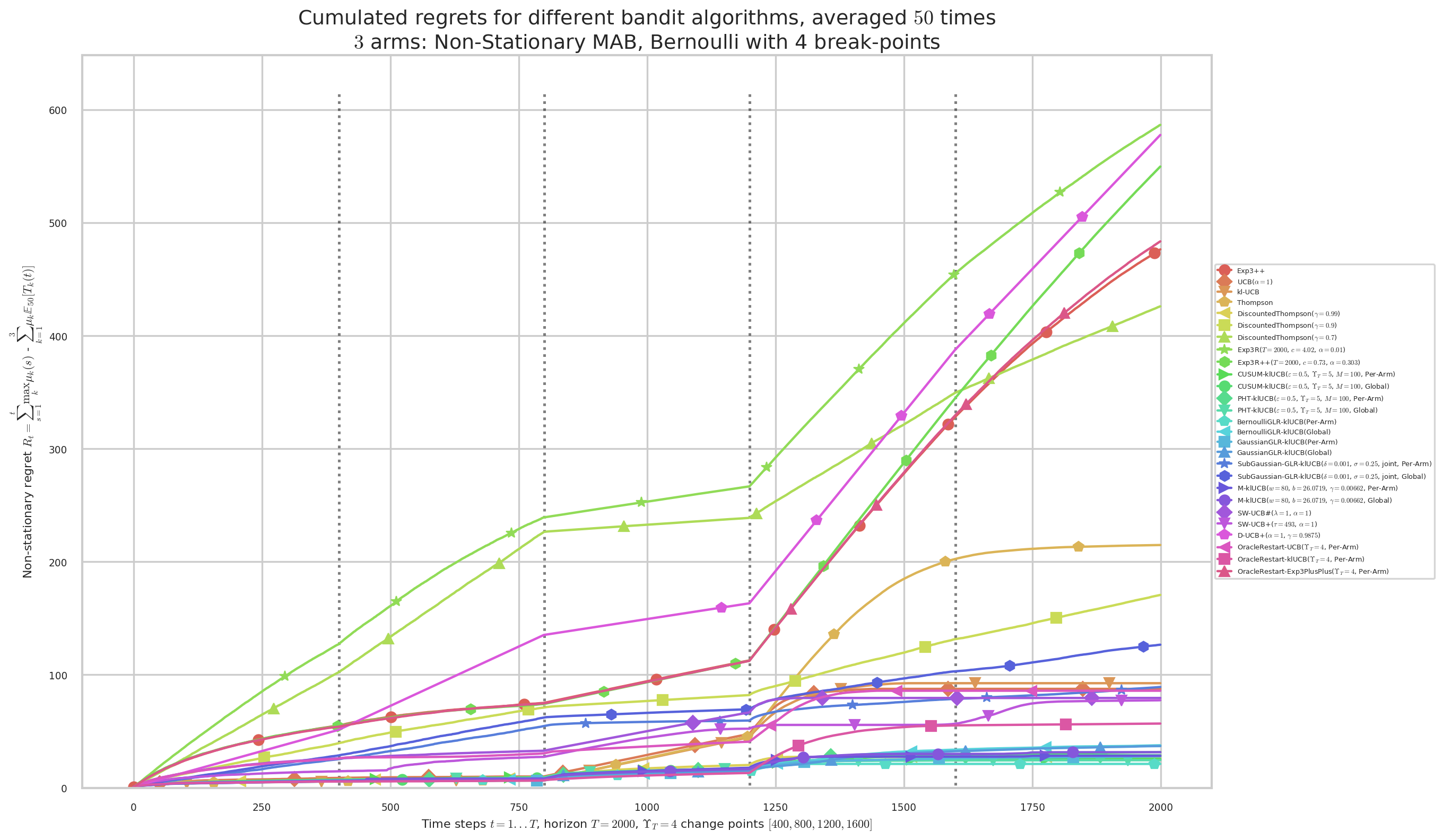

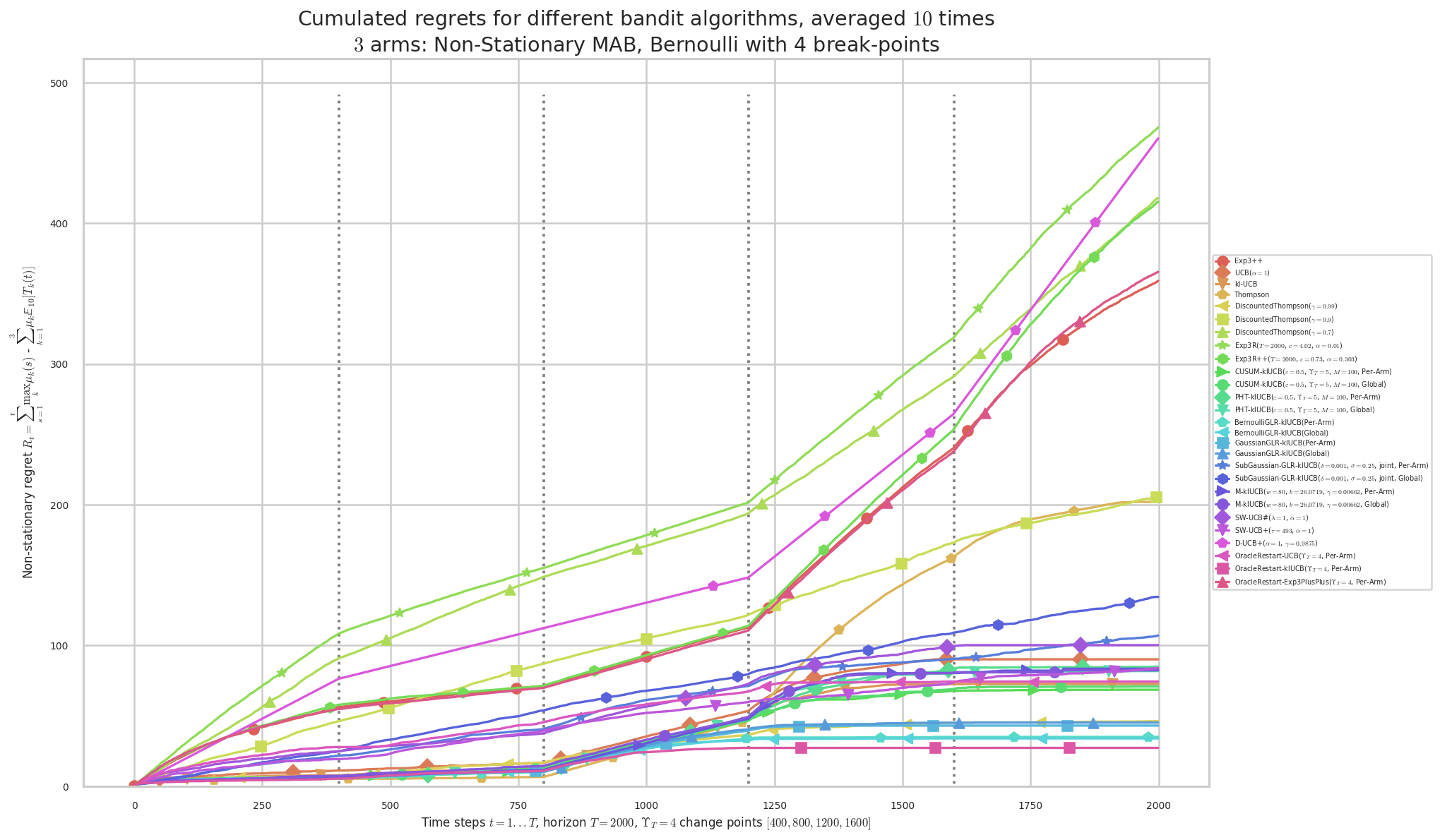

Giving the final ranks ...

Final ranking for this environment #0 :

- Policy 'BernoulliGLR-klUCB(Per-Arm)' was ranked 1 / 27 for this simulation (last regret = 21.146).

- Policy 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' was ranked 2 / 27 for this simulation (last regret = 24.43).

- Policy 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' was ranked 3 / 27 for this simulation (last regret = 25.476).

- Policy 'DiscountedThompson($\gamma=0.99$)' was ranked 4 / 27 for this simulation (last regret = 27.378).

- Policy 'GaussianGLR-klUCB(Per-Arm)' was ranked 5 / 27 for this simulation (last regret = 27.776).

- Policy 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Per-Arm)' was ranked 6 / 27 for this simulation (last regret = 28.386).

- Policy 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' was ranked 7 / 27 for this simulation (last regret = 28.796).

- Policy 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Global)' was ranked 8 / 27 for this simulation (last regret = 31.53).

- Policy 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' was ranked 9 / 27 for this simulation (last regret = 31.612).

- Policy 'GaussianGLR-klUCB(Global)' was ranked 10 / 27 for this simulation (last regret = 36.912).

- Policy 'BernoulliGLR-klUCB(Global)' was ranked 11 / 27 for this simulation (last regret = 37.576).

- Policy 'OracleRestart-klUCB($\Upsilon_T=4$, Per-Arm)' was ranked 12 / 27 for this simulation (last regret = 56.806).

- Policy 'SW-UCB+($\tau=493$, $\alpha=1$)' was ranked 13 / 27 for this simulation (last regret = 77.386).

- Policy 'SW-UCB#($\lambda=1$, $\alpha=1$)' was ranked 14 / 27 for this simulation (last regret = 79.482).

- Policy 'OracleRestart-UCB($\Upsilon_T=4$, Per-Arm)' was ranked 15 / 27 for this simulation (last regret = 85.9).

- Policy 'UCB($\alpha=1$)' was ranked 16 / 27 for this simulation (last regret = 87.53).

- Policy 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Per-Arm)' was ranked 17 / 27 for this simulation (last regret = 88.882).

- Policy 'kl-UCB' was ranked 18 / 27 for this simulation (last regret = 92.558).

- Policy 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Global)' was ranked 19 / 27 for this simulation (last regret = 126.09).

- Policy 'DiscountedThompson($\gamma=0.9$)' was ranked 20 / 27 for this simulation (last regret = 169.78).

- Policy 'Thompson' was ranked 21 / 27 for this simulation (last regret = 214.75).

- Policy 'DiscountedThompson($\gamma=0.7$)' was ranked 22 / 27 for this simulation (last regret = 424.28).

- Policy 'Exp3++' was ranked 23 / 27 for this simulation (last regret = 473.69).

- Policy 'OracleRestart-Exp3PlusPlus($\Upsilon_T=4$, Per-Arm)' was ranked 24 / 27 for this simulation (last regret = 480.71).

- Policy 'Exp3R++($T=2000$, $c=0.73$, $\alpha=0.303$)' was ranked 25 / 27 for this simulation (last regret = 545.47).

- Policy 'D-UCB+($\alpha=1$, $\gamma=0.9875$)' was ranked 26 / 27 for this simulation (last regret = 573.37).

- Policy 'Exp3R($T=2000$, $c=4.02$, $\alpha=0.01$)' was ranked 27 / 27 for this simulation (last regret = 583.93).

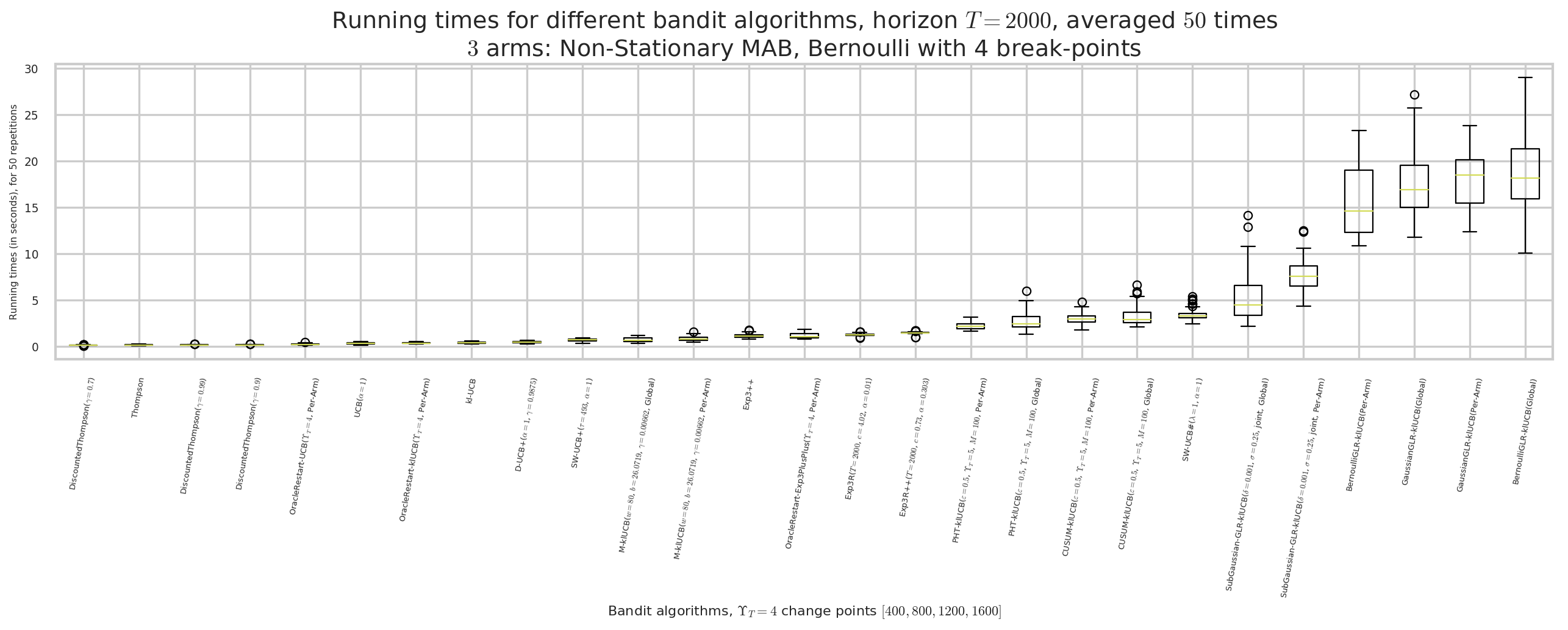

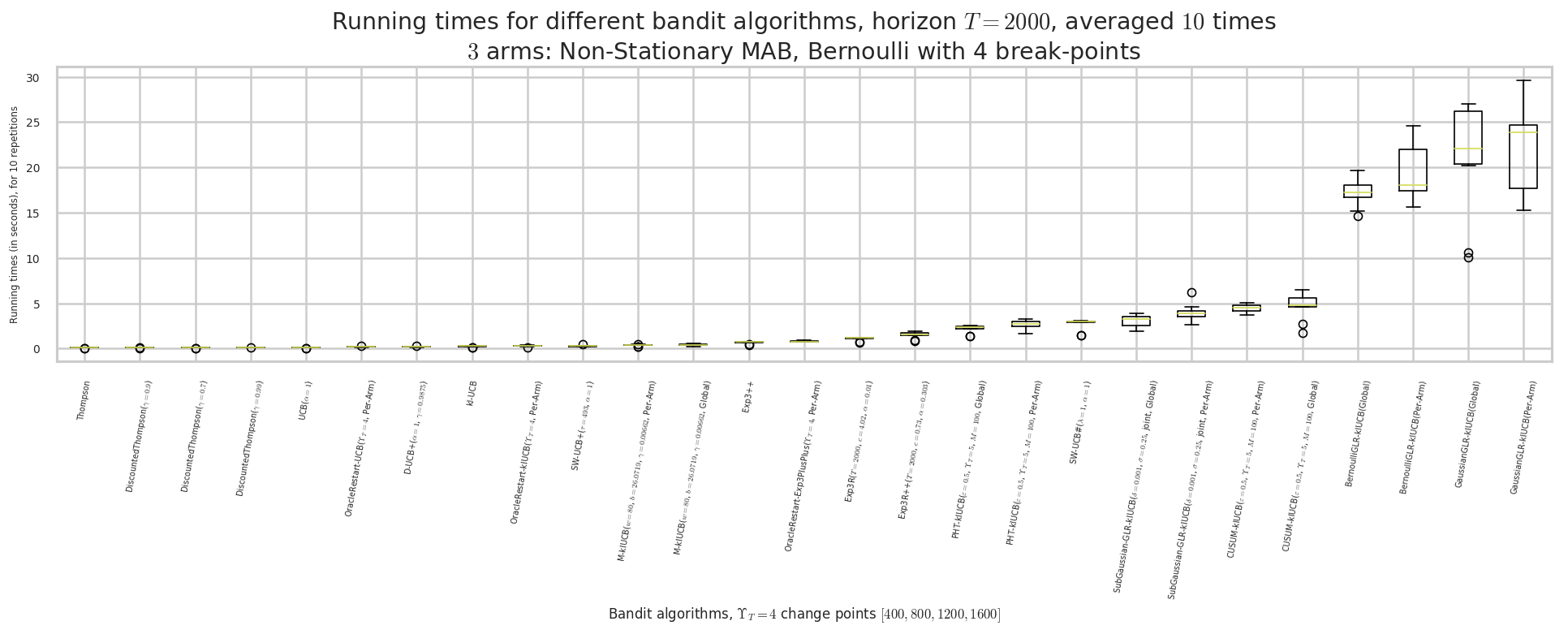
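Since this first problem is the one where local (per-arm) restarts are expected to do better, it can help to compare the two restart modes family by family. The sketch below copies the final regrets from the ranking above into a dictionary (the variable names are hypothetical) and counts how often the per-arm variant has the lower regret. It only restates the logged numbers for this one simulation and says nothing about statistical significance.

```python
# Final regrets copied from the ranking above (environment #0).
final_regret = {
    "CUSUM-klUCB": {"Per-Arm": 25.476, "Global": 31.612},
    "PHT-klUCB": {"Per-Arm": 28.796, "Global": 24.43},
    "BernoulliGLR-klUCB": {"Per-Arm": 21.146, "Global": 37.576},
    "GaussianGLR-klUCB": {"Per-Arm": 27.776, "Global": 36.912},
    "SubGaussian-GLR-klUCB": {"Per-Arm": 88.882, "Global": 126.09},
    "M-klUCB": {"Per-Arm": 28.386, "Global": 31.53},
}

# Families for which the per-arm restart has the lower final regret.
per_arm_wins = [name for name, r in final_regret.items() if r["Per-Arm"] < r["Global"]]

for name, r in final_regret.items():
    gap = r["Global"] - r["Per-Arm"]
    print(f"{name:24s} Global - Per-Arm = {gap:+.3f}")
print(f"Per-arm restart wins for {len(per_arm_wins)} of {len(final_regret)} families")
```

In this run the per-arm variant has the lower regret for every family except PHT-klUCB.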

Giving the mean and std running times ...

For policy #6 called 'DiscountedThompson($\gamma=0.7$)' ...

120 ms ± 21.2 ms per loop (mean ± std. dev. of 50 runs)

For policy #3 called 'Thompson' ...

142 ms ± 46.4 ms per loop (mean ± std. dev. of 50 runs)

For policy #4 called 'DiscountedThompson($\gamma=0.99$)' ...

145 ms ± 38.3 ms per loop (mean ± std. dev. of 50 runs)

For policy #5 called 'DiscountedThompson($\gamma=0.9$)' ...

145 ms ± 31.8 ms per loop (mean ± std. dev. of 50 runs)

For policy #24 called 'OracleRestart-UCB($\Upsilon_T=4$, Per-Arm)' ...

215 ms ± 73.4 ms per loop (mean ± std. dev. of 50 runs)

For policy #1 called 'UCB($\alpha=1$)' ...

316 ms ± 84.7 ms per loop (mean ± std. dev. of 50 runs)

For policy #25 called 'OracleRestart-klUCB($\Upsilon_T=4$, Per-Arm)' ...

361 ms ± 67.8 ms per loop (mean ± std. dev. of 50 runs)

For policy #2 called 'kl-UCB' ...

365 ms ± 81.4 ms per loop (mean ± std. dev. of 50 runs)

For policy #23 called 'D-UCB+($\alpha=1$, $\gamma=0.9875$)' ...

432 ms ± 103 ms per loop (mean ± std. dev. of 50 runs)

For policy #22 called 'SW-UCB+($\tau=493$, $\alpha=1$)' ...

639 ms ± 150 ms per loop (mean ± std. dev. of 50 runs)

For policy #20 called 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Global)' ...

688 ms ± 243 ms per loop (mean ± std. dev. of 50 runs)

For policy #19 called 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Per-Arm)' ...

821 ms ± 245 ms per loop (mean ± std. dev. of 50 runs)

For policy #0 called 'Exp3++' ...

1.13 s ± 236 ms per loop (mean ± std. dev. of 50 runs)

For policy #26 called 'OracleRestart-Exp3PlusPlus($\Upsilon_T=4$, Per-Arm)' ...

1.14 s ± 275 ms per loop (mean ± std. dev. of 50 runs)

For policy #7 called 'Exp3R($T=2000$, $c=4.02$, $\alpha=0.01$)' ...

1.27 s ± 134 ms per loop (mean ± std. dev. of 50 runs)

For policy #8 called 'Exp3R++($T=2000$, $c=0.73$, $\alpha=0.303$)' ...

1.45 s ± 121 ms per loop (mean ± std. dev. of 50 runs)

For policy #11 called 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' ...

2.21 s ± 329 ms per loop (mean ± std. dev. of 50 runs)

For policy #12 called 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' ...

2.75 s ± 951 ms per loop (mean ± std. dev. of 50 runs)

For policy #9 called 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' ...

2.99 s ± 586 ms per loop (mean ± std. dev. of 50 runs)

For policy #10 called 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' ...

3.22 s ± 1.02 s per loop (mean ± std. dev. of 50 runs)

For policy #21 called 'SW-UCB#($\lambda=1$, $\alpha=1$)' ...

3.46 s ± 643 ms per loop (mean ± std. dev. of 50 runs)

For policy #18 called 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Global)' ...

5.21 s ± 2.65 s per loop (mean ± std. dev. of 50 runs)

For policy #17 called 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Per-Arm)' ...

7.67 s ± 1.75 s per loop (mean ± std. dev. of 50 runs)

For policy #13 called 'BernoulliGLR-klUCB(Per-Arm)' ...

15.7 s ± 3.76 s per loop (mean ± std. dev. of 50 runs)

For policy #16 called 'GaussianGLR-klUCB(Global)' ...

17.4 s ± 3.56 s per loop (mean ± std. dev. of 50 runs)

For policy #15 called 'GaussianGLR-klUCB(Per-Arm)' ...

18 s ± 2.78 s per loop (mean ± std. dev. of 50 runs)

For policy #14 called 'BernoulliGLR-klUCB(Global)' ...

18.5 s ± 3.84 s per loop (mean ± std. dev. of 50 runs)

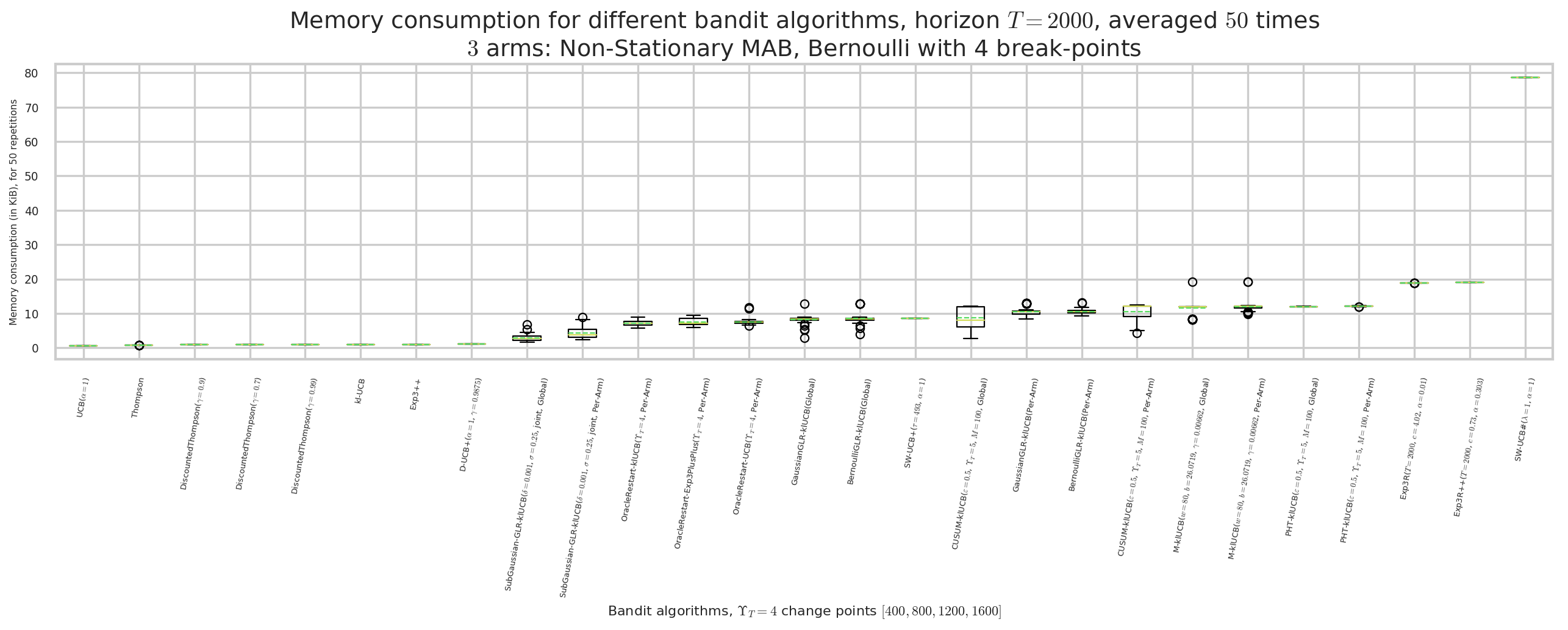

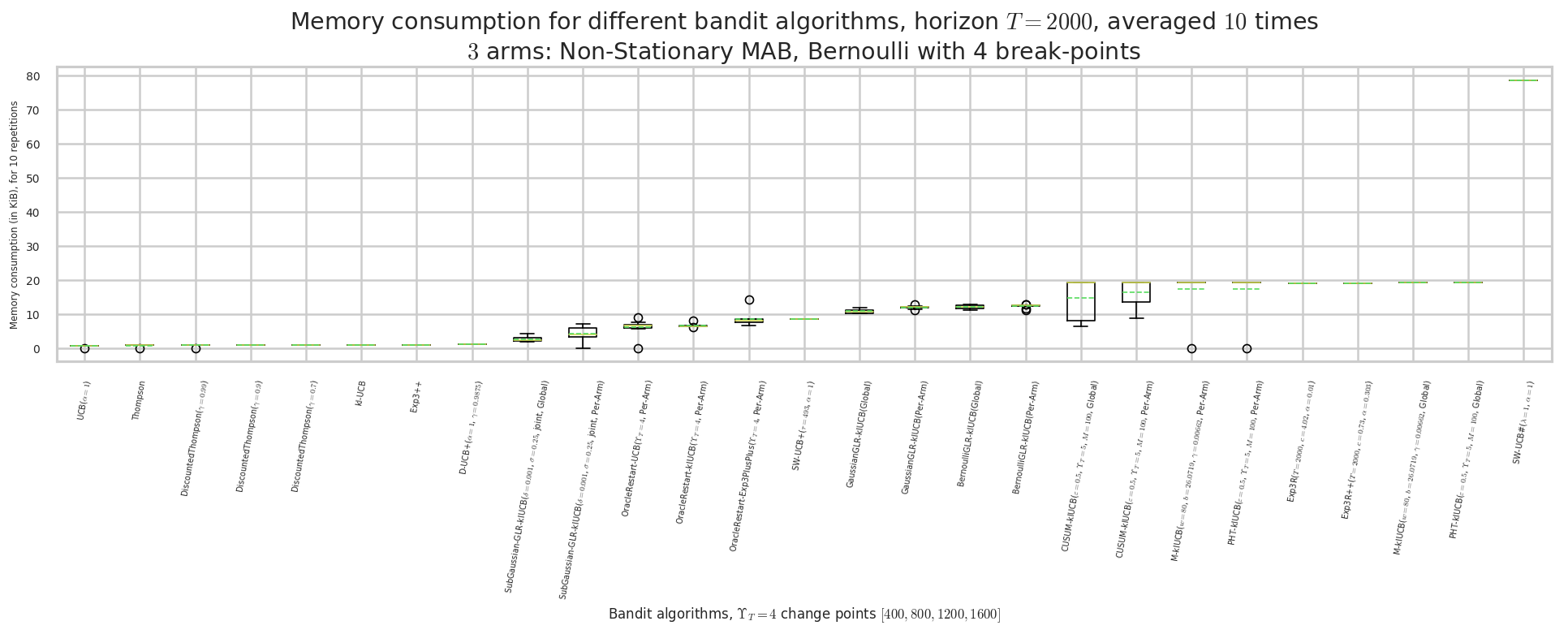
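The running times above mix units (ms and s), which makes them awkward to compare directly. The helper below is a hypothetical utility, not part of SMPyBandits: it parses a "mean ± std per loop" line into seconds, so that, for example, the cost of the GLR detector can be set against CUSUM or plain kl-UCB.

```python
import re

# Conversion factors to seconds for the units that can appear in timing lines.
_UNITS = {"ns": 1e-9, "µs": 1e-6, "us": 1e-6, "ms": 1e-3, "s": 1.0, "min": 60.0}
_PATTERN = re.compile(r"([\d.]+)\s*(\S+)\s*±\s*([\d.]+)\s*(\S+)\s+per loop")

def parse_timing(line):
    """Return (mean, std) in seconds from a '<mean> <unit> ± <std> <unit> per loop' line."""
    match = _PATTERN.search(line)
    if match is None:
        raise ValueError(f"not a timing line: {line!r}")
    mean, mean_unit, std, std_unit = match.groups()
    return float(mean) * _UNITS[mean_unit], float(std) * _UNITS[std_unit]

# Three lines copied from the output above.
glr, _ = parse_timing("15.7 s ± 3.76 s per loop (mean ± std. dev. of 50 runs)")
cusum, _ = parse_timing("2.99 s ± 586 ms per loop (mean ± std. dev. of 50 runs)")
klucb, _ = parse_timing("365 ms ± 81.4 ms per loop (mean ± std. dev. of 50 runs)")

print(f"BernoulliGLR / CUSUM (Per-Arm): {glr / cusum:.1f}x")
print(f"BernoulliGLR / kl-UCB: {glr / klucb:.0f}x")
```

On these logged means, BernoulliGLR-klUCB (Per-Arm) is roughly 5 times slower than CUSUM-klUCB (Per-Arm) and roughly 43 times slower than kl-UCB, while having the lowest final regret in the ranking.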

Giving the mean and std memory consumption ...

For policy #1 called 'UCB($\alpha=1$)' ...

636 B ± 0 B (mean ± std. dev. of 50 runs)

For policy #3 called 'Thompson' ...

826.9 B ± 0.3 B (mean ± std. dev. of 50 runs)

For policy #5 called 'DiscountedThompson($\gamma=0.9$)' ...

994 B ± 0 B (mean ± std. dev. of 50 runs)

For policy #6 called 'DiscountedThompson($\gamma=0.7$)' ...

994 B ± 0 B (mean ± std. dev. of 50 runs)

For policy #4 called 'DiscountedThompson($\gamma=0.99$)' ...

995 B ± 0 B (mean ± std. dev. of 50 runs)

For policy #2 called 'kl-UCB' ...

1007 B ± 0 B (mean ± std. dev. of 50 runs)

For policy #0 called 'Exp3++' ...

1 KiB ± 0 B (mean ± std. dev. of 50 runs)

For policy #23 called 'D-UCB+($\alpha=1$, $\gamma=0.9875$)' ...

1.2 KiB ± 0 B (mean ± std. dev. of 50 runs)

For policy #18 called 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Global)' ...

2.9 KiB ± 990.6 B (mean ± std. dev. of 50 runs)

For policy #17 called 'SubGaussian-GLR-klUCB($\delta=0.001$, $\sigma=0.25$, joint, Per-Arm)' ...

4.4 KiB ± 1.7 KiB (mean ± std. dev. of 50 runs)

For policy #25 called 'OracleRestart-klUCB($\Upsilon_T=4$, Per-Arm)' ...

7.2 KiB ± 720.5 B (mean ± std. dev. of 50 runs)

For policy #26 called 'OracleRestart-Exp3PlusPlus($\Upsilon_T=4$, Per-Arm)' ...

7.5 KiB ± 1003.7 B (mean ± std. dev. of 50 runs)

For policy #24 called 'OracleRestart-UCB($\Upsilon_T=4$, Per-Arm)' ...

7.6 KiB ± 934.6 B (mean ± std. dev. of 50 runs)

For policy #16 called 'GaussianGLR-klUCB(Global)' ...

8.1 KiB ± 1.2 KiB (mean ± std. dev. of 50 runs)

For policy #14 called 'BernoulliGLR-klUCB(Global)' ...

8.5 KiB ± 1.7 KiB (mean ± std. dev. of 50 runs)

For policy #22 called 'SW-UCB+($\tau=493$, $\alpha=1$)' ...

8.6 KiB ± 0 B (mean ± std. dev. of 50 runs)

For policy #10 called 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' ...

8.8 KiB ± 3.2 KiB (mean ± std. dev. of 50 runs)

For policy #15 called 'GaussianGLR-klUCB(Per-Arm)' ...

10.5 KiB ± 1.2 KiB (mean ± std. dev. of 50 runs)

For policy #13 called 'BernoulliGLR-klUCB(Per-Arm)' ...

10.6 KiB ± 757.1 B (mean ± std. dev. of 50 runs)

For policy #9 called 'CUSUM-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' ...

10.6 KiB ± 2.3 KiB (mean ± std. dev. of 50 runs)

For policy #20 called 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Global)' ...

11.5 KiB ± 1.7 KiB (mean ± std. dev. of 50 runs)

For policy #19 called 'M-klUCB($w=80$, $b=26.0719$, $\gamma=0.00662$, Per-Arm)' ...

12 KiB ± 1.7 KiB (mean ± std. dev. of 50 runs)

For policy #12 called 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Global)' ...

12 KiB ± 49.4 B (mean ± std. dev. of 50 runs)

For policy #11 called 'PHT-klUCB($\varepsilon=0.5$, $\Upsilon_T=5$, $M=100$, Per-Arm)' ...

12.1 KiB ± 73.3 B (mean ± std. dev. of 50 runs)

For policy #7 called 'Exp3R($T=2000$, $c=4.02$, $\alpha=0.01$)' ...

18.9 KiB ± 0.5 B (mean ± std. dev. of 50 runs)

For policy #8 called 'Exp3R++($T=2000$, $c=0.73$, $\alpha=0.303$)' ...

19.1 KiB ± 0 B (mean ± std. dev. of 50 runs)

For policy #21 called 'SW-UCB#($\lambda=1$, $\alpha=1$)' ...

78.6 KiB ± 0 B (mean ± std. dev. of 50 runs)

Second problem

[33]:

%%time

envId = 1

env = evaluation.envs[envId]

# Show the problem

evaluation.plotHistoryOfMeans(envId)

Warning: forcing to use putatright = False because there is 3 items in the legend.

CPU times: user 1.05 s, sys: 368 ms, total: 1.42 s

Wall time: 959 ms

[34]:

%%time

# Evaluate just that env

evaluation.startOneEnv(envId, env)

Evaluating environment: PieceWiseStationaryMAB(nbArms: 3, arms: [B(0.4), B(0.5), B(0.9)])

- Adding policy #1 = {'archtype': <class 'SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus'>, 'params': {}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][0]' = {'archtype': <class 'SMPyBandits.Policies.Exp3PlusPlus.Exp3PlusPlus'>, 'params': {}} ...

- Adding policy #2 = {'archtype': <class 'SMPyBandits.Policies.UCBalpha.UCBalpha'>, 'params': {'alpha': 1}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][1]' = {'archtype': <class 'SMPyBandits.Policies.UCBalpha.UCBalpha'>, 'params': {'alpha': 1}} ...

- Adding policy #3 = {'archtype': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'params': {'klucb': <built-in function klucbBern>}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][2]' = {'archtype': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'params': {'klucb': <built-in function klucbBern>}} ...

- Adding policy #4 = {'archtype': <class 'SMPyBandits.Policies.Thompson.Thompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.Beta.Beta'>}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][3]' = {'archtype': <class 'SMPyBandits.Policies.Thompson.Thompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.Beta.Beta'>}} ...

- Adding policy #5 = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.99}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][4]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.99}} ...

- Adding policy #6 = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.9}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][5]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.9}} ...

- Adding policy #7 = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.7}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][6]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedThompson.DiscountedThompson'>, 'params': {'posterior': <class 'SMPyBandits.Policies.Posterior.DiscountedBeta.DiscountedBeta'>, 'gamma': 0.7}} ...

- Adding policy #8 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3R'>, 'params': {'horizon': 2000}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][7]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3R'>, 'params': {'horizon': 2000}} ...

Warning: the policy Exp3R($T=2000$, $c=4.02$, $\alpha=0.01$) tried to use default value of gamma = 0.11191812753316992 but could not set attribute self.policy.gamma to gamma (maybe it's using an Exp3 with a non-constant value of gamma).

- Adding policy #9 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3RPlusPlus'>, 'params': {'horizon': 2000}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][8]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.Exp3RPlusPlus'>, 'params': {'horizon': 2000}} ...

Warning: the policy Exp3R++($T=2000$, $c=0.73$, $\alpha=0.303$) tried to use default value of gamma = 0.11191812753316992 but could not set attribute self.policy.gamma to gamma (maybe it's using an Exp3 with a non-constant value of gamma).

- Adding policy #10 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][9]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945

- Adding policy #11 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][10]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.CUSUM_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945

- Adding policy #12 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][11]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945

- Adding policy #13 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][12]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.PHT_IndexPolicy'>, 'params': {'horizon': 2000, 'max_nb_random_events': 5, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

compute_h_alpha_from_input_parameters__CUSUM() with:

T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100

Gave C2 = 1.0986122886681098, C1- = 2.1972245773362196 and C1+ = 0.6359887667199967 so C1 = 0.6359887667199967, and h = 9.420708132956397 and alpha = 0.0048256437784349945
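The four identical `compute_h_alpha_from_input_parameters__CUSUM()` reports above can be checked by hand. The sketch below is a reconstruction, not the library's code: the function name `cusum_h_alpha` is hypothetical, the formulas are the ones that reproduce the logged values of C2, C1-, C1+, h and alpha, and the final scaling of alpha by 0.01 is an assumption inferred from the printed number rather than read from the SMPyBandits source.

```python
import math

def cusum_h_alpha(T, upsilon_T, K, epsilon, lmbda, M, scale_factor=0.01):
    """Recompute the constants printed in the log (hypothetical helper).

    scale_factor=0.01 is an assumption chosen to match the logged alpha.
    """
    # C2 = log(3) + 2 exp(-2 epsilon^2 M) / lambda
    C2 = math.log(3) + 2 * math.exp(-2 * epsilon**2 * M) / lmbda
    # C1- and C1+ differ by the sign in (1 -/+ epsilon)^2 and by floor vs ceil
    k_minus = math.floor(2 * epsilon * M)
    k_plus = math.ceil(2 * epsilon * M)
    C1_minus = math.log(
        4 * epsilon / (1 - epsilon) ** 2 * math.comb(M, k_minus) * (2 * epsilon) ** M + 1
    )
    C1_plus = math.log(
        4 * epsilon / (1 + epsilon) ** 2 * math.comb(M, k_plus) * (2 * epsilon) ** M + 1
    )
    C1 = min(C1_minus, C1_plus)
    # Detection threshold h and forced-exploration rate alpha
    h = math.log(T / upsilon_T) / C1
    alpha = K * math.sqrt(C2 * upsilon_T / (C1 * T) * math.log(T / upsilon_T))
    return C2, C1_minus, C1_plus, h, scale_factor * alpha

# Inputs copied from the log: T = 2000, UpsilonT = 5, K = 3, epsilon = 0.5, lmbda = 1, M = 100
C2, C1_minus, C1_plus, h, alpha = cusum_h_alpha(2000, 5, 3, 0.5, 1, 100)
print(C2, C1_minus, C1_plus, h, alpha)
```

With epsilon = 0.5 both binomial coefficients equal 1 and (2 epsilon)^M = 1, so C1- = log(9) and C1+ = log(17/9). C1 is the smaller of the two (C1+), and h = log(400) / C1 is approximately 9.42, in agreement with the log.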

- Adding policy #14 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][13]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

- Adding policy #15 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][14]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.BernoulliGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

- Adding policy #16 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][15]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

- Adding policy #17 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][16]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.GaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

- Adding policy #18 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][17]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

- Adding policy #19 = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][18]' = {'archtype': <class 'SMPyBandits.Policies.CD_UCB.SubGaussianGLR_IndexPolicy'>, 'params': {'horizon': 2000, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

- Adding policy #20 = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][19]' = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': True}} ...

Warning: the formula for gamma in the paper gave gamma = 0.0, that's absurd, we use instead 0.006622516556291391

- Adding policy #21 = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][20]' = {'archtype': <class 'SMPyBandits.Policies.Monitored_UCB.Monitored_IndexPolicy'>, 'params': {'horizon': 2000, 'w': 80, 'b': 26.07187326473663, 'policy': <class 'SMPyBandits.Policies.klUCB.klUCB'>, 'per_arm_restart': False}} ...

Warning: the formula for gamma in the paper gave gamma = 0.0, that's absurd, we use instead 0.006622516556291391

- Adding policy #22 = {'archtype': <class 'SMPyBandits.Policies.SWHash_UCB.SWHash_IndexPolicy'>, 'params': {'alpha': 1.0, 'lmbda': 1, 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][21]' = {'archtype': <class 'SMPyBandits.Policies.SWHash_UCB.SWHash_IndexPolicy'>, 'params': {'alpha': 1.0, 'lmbda': 1, 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>}} ...

- Adding policy #23 = {'archtype': <class 'SMPyBandits.Policies.SlidingWindowUCB.SWUCBPlus'>, 'params': {'horizon': 2000, 'alpha': 1.0}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][22]' = {'archtype': <class 'SMPyBandits.Policies.SlidingWindowUCB.SWUCBPlus'>, 'params': {'horizon': 2000, 'alpha': 1.0}} ...

- Adding policy #24 = {'archtype': <class 'SMPyBandits.Policies.DiscountedUCB.DiscountedUCBPlus'>, 'params': {'max_nb_random_events': 5, 'alpha': 1.0, 'horizon': 2000}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][23]' = {'archtype': <class 'SMPyBandits.Policies.DiscountedUCB.DiscountedUCBPlus'>, 'params': {'max_nb_random_events': 5, 'alpha': 1.0, 'horizon': 2000}} ...

- Adding policy #25 = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>, 'per_arm_restart': True}} ...

Creating this policy from a dictionnary 'self.cfg['policies'][24]' = {'archtype': <class 'SMPyBandits.Policies.OracleSequentiallyRestartPolicy.OracleSequentiallyRestartPolicy'>, 'params': {'changePoints': array([ 0, 400, 800, 1200, 1600]), 'policy': <class 'SMPyBandits.Policies.UCB.UCB'>, 'per_arm_restart': True}} ...